An Introduction to the Jena API

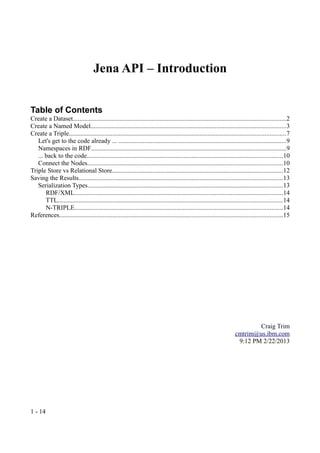

- 1. Jena API – Introduction

  Table of Contents

  - Create a Dataset
  - Create a Named Model
  - Create a Triple
    - Let's get to the code already ...
    - Namespaces in RDF
    - ... back to the code
    - Connect the Nodes
  - Triple Store vs Relational Store
  - Saving the Results
    - Serialization Types
      - RDF/XML
      - TTL
      - N-TRIPLE
  - References

  Craig Trim cmtrim@us.ibm.com 9:12 PM 2/22/2013

- 2. Introduction

  What's the point?

  I am having a hard time here. I get triples. I get that I want to work with a collection of triples. But what are the main, important differences between a Model, DataSource, Dataset, Graph, and DataSetGraph? What are their lifecycles? Which are designed to be kept alive for a long time, and which should be transient? What triples get persisted, and when? What stays in memory? And how are triples shared (if at all) across these five things? I'll take an RTFM answer, if there's a simple summary that contrasts these five. Most references I have found are down in the weeds and I need the forest view [1].

  Create a Dataset

  How do I create a dataset?

      Dataset ds = TDBFactory.createDataset("/demo/model-01/");

  A Dataset contains a default model. A Dataset can likewise contain zero or more named models. A SPARQL query over the Dataset can run:

  1. over the default model
  2. over the union of all models
  3. over multiple named models
  4. over a single named model

  A Jena Dataset wraps and extends the functionality of a DatasetGraph. The underlying DatasetGraph [2] can be obtained from a Dataset at any time, though this is not likely to be necessary in a typical development scenario.

  [1] http://answers.semanticweb.com/questions/3186/model-vs-datasource-vs-dataset-vs-graph-vs-datasetgraph

  [2] Via this call: `<Dataset>.asDatasetGraph() : DatasetGraph`. Read the "Jena Architectural Overview" for the distinction between the Jena SPI (Graph Layer) and the Jena API (Model Layer).
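
The relationship between a dataset, its default model and its named models can be pictured with a toy stand-in written in plain Java. This is only a sketch of the idea, not Jena's implementation: `ToyDataset`, `over` and `unionOfNamedModels` are hypothetical names, triples are simplified to lists of three strings, and leaving the default model out of the union is an assumption made for this sketch.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Toy stand-in for a dataset: one default model plus zero or more named models. */
class ToyDataset {
    // A "triple" here is just a list of three strings: subject, predicate, object.
    private final Set<List<String>> defaultModel = new LinkedHashSet<>();
    private final Map<String, Set<List<String>>> namedModels = new LinkedHashMap<>();

    /** The default model always exists, even if nothing is ever written to it. */
    Set<List<String>> getDefaultModel() {
        return defaultModel;
    }

    /** Returns the named model, creating it on first use. */
    Set<List<String>> getNamedModel(String name) {
        return namedModels.computeIfAbsent(name, n -> new LinkedHashSet<>());
    }

    /** Query scope over one or several named models; unknown names contribute nothing. */
    Set<List<String>> over(List<String> names) {
        Set<List<String>> result = new LinkedHashSet<>();
        for (String name : names) {
            result.addAll(namedModels.getOrDefault(name, Set.of()));
        }
        return result;
    }

    /** Query scope over the union of the named models (assumption: default model excluded). */
    Set<List<String>> unionOfNamedModels() {
        Set<List<String>> result = new LinkedHashSet<>();
        namedModels.values().forEach(result::addAll);
        return result;
    }

    public static void main(String[] args) {
        ToyDataset ds = new ToyDataset();
        ds.getNamedModel("M1").add(List.of("ex:Shakespeare", "ex:wrote", "ex:Hamlet"));
        ds.getNamedModel("M2").add(List.of("ex:Hamlet", "ex:type", "ex:Play"));
        System.out.println(ds.over(List.of("M1")));
        System.out.println(ds.unionOfNamedModels());
    }
}
```

Each query scope in the list above is then just a different choice of which sets of triples to look at.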

- 3. Create a Named Model

  We're going to be loading data from the IBM PTI Catalog [3], so let's create a named model for this data:

      Dataset ds = TDBFactory.createDataset("/demo/model-01/");
      Model model = ds.getNamedModel("pti/software");

  Triples always have to be loaded into a Model. We could choose to use the "default model" through this call:

      ds.getDefaultModel() : Model

  But it's good practice to use a named model. A named model functions as a "logical partition" for data. It's a way of logically containing data. One advantage to this approach is query efficiency. If there are multiple models, any given model will represent a subset of all available triples. The smaller the model, the faster the query.

  Named models can also be merged in a very simple operation:

      model.add(<Model>) : Model

  You can merge as many models as you want into a single model for querying purposes. Think of models like building blocks. A dataset might contain four models:

  [Diagram: a dataset containing four models, labeled D, 1, 2 and 3]

  "D" represents the "Default Model". If you prefer not to work with named models, then you would simply make this call:

      Model defaultModel = ds.getDefaultModel();

  The default model always exists in the Dataset [4]. It will exist even if it is not used. I recommend ignoring the default model and focusing on creating named models.

  [3] http://pokgsa.ibm.com/projects/p/pti/consumer/public/test/sit/

  [4] The graph name is actually an RDF Node. A named graph is like a logical partition to the dataset. Some triples belong to one named graph; other triples belong to another named graph, etc. Behind the scenes, Jena is creating a "Quad". Recall that a triple is: Subject, Predicate, Object. A quad is: Subject, Predicate, Object, Context.

  A quad functions just like a triple, with the addition of a context node. In this case, every triple we create in this tutorial (just one in this example) will have an associated "4th node": the node that represents the named graph. Note that when you write a triple to the default model, you are creating a triple. There is no "fourth node" in this case. Quads only apply where named models are present.

  Consider the triple "Shakespeare authorOf Hamlet". If we request Jena to add this triple to the named model called "plays", a Quad will be created that looks like this:

      Shakespeare authorOf Hamlet +plays

  If we request Jena to add this to the default model, it will look like this:

      Shakespeare authorOf Hamlet

  Each quad is stored in the DatasetGraph. Rather than using a more complex containment strategy, this is simply a method of "indexing" each triple with a fourth node that provides context. Note that this is an implementation of the W3C RDF standard, and not a Jena-specific extension. This does not affect how you have to think about the "triple store", nor does it affect how you write SPARQL queries. The SPARQL pattern `?s ?p ?o` will work the same way against a named model (quads) as it will against a default model (triples).

- 4. Note that a named model will not exist until you create it:

      Model model1 = ds.getNamedModel("M1");
      Model model2 = ds.getNamedModel("M2");
      Model model3 = ds.getNamedModel("M3");
      // etc ...

  When you call getNamedModel, the model will be located and returned. If the model does not exist, it will be created and returned.

  A query could be executed against the entire dataset:

      Model union = ds.getNamedModel("urn:x-arq:UnionGraph");

  [Diagram: the union model spanning models D, 1, 2 and 3]

  This method call is computationally "free". Jena simply provides a model that contains all the triples across the entire dataset.

  A query could be executed against certain models in the dataset:

- 5. [Diagram: a dataset with models D, 1, 2 and 3, next to a merged model built from a subset of them]

      // add() copies the triples of M2 into M1 and returns M1 itself.
      Model merged = ds.getNamedModel("M1").add(ds.getNamedModel("M2"));

  Such a model can be persisted to the file system if necessary. Because add() modifies the model it is called on, merge into a separate model if M1 and M2 must stay unchanged.

  Let's return to our original code. Changes to the dataset (such as writing or deleting triples) are surrounded with a try/finally pattern:

      Dataset ds = TDBFactory.createDataset("/demo/model-01/");
      Model model = ds.getNamedModel("pti/software");

      // Take the write lock before entering the try block, so that the
      // finally block only releases a lock that was actually acquired.
      model.enterCriticalSection(Lock.WRITE);
      try {
          // write triples to model
          model.commit();
          TDB.sync(model);
      } finally {
          model.leaveCriticalSection();
      }

  This pattern should be used whenever data is inserted into or updated in a Dataset or Model. There is a performance hit to this pattern, so don't use it at a granular level, for each and every update or insertion. Try to batch up inserts within a single try/finally block.

  If this try/finally pattern is not used, the data will still be written to file. However, the model will be left in an inconsistent state, and iterating over it while querying could provoke a "NoSuchElementException".
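
The advice to batch inserts inside one critical section can be illustrated without Jena. Jena's critical sections behave as a multiple-reader/single-writer lock, so the sketch below uses `ReentrantReadWriteLock` from the Java standard library as a stand-in. `BatchedWrites` and its methods are hypothetical names, and the "triples" are plain strings; the point is only that a batch of any size costs one lock acquisition.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

/** Stand-in for a model guarded by a multiple-reader/single-writer lock. */
class BatchedWrites {
    private final ReadWriteLock lock = new ReentrantReadWriteLock();
    private final List<String> triples = new ArrayList<>();
    private int writeLockAcquisitions = 0;

    /** Writes the whole batch under a single write lock. */
    void addAll(List<String> batch) {
        lock.writeLock().lock();          // acquire before try, release in finally
        try {
            writeLockAcquisitions++;
            triples.addAll(batch);
        } finally {
            lock.writeLock().unlock();
        }
    }

    /** Readers share the read lock and never see a half-written batch. */
    int size() {
        lock.readLock().lock();
        try {
            return triples.size();
        } finally {
            lock.readLock().unlock();
        }
    }

    /** How many times the write lock has been taken so far. */
    int writeLockAcquisitions() {
        lock.readLock().lock();
        try {
            return writeLockAcquisitions;
        } finally {
            lock.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        BatchedWrites model = new BatchedWrites();
        model.addAll(List.of("triple-1", "triple-2", "triple-3"));
        System.out.println(model.size() + " triples, "
                + model.writeLockAcquisitions() + " write lock acquisition(s)");
    }
}
```

Calling `addAll` once per triple would work too, but it would pay the locking cost for every insert, which is the granular usage the text warns against.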

- 6. Create a Triple

  [Diagram: a Subject (Resource) connected to an Object (RDFNode) by a Predicate (Property)]

  When using the model layer in Jena, a triple is composed of:

  - an instance of Resource: the Subject
  - an instance of Property: the Predicate
  - an instance of RDFNode: the Object

  [Illustration 1: Class Hierarchy]

  The object of a triple can be any of the three types above:

  1. Resource (extends RDFNode)
  2. Property (extends Resource extends RDFNode)
  3. Literal [5] (extends RDFNode)

  [5] For example: a String value, such as a person's name; a Long value, such as a creation timestamp; an Integer value, such as a sequence.

- 7. The subject of a triple is limited to either of:

  1. Resource (extends RDFNode)
  2. Property (extends Resource) [6], etc.

  [6] It is possible to make assertions about properties in the form of a triple. For example, if we create a predicate called "partOf" we might want to make this a transitive property. We would do so by creating the triple:

      partOf rdf:type owl:TransitiveProperty

  On the other hand, such an assertion could be dangerous:

      finger partOf hand partOf body partOf Craig partOf IBM

  which might lead one to believe:

      finger partOf IBM (perhaps this is true)
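
To see why declaring "partOf" transitive has consequences, here is a small plain-Java sketch that follows partOf links the way a transitive interpretation would. It is not how Jena or an OWL reasoner is implemented; `PartOfClosure`, `assertPartOf` and `wholesOf` are hypothetical names used only for this illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

/** Follows partOf edges transitively, as a transitive property would imply. */
class PartOfClosure {
    // Maps each part to the wholes it was directly asserted to be part of.
    private final Map<String, Set<String>> partOf = new HashMap<>();

    void assertPartOf(String part, String whole) {
        partOf.computeIfAbsent(part, k -> new LinkedHashSet<>()).add(whole);
    }

    /** Everything reachable from start by following one or more partOf edges. */
    Set<String> wholesOf(String start) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<String> todo = new ArrayDeque<>(partOf.getOrDefault(start, Set.of()));
        while (!todo.isEmpty()) {
            String next = todo.pop();
            if (seen.add(next)) {   // skip nodes already visited, so cycles terminate
                todo.addAll(partOf.getOrDefault(next, Set.of()));
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        PartOfClosure closure = new PartOfClosure();
        closure.assertPartOf("finger", "hand");
        closure.assertPartOf("hand", "body");
        closure.assertPartOf("body", "Craig");
        closure.assertPartOf("Craig", "IBM");
        System.out.println(closure.wholesOf("finger"));
    }
}
```

Only four partOf facts are asserted, yet the transitive reading also yields "finger partOf IBM", which is exactly the inference the footnote cautions about.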

- 8. Let's get to the code already ...

  The following code will create three disconnected "nodes" in Jena.

      String ns = "http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#";

      Resource subject = model.createResource(ns.concat("Shakespeare"));
      Property predicate = model.createProperty(ns.concat("wrote"));
      Resource object = model.createResource(ns.concat("Hamlet"));

  Namespaces in RDF

  Note the use of ns.concat("value"). Every resource in the model should be qualified by a namespace. This is pretty standard when dealing with data – not just RDF. The reasons we might have for qualifying a resource with a namespace in a triple store are the same reasons we might have for qualifying a resource with a namespace in XML.

  The use of a qualified name helps ensure a unique name. You might have to merge your triple store with a triple store that you found online, or from another company. Two resources may have the same name, and may even (conceptually) have similar meanings, but they will not necessarily be used the same way. One developer might assert that the use of "partOf" is transitive. Another developer might assert that the use of "partOf" is not transitive. Both properties mean the same thing, but clearly you would want to have these properties qualified with namespaces, so that the correct property could be used for each situation.

  For example, let us assume that

      ns1:partOf rdf:type owl:TransitiveProperty

  and that ns2:partOf is not transitive. We could then correctly model this scenario:

      finger ns1:partOf hand ns1:partOf body ns1:partOf Craig ns2:partOf IBM

- 9. Craig is "part of" IBM and finger is "part of" Craig, but finger is not "part of" IBM.

  ... back to the code

  So now we've created 3 RDFNodes in our Jena named model.

  [Illustration 2: Three Disconnected RDFNodes in a Named Model. The named model pti/software contains the nodes Shakespeare, wrote and Hamlet, with no links between them.]

  If you're thinking something doesn't look right here, you're right. These nodes are disconnected. We haven't actually created a triple yet. We've just created two Resources and a Property [7].

  Connect the Nodes

  In order to actually connect these values as a triple, we need to call this code:

      connect(subject, predicate, object);

      ...

      private Statement connect(
              Resource subject,
              Property predicate,
              Resource object) {
          // Add the triple to the model, then return it as a Statement.
          model.add(subject, predicate, object);
          return model.createStatement(subject, predicate, object);
      }

  [7] You might not find yourself in a situation where you are creating properties at runtime. A triple store could be initialized with an Ontology model, which would itself explicitly define the predicates and their usage. The triple store would then reference these pre-existing properties. However, there are valid situations where properties could be created automatically. One example is text analytics on a large corpus that finds verbs (actions) connecting entities; the verbs could be modeled as predicates, and the results queried once complete.

- 10. Of course, you don't actually have to use my code above. But it is a lot easier to put a method around these two Jena methods (add and createStatement). And of course, all of this occurs within the context of the try/finally block discussed earlier.

  And then we get this:

  [Illustration 3: A "Triple". In the named model pti/software, Shakespeare is connected to Hamlet by wrote.]

  It's perfectly valid to write resources to a model without connecting them to other resources. The connections may occur over time.

- 11. Triple Store vs Relational Store

  Relationships in a triple store can and should surprise you.

  You'll never design an Entity Relationship Diagram (ERD) and use a Relational Database (RDBMS) – and wake up one morning to find that there is a new relationship between table a and table b. This just doesn't happen. Primary keys, foreign keys, alternate keys – these are all the result of foresight and careful design of a well understood domain. The better the domain is understood, the better the relational database will be designed. If the structure of a relational database changes, this can have a severe impact on the consumers of the data store.

  But a triple store is designed for change. If the domain is so large, and so dynamic, that it can never be fully understood, or fully embraced – then an ERD may not be the right choice. An Ontology and Triple Store may be better suited. As more data is added, relationships will begin to occur between nodes, and queries that execute against the triple store will return results where the relationships between entities in the result set may not have been anticipated.

- 12. Saving the Results

  Triples are held in a dataset which is either transient (in memory) or persisted (on disk). In the example we've just completed, the created triple was stored in Jena TDB. The first call we looked at:

      Dataset ds = TDBFactory.createDataset("/demo/model-01/");

  actually creates a triple store on disk, at the location specified. If a triple store already existed at that location, this factory method would simply return the dataset for that triple store. Database setup doesn't get any easier than this [8]. And TDB is a serious triple store – suitable for enterprise applications that require scalability [9] and performance.

  But what if we want to see what is actually in the triple store? Actually look at the data? We need the equivalent of a database dump. Fortunately, the Jena API makes it quite trivial to serialize model contents to file:

      model.write(
          new BufferedWriter(
              new OutputStreamWriter(
                  new FileOutputStream(file, false))),
          "RDF/XML");

  Notice the use of the string literal "RDF/XML" as the second parameter of the write() method. There are multiple serialization types for RDF.

  Serialization Types

  Some of the more common ones are:

  1. RDF/XML
  2. RDF/XML-ABBREV
  3. TTL (Turtle)
  4. N-TRIPLE
  5. N3

  [8] The setup for RDF support in DB2 is actually pretty simple. [REFERENCE SETUP PAGE]. And DB2-RDF outperforms TDB in many respects [SHOW LINK].

  [9] TDB supports ~1.7 billion triples.

- 13. TTL and N3 are among the easiest to read. RDF/XML is one of the original formats. If you cut your teeth on RDF by reading the RDF/XML format (still very common for online examples and tutorials) you may prefer that. But if you are new to this technology, you'll likely find TTL the most readable of all these formats.

  If we execute the above code on the triple we created, we'll end up with these serializations:

  RDF/XML

      <rdf:RDF
          xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
          xmlns:j.0="http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#" >
        <rdf:Description rdf:about="http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#Shakespeare">
          <j.0:wrote rdf:resource="http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#Hamlet"/>
        </rdf:Description>
      </rdf:RDF>

  TTL

      <http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#Shakespeare>
          <http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#wrote>
          <http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#Hamlet> .

  N-TRIPLE

      <http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#Shakespeare> <http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#wrote> <http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#Hamlet> .

  Note that RDF/XML-ABBREV will show nesting (similar to an XML document). Since we only have a single triple in this demo, there is no nesting to show for that serialization.
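
The N-TRIPLE form is the simplest of these to produce by hand: one triple per line, each IRI wrapped in angle brackets, and a trailing " .". The sketch below shows that rule in plain Java for the Shakespeare triple built earlier. It is not Jena's writer: `NTriplesLine.format` is a hypothetical helper that handles only IRI subjects, predicates and objects, with no literals, blank nodes or escaping.

```java
/** Formats a single triple of IRIs as one N-Triples line (no literals, no escaping). */
class NTriplesLine {
    static String format(String subjectIri, String predicateIri, String objectIri) {
        return "<" + subjectIri + "> <" + predicateIri + "> <" + objectIri + "> .";
    }

    public static void main(String[] args) {
        // Same namespace and local names as the tutorial's createResource/createProperty calls.
        String ns = "http://www.ibm.com/ontologies/2012/1/JenaDemo.owl#";
        System.out.println(format(ns + "Shakespeare", ns + "wrote", ns + "Hamlet"));
    }
}
```

For anything beyond a quick illustration, the `model.write()` call is the safer route, since it handles literals, blank nodes and character escaping.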

- 14. References

  1. SPARQL Query Language for RDF. W3C Working Draft 21 July 2005. 22 February 2013 <http://www.w3.org/TR/2005/WD-rdf-sparql-query-20050721/#rdfDataset>.
  2. Jena Users Group:
  3. Jena/ARQ: Difference between Model, Graph and DataSets. August 8th, 2011.
  4. Dokuklik, Yaghob, et al. Semantic Infrastructures. 2009. Charles University in Prague. Czech Republic.