CUDA materials

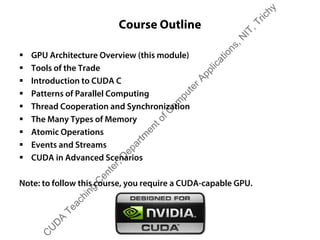

- 1. Course Outline
  - GPU Architecture Overview (this module)
  - Tools of the Trade
  - Introduction to CUDA C
  - Patterns of Parallel Computing
  - Thread Cooperation and Synchronization
  - The Many Types of Memory
  - Atomic Operations
  - Events and Streams
  - CUDA in Advanced Scenarios

  Note: to follow this course, you require a CUDA-capable GPU.

  CUDA Teaching Center, Department of Computer Applications, NIT, Trichy

- 2. History of GPU Computation
  - No GPU: CPU handled graphics output
  - Dedicated GPU: separate graphics card (PCI, AGP)
  - Programmable GPU: shaders

- 3. Shaders
  - Small program that runs on the GPU
  - Types
    - Vertex (used to calculate location of a vertex)
    - Pixel (used to calculate the color components of a single pixel)
  - Shader languages
    - High Level Shader Language (HLSL, Microsoft DirectX)
    - OpenGL Shading Language (GLSL, OpenGL)
    - Both are C-like
    - Both are intended for graphics (i.e., not general-purpose)
  - Pixel shaders used for math
    - Convert data to texture
    - Run texture through pixel shader
    - Get result texture and convert to data

- 4. Why GPGPU?
  - General-Purpose Computation on GPUs
  - Highly parallel architecture
    - Lots of concurrent threads of execution (SIMT)
    - Higher throughput compared to CPUs
    - Even taking into account many cores, hyperthreading, SIMD
    - Thus more FLOPS (floating-point operations per second)
  - Commodity hardware
    - Commonly available (mainly used by gamers)
    - Relatively cheap compared to custom solutions (e.g., FPGAs)
  - Sensible programming model
    - Manufacturers realized GPGPU market potential
    - Graphics made optional
    - NVIDIA offers dedicated GPU platform “Tesla”
    - No output connections

- 5. GPGPU Frameworks
  - Compute Unified Device Architecture (CUDA)
    - Developed by NVIDIA Corporation
    - Extensions to programming languages (C/C++)
    - Wrappers for other languages/platforms (e.g., FORTRAN, PyCUDA, MATLAB)
  - Open Computing Language (OpenCL)
    - Supported by many manufacturers (inc. NVIDIA)
    - The high-level way to perform computation on ATI devices
  - C++ Accelerated Massive Parallelism (AMP)
    - C++ superset
    - A standard by Microsoft, part of MSVC++
    - Supports both ATI and NVIDIA
  - Other frameworks and cross-compilers
    - Alea, Aparapi, Brook, etc.

- 6. Graphics Processor Architecture
  - Warning: NVIDIA terminology ahead
  - Streaming Multiprocessor (SM)
    - Contains several CUDA cores
    - Can have >1 SM on card
  - CUDA Core (a.k.a. Streaming Processor, Shader Unit)
    - # of cores per SM tied to compute capability
  - Different types of memory
    - Means of access
    - Performance characteristics

- 7. Graphics Processor Architecture
  - [Diagram: streaming multiprocessors SM1 and SM2, streaming processors SP1 to SP4, registers, shared memory, device memory, constant memory, texture memory]

- 8. Compute Capability
  - A number indicating what the card can do
    - Current range: 1.0, 1.x, 2.x, 3.0, 3.5
  - Affects both hardware and API support
    - Number of CUDA cores per SM
    - Max # of 32-bit registers per SM
    - Max # of instructions per kernel
    - Support for double-precision ops
    - … and many other parameters
  - Higher is better
    - See http://en.wikipedia.org/wiki/CUDA
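
The compute capability of an installed card can be read at run time. The sketch below is only an illustration: the helper name `computeCapability` and its `major * 10 + minor` encoding are assumptions made here, while `cudaGetDeviceProperties()` and the `cudaDeviceProp` fields are part of the CUDA runtime API.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: returns the compute capability of device `dev`
// encoded as major * 10 + minor (e.g. 35 for 3.5), or -1 on failure.
int computeCapability(int dev) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, dev) != cudaSuccess) return -1;
    printf("%s: compute capability %d.%d, %d SMs, warp size %d\n",
           prop.name, prop.major, prop.minor,
           prop.multiProcessorCount, prop.warpSize);
    return prop.major * 10 + prop.minor;
}
```

Calling `computeCapability(0)` queries the first GPU in the system.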

- 9. Choosing a Graphics Card
  - Look at performance specs (peak flops)
    - Pay attention to single vs. double
    - E.g. http://www.nvidia.com/object/tesla-servers.html
  - Number of GPUs
  - Compute capability/architecture
    - COTS cheaper than dedicated
  - Memory size
  - Ensure PSU/motherboard is good enough to handle the card(s)
  - Can have >1 graphics card
    - YMMV (PCI saturation)
  - Can mix architectures (e.g. NVIDIA+ATI)

- 10. Overview
  - Windows, Mac OS, Linux
    - This course uses Windows/Visual Studio
    - This course focuses on desktop GPU development
  - CUDA Toolkit
    - LLVM-based compiler
    - Headers & libraries
    - Documentation
    - Samples
  - Nsight
    - Visual Studio: plugin that allows debugging
    - Eclipse IDE
  - http://developer.nvidia.com/cuda

- 12. What is Nsight?
  - Many developers, few GPUs
    - No need for each dev to have GPU
  - Client-server architecture
  - Server with GPU (target)
    - Runs Nsight service
    - Accepts connections from developers
  - Clients with Visual Studio + Nsight plugin (host)
    - Use Nsight to connect to the server to run application and debug GPU code
  - Caveats
    - Need to use VS Remote Debugging to debug CPU code
    - Need your own mechanism for syncing output
  - [Diagram: Visual Studio w/Nsight (host) connects to the Nsight service on the target, which drives the target GPU(s)]

- 13. Overview
  - Compilation Process
  - Obligatory “Hello CUDA” demo
  - Location Qualifiers
  - Execution Model
  - Grid and Block Dimensions
  - Error Handling
  - Device Introspection

- 14. NVIDIA CUDA Compiler (nvcc)
  - nvcc is used to compile CUDA-specific code
    - Strictly a compiler driver rather than a complete compiler
    - Uses host C or C++ compiler (MSVC, GCC)
    - Some aspects are C++ specific
  - Splits code into GPU and non-GPU parts
    - Host code passed to native compiler
  - Accepts project-defined GPU-specific settings
    - E.g., compute capability
  - Translates code written in CUDA C into PTX
    - Graphics driver turns PTX into binary code

- 15. NVCC Compilation Process
  - Source file contains both __global__ void a() { } and void b() { }
  - nvcc splits the file: the kernel a() is compiled by nvcc, the host function b() by the host compiler (e.g. MSVC’s cl.exe)
  - Both parts end up in one executable

- 16. Parallel Thread Execution (PTX)
  - PTX is the ‘assembly language’ of CUDA
    - Similar to .NET IL or Java bytecode
    - Low-level GPU instructions
  - Can be generated from a project
  - Typically useful to compiler writers
    - E.g., GPU Ocelot https://code.google.com/p/gpuocelot/
  - Inline PTX (asm)

- 17. Location Qualifiers
  - __global__: defines a kernel. Runs on the GPU, called from the CPU. Executed with <<<dim3>>> arguments.
  - __device__: runs on the GPU, called from the GPU.
    - Can be used for variables too
  - __host__: runs on the CPU, called from the CPU.
  - Qualifiers can be mixed
    - E.g. __host__ __device__ foo()
    - Code compiled for both CPU and GPU
    - Useful for testing
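
A small sketch of the three qualifiers working together. The function names (`square`, `plusOne`, `transform`, `transformOnGpu`) are made up for illustration, and the wrapper assumes `n` fits in a single block (at most 1024 threads on current hardware).

```cuda
#include <cuda_runtime.h>

// Compiled for both CPU and GPU, so it can be tested on the host.
__host__ __device__ float square(float v) { return v * v; }

// GPU-only helper: callable from kernels and other device functions.
__device__ float plusOne(float v) { return v + 1.0f; }

// Kernel: runs on the GPU, launched from the CPU.
__global__ void transform(float* data) {
    int i = threadIdx.x;
    data[i] = plusOne(square(data[i]));
}

// Hypothetical host wrapper: applies the kernel to n values in place.
bool transformOnGpu(float* host, int n) {
    float* dev = nullptr;
    size_t bytes = n * sizeof(float);
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return false;
    cudaMemcpy(dev, host, bytes, cudaMemcpyHostToDevice);
    transform<<<1, n>>>(dev);  // 1 block of n threads
    cudaError_t err = cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```

Because `square` is also compiled for the host, the GPU result can be compared against `square(x) + 1.0f` computed on the CPU.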

- 19. Execution Model
  - Thread blocks are scheduled to run on available SMs
  - Each block runs entirely on one SM; an SM can hold several blocks at once if its resources allow
  - Thread block is divided into warps
    - 32 threads per warp on NVIDIA GPUs to date (available inside kernels as warpSize)
  - The SM's warp schedulers interleave the warps that are resident on it
  - CUDA Warp Watch

- 20. Dimensions
  - We defined execution as
    - <<<a,b>>>
    - A grid of a blocks of b threads each
    - The grid and each block are 1D structures
  - In reality, these constructs are 3D
    - A 3-dimensional grid of 3-dimensional blocks
    - You can define (a×b×c) blocks of (x×y×z) threads
    - Can have 2D or 1D by setting extra dimensions to 1
  - Defined in dim3 structure
    - Simple container with x, y and z values.
    - Some constructors defined for C++
    - Automatic conversion for <<<a,b>>> → (a,1,1) by (b,1,1)
  - [Diagram: a grid contains blocks; a block contains threads]
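
As an illustration of a 2D launch with `dim3`, the following sketch has each thread record its own (row, column) position. The names `fillCoords` and `fillGrid` and the 4×4 block size are assumptions chosen for the example.

```cuda
#include <cuda_runtime.h>

// Each thread writes row * 100 + col at its own position in a 2D array.
__global__ void fillCoords(int* out, int width, int height) {
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    if (col < width && row < height) out[row * width + col] = row * 100 + col;
}

// Hypothetical host wrapper: fills a width x height array on the GPU.
bool fillGrid(int* host, int width, int height) {
    dim3 block(4, 4);  // 4 x 4 x 1 threads per block (z defaults to 1)
    dim3 grid((width + block.x - 1) / block.x,
              (height + block.y - 1) / block.y);  // enough blocks to cover the array
    size_t bytes = (size_t)width * height * sizeof(int);
    int* dev = nullptr;
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return false;
    fillCoords<<<grid, block>>>(dev, width, height);
    cudaError_t err = cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```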

- 21. Thread Variables
  - Execution parameters & current position
  - blockIdx
    - Where we are in the grid
  - gridDim
    - The size of the grid
  - threadIdx
    - Position of current thread in thread block
  - blockDim
    - Size of thread block
  - Limitations
    - Grid & block sizes
    - # of threads

- 22. Error Handling
  - CUDA does not throw
    - Silent failure
  - Core functions return cudaError_t
    - Can check against cudaSuccess
    - Get description with cudaGetErrorString()
  - Libraries may have different error types
    - E.g. cuRAND has curandStatus_t
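
One common way to avoid silent failures is to route every `cudaError_t` through a small checking helper. The sketch below is an assumption about how that might look, not a prescribed pattern: `check` and `launchChecked` are hypothetical names, while `cudaGetLastError()`, `cudaDeviceSynchronize()` and `cudaGetErrorString()` are runtime API calls.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: reports a failed call and returns false.
bool check(cudaError_t err, const char* what) {
    if (err == cudaSuccess) return true;
    fprintf(stderr, "%s failed: %s\n", what, cudaGetErrorString(err));
    return false;
}

__global__ void noop() {}

// Kernel launches return nothing, so launch errors are picked up with
// cudaGetLastError() and execution errors with cudaDeviceSynchronize().
bool launchChecked(int threadsPerBlock) {
    noop<<<1, threadsPerBlock>>>();
    if (!check(cudaGetLastError(), "kernel launch")) return false;
    return check(cudaDeviceSynchronize(), "kernel execution");
}
```

A launch with a valid block size succeeds, whereas asking for far more threads per block than the hardware supports is reported as a launch failure instead of being silently ignored.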

- 23. Rules of the Game
  - Different types of memory
    - Shared vs. private
    - Access speeds
  - Data is in arrays
    - No parallel data structures
    - No other data structures
    - No auto-parallelization/vectorization compiler support
    - No CPU-type SIMD equivalent
  - Compiler constraint
    - No C++11 support

- 24. Overview
  - Data Access
  - Map
  - Gather
  - Reduce
  - Scan

- 25. Data Access
  - A real problem!
  - Thread space can be up to 6D
    - 3D grid of 3D thread blocks
  - Input space typically 1D
    - 2D arrays are possible
  - Need to map threads to inputs
  - Some examples
    - 1 block, N threads → threadIdx.x
    - 1 block, MxN threads → threadIdx.y * blockDim.x + threadIdx.x
    - N blocks, M threads → blockIdx.x * blockDim.x + threadIdx.x
    - … and so on
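
The "N blocks, M threads" mapping can be checked by having each thread store its own global index. This is a minimal sketch; `writeIndex`, `fillWithIndices` and the block size of 128 are assumptions made for the example.

```cuda
#include <cuda_runtime.h>

// Each thread stores its global index at the matching array position.
__global__ void writeIndex(int* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = i;  // guard: the last block may be only partly used
}

// Hypothetical host wrapper: fills host[0..n) with 0..n-1 via the GPU.
bool fillWithIndices(int* host, int n) {
    const int threads = 128;                         // M (assumed block size)
    const int blocks = (n + threads - 1) / threads;  // N, rounded up
    int* dev = nullptr;
    if (cudaMalloc(&dev, n * sizeof(int)) != cudaSuccess) return false;
    writeIndex<<<blocks, threads>>>(dev, n);
    cudaError_t err = cudaMemcpy(host, dev, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```

Rounding the block count up and guarding with `i < n` lets the same kernel handle sizes that are not a multiple of the block size.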

- 30. Reduce in Practice
  - Adding up N data elements
  - Use 1 block of N/2 threads
  - Each thread does x[i] += x[j];
  - At each step
    - # of threads halved
    - Distance (j-i) doubled
  - x[0] is the result
  - Example (partial sums per step): 1 2 3 4 5 6 7 8 → 3 7 11 15 → 10 26 → 36
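
The steps above can be sketched as a single-block kernel. This is one possible reading of the slide, not necessarily the course's own code: `reduceSum` and `sumOnGpu` are hypothetical names, and the wrapper assumes `n` is even and small enough for `n / 2` threads to fit in one block.

```cuda
#include <cuda_runtime.h>

// In-place sum of x[0..n) using one block of n/2 threads.
__global__ void reduceSum(int* x, int n) {
    int t = threadIdx.x;
    for (int dist = 1; dist < n; dist *= 2) {  // distance (j - i) doubles each step
        int i = 2 * dist * t;                  // fewer threads find valid work each step
        int j = i + dist;
        if (j < n) x[i] += x[j];
        __syncthreads();                       // reached by every thread in the block
    }
}

// Hypothetical host wrapper: returns the sum of host[0..n), or -1 on failure.
int sumOnGpu(const int* host, int n) {
    int* dev = nullptr;
    if (cudaMalloc(&dev, n * sizeof(int)) != cudaSuccess) return -1;
    cudaMemcpy(dev, host, n * sizeof(int), cudaMemcpyHostToDevice);
    reduceSum<<<1, n / 2>>>(dev, n);
    int result = -1;
    cudaMemcpy(&result, dev, sizeof(int), cudaMemcpyDeviceToHost);  // x[0] holds the sum
    cudaFree(dev);
    return result;
}
```

The barrier sits outside the `if`, so threads that have run out of work still reach it on every step.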

- 31. Scan
  - Example (running sum): 4 2 5 3 6 → 4 6 11 14 20

- 32. Scan in Practice
  - Similar to reduce
  - Require N-1 threads
  - Step size keeps doubling
  - Number of threads reduced by step size
  - Each thread n does x[n+step] += x[n];
  - Example: 1 2 3 4 → 1 3 5 7 → 1 3 6 10
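
A minimal single-block sketch of this scheme follows. The names `scanInclusive` and `scanOnGpu` are hypothetical. One detail is added that the slide does not spell out: each thread reads `x[n]` into a register before a barrier, so that no thread overwrites a value another thread still needs in the same step.

```cuda
#include <cuda_runtime.h>

// In-place inclusive scan (running sum) of x[0..n) using one block of n-1 threads.
__global__ void scanInclusive(int* x, int n) {
    int t = threadIdx.x;
    for (int step = 1; step < n; step *= 2) {  // step size keeps doubling
        bool active = t + step < n;            // active threads shrink by the step size
        int v = active ? x[t] : 0;             // read first...
        __syncthreads();
        if (active) x[t + step] += v;          // ...then write
        __syncthreads();
    }
}

// Hypothetical host wrapper: scans host[0..n) in place.
bool scanOnGpu(int* host, int n) {
    int* dev = nullptr;
    if (cudaMalloc(&dev, n * sizeof(int)) != cudaSuccess) return false;
    cudaMemcpy(dev, host, n * sizeof(int), cudaMemcpyHostToDevice);
    if (n > 1) scanInclusive<<<1, n - 1>>>(dev, n);
    cudaError_t err = cudaMemcpy(host, dev, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```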

- 33. Graphics Processor Architecture
  - [Diagram: streaming multiprocessors SM1 and SM2, streaming processors SP1 to SP4, registers, shared memory, device memory, constant cache, texture cache]

- 34. Device Memory
  - Grid scope (i.e., available to all threads in all blocks in the grid)
  - Application lifetime (exists until app exits or explicitly deallocated)
  - Dynamic
    - cudaMalloc() to allocate
    - Pass pointer to kernel
    - cudaMemcpy() to copy to/from host memory
    - cudaFree() to deallocate
  - Static
    - Declare global variable as device: __device__ int sum = 0;
    - Use freely within the kernel
    - Use cudaMemcpy[To/From]Symbol() to copy to/from host memory
    - No need to explicitly deallocate
  - Slowest and most inefficient
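
Both styles can be combined in a few lines. In this sketch the input array lives in dynamically allocated device memory and the result in the static `__device__ int sum` from the slide. The kernel deliberately uses a single thread to keep the example free of races, and `sumSerial` and `sumViaDeviceMemory` are hypothetical names.

```cuda
#include <cuda_runtime.h>

__device__ int sum = 0;  // static device memory, as declared on the slide

// Single-threaded on purpose: the point here is memory handling, not speed.
__global__ void sumSerial(const int* data, int n) {
    int s = 0;
    for (int i = 0; i < n; ++i) s += data[i];
    sum = s;
}

// Hypothetical host wrapper: returns the sum of host[0..n), or -1 on failure.
int sumViaDeviceMemory(const int* host, int n) {
    int* dev = nullptr;                                         // dynamic device memory
    if (cudaMalloc(&dev, n * sizeof(int)) != cudaSuccess) return -1;
    cudaMemcpy(dev, host, n * sizeof(int), cudaMemcpyHostToDevice);
    sumSerial<<<1, 1>>>(dev, n);                                // pass pointer to kernel
    int result = -1;
    cudaMemcpyFromSymbol(&result, sum, sizeof(int));            // read the static variable
    cudaFree(dev);                                              // only the dynamic part is freed
    return result;
}
```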

- 35. Constant & Texture Memory
  - Read-only: useful for lookup tables, model parameters, etc.
  - Grid scope, Application lifetime
  - Resides in device memory, but
  - Cached in a constant memory cache
  - Constrained by MAX_CONSTANT_MEMORY
    - Expect 64 KB
  - Similar operation to statically-defined device memory
    - Declare as __constant__
    - Use freely within the kernel
    - Use cudaMemcpy[To/From]Symbol() to copy to/from host memory
  - Very fast provided all threads read from the same location
  - Used for kernel arguments
  - Texture memory: similar to Constant, optimized for 2D access patterns
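
As a hedged illustration of constant memory holding model parameters, the sketch below stores three polynomial coefficients in a `__constant__` array that every thread reads. The names `coeffs`, `evalPoly` and `evalOnGpu` are assumptions for this example.

```cuda
#include <cuda_runtime.h>

__constant__ float coeffs[3];  // c0 + c1*x + c2*x^2, read-only inside kernels

__global__ void evalPoly(float* x, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        float v = x[i];
        // All threads read the same coefficient at the same time,
        // which is the access pattern the constant cache favours.
        x[i] = coeffs[0] + v * (coeffs[1] + v * coeffs[2]);
    }
}

// Hypothetical host wrapper: evaluates the polynomial on host[0..n) in place.
bool evalOnGpu(const float* hostCoeffs, float* host, int n) {
    if (cudaMemcpyToSymbol(coeffs, hostCoeffs, 3 * sizeof(float)) != cudaSuccess) return false;
    float* dev = nullptr;
    size_t bytes = n * sizeof(float);
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return false;
    cudaMemcpy(dev, host, bytes, cudaMemcpyHostToDevice);
    evalPoly<<<(n + 127) / 128, 128>>>(dev, n);
    cudaError_t err = cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```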

- 36. Shared Memory
  - Block scope
    - Shared only within a thread block
    - Not shared between blocks
  - Kernel lifetime
  - Must be declared within the kernel function body
  - Very fast
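
A short sketch of shared memory as a per-block scratch area: one block stages its data in a `__shared__` array and writes it back reversed. `BLOCK_SIZE`, `reverseBlock` and `reverseOnGpu` are names assumed for the example, and the wrapper handles exactly `BLOCK_SIZE` elements.

```cuda
#include <cuda_runtime.h>

#define BLOCK_SIZE 64  // assumed block size for this example

// Reverses BLOCK_SIZE elements using a tile visible to the whole block.
__global__ void reverseBlock(int* d) {
    __shared__ int tile[BLOCK_SIZE];  // one copy per block, gone when the kernel ends
    int t = threadIdx.x;
    tile[t] = d[t];
    __syncthreads();                  // wait until the whole tile is loaded
    d[t] = tile[BLOCK_SIZE - 1 - t];
}

// Hypothetical host wrapper: reverses host[0..BLOCK_SIZE) in place.
bool reverseOnGpu(int* host) {
    int* dev = nullptr;
    size_t bytes = BLOCK_SIZE * sizeof(int);
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return false;
    cudaMemcpy(dev, host, bytes, cudaMemcpyHostToDevice);
    reverseBlock<<<1, BLOCK_SIZE>>>(dev);
    cudaError_t err = cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```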

- 37. Register & Local Memory
  - Memory can be allocated right within the kernel
    - Thread scope, Kernel lifetime
  - Non-array memory
    - int tid = …
    - Stored in a register
    - Very fast
  - Array memory
    - Typically stored in ‘local memory’
    - Local memory is an abstraction, actually put in global memory
    - Thus, as slow as global memory

- 38. Summary

  | Declaration | Memory | Scope | Lifetime | Slowdown |
  |---|---|---|---|---|
  | int foo; | Register | Thread | Kernel | 1x |
  | int foo[10]; | Local | Thread | Kernel | 100x |
  | __shared__ int foo; | Shared | Block | Kernel | 1x |
  | __device__ int foo; | Global | Grid | Application | 100x |
  | __constant__ int foo; | Constant | Grid | Application | 1x |

- 39. Thread Synchronization
  - Threads can take different amounts of time to complete a part of a computation
  - Sometimes, you want all threads to reach a particular point before continuing their work
  - CUDA provides a thread barrier function __syncthreads()
  - A thread that calls __syncthreads() waits for other threads to reach this line
  - Then, all threads can continue executing
  - [Diagram: threads A, B and C waiting at a barrier]

- 40. Restrictions
  - __syncthreads() only synchronizes threads within a block
  - A thread that calls __syncthreads() waits for other threads to reach this location
  - All threads must reach __syncthreads()
    - if (x > 0.5) { __syncthreads(); } // bad idea
  - Each call to __syncthreads() is unique
    - if (x > 0.5) { __syncthreads(); } else { __syncthreads(); } // also a bad idea
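
A sketch of the usual way around these restrictions: keep the divergent work inside the `if`, but place the barrier outside it so every thread in the block reaches the same call. The names `shiftLeft` and `shiftOnGpu` are hypothetical, and the wrapper assumes `n` fits in one block.

```cuda
#include <cuda_runtime.h>

// Shifts x[0..n) one position to the left; the last element keeps its value.
__global__ void shiftLeft(float* x, int n) {
    int t = threadIdx.x;
    float next = 0.0f;
    if (t + 1 < n) next = x[t + 1];  // conditional work is fine...
    __syncthreads();                 // ...as long as the barrier is unconditional
    if (t + 1 < n) x[t] = next;
}

// Hypothetical host wrapper: runs shiftLeft on host[0..n) in place.
bool shiftOnGpu(float* host, int n) {
    float* dev = nullptr;
    size_t bytes = n * sizeof(float);
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return false;
    cudaMemcpy(dev, host, bytes, cudaMemcpyHostToDevice);
    shiftLeft<<<1, n>>>(dev, n);
    cudaError_t err = cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```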

- 41. Branch Divergence
  - Once a block is assigned to an SM, it is split into several warps
  - All threads within a warp must execute the same instruction at the same time
  - I.e., if and else branches cannot be executed concurrently
  - Avoid branching if possible
  - Ensure if statements cut on warp boundaries
  - [Diagram: a divergent branch causing performance loss]
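
To make "cut on warp boundaries" concrete, the sketch below branches on the warp number rather than on the thread number, so all threads of a warp take the same side. `branchPerWarp` and `runBranchPerWarp` are hypothetical names, and the wrapper assumes a single block.

```cuda
#include <cuda_runtime.h>

// Even-numbered warps write 1, odd-numbered warps write 2.
__global__ void branchPerWarp(int* out) {
    int t = threadIdx.x;
    int warp = t / warpSize;  // warpSize is a built-in device variable
    // Every thread of a given warp evaluates the condition identically,
    // so no warp has to run both branches one after the other.
    if (warp % 2 == 0) out[t] = 1;
    else               out[t] = 2;
    // By contrast, `if (t % 2 == 0)` would split every warp across both branches.
}

// Hypothetical host wrapper: runs one block of `threads` threads.
bool runBranchPerWarp(int* host, int threads) {
    int* dev = nullptr;
    if (cudaMalloc(&dev, threads * sizeof(int)) != cudaSuccess) return false;
    branchPerWarp<<<1, threads>>>(dev);
    cudaError_t err = cudaMemcpy(host, dev, threads * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess;
}
```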

- 42. Summary
  - Threads are synchronized with __syncthreads()
    - Block scope
    - All threads must reach the barrier
    - Each __syncthreads() creates a unique barrier
  - A block is split into warps
    - All threads in a warp execute same instruction
    - Branching leads to warp divergence (performance loss)
    - Avoid branching or ensure branch cases fall in different warps

- 43. Overview
  - Why atomics?
  - Atomic Functions
  - Atomic Sum
  - Monte Carlo Pi

- 44. Why Atomics?
  - x++ is a read-modify-write operation
    - Read x into a register
    - Increment register value
    - Write register back into x
    - Effectively { temp = x; temp = temp+1; x = temp; }
  - If two threads do x++
    - Each thread has its own temp (say t1 and t2)
    - { t1 = x; t1 = t1+1; x = t1; } { t2 = x; t2 = t2+1; x = t2; }
    - Race condition: both threads can read the same old value of x before either writes it back
    - The later write then overwrites the earlier one, so x gets incremented only once

- 45. Atomic Functions
  - Problem: many threads accessing the same memory location
  - Atomic operations ensure that only one thread can access the location at a time
  - Grid scope!
  - atomicOP(x,y)
    - t1 = *x; // read
    - t2 = t1 OP y; // modify
    - *x = t2; // write
  - Atomics need to be configured
    - #include "sm_20_atomic_functions.h"
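
A minimal sketch of an atomic counter: every thread in the grid increments the same integer with `atomicAdd()`, and no increment is lost. The names `countThreads` and `countOnGpu` are assumptions for the example.

```cuda
#include <cuda_runtime.h>

// Every thread adds 1 to the same counter in device memory.
__global__ void countThreads(int* counter) {
    atomicAdd(counter, 1);  // the read-modify-write happens as one indivisible step
}

// Hypothetical host wrapper: returns how many threads ran, or -1 on failure.
int countOnGpu(int blocks, int threads) {
    int* dev = nullptr;
    if (cudaMalloc(&dev, sizeof(int)) != cudaSuccess) return -1;
    cudaMemset(dev, 0, sizeof(int));
    countThreads<<<blocks, threads>>>(dev);
    int result = -1;
    cudaMemcpy(&result, dev, sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return result;
}
```

Replacing `atomicAdd(counter, 1)` with a plain `(*counter)++` would typically produce a total far below `blocks * threads`, which is the race described on the previous slide.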

- 47. Summary
  - Atomic operations ensure operations on a variable cannot be interrupted by a different thread
  - CUDA supports several atomic operations
    - atomicAdd()
    - atomicOr()
    - atomicMin()
    - … and others
  - Atomics incur a heavy performance penalty

- 48. Overview
  - Events
  - Event API
  - Event example
  - Pinned memory
  - Streams
  - Stream API
  - Example (single stream)
  - Example (multiple streams)

- 49. Events
  - How to measure performance?
  - Use OS timers
    - Too much noise
  - Use profiler
    - Times only kernel duration + other invocations
  - CUDA Events
    - Event = timestamp
    - Timestamp recorded on the GPU
    - Invoked from the CPU side

- 50. Event API
  - cudaEvent_t
    - The event handle
  - cudaEventCreate(&e)
    - Creates the event
  - cudaEventRecord(e, 0)
    - Records the event, i.e. timestamp
    - Second param is the stream to which to record
  - cudaEventSynchronize(e)
    - CPU and GPU are async, can be doing things in parallel
    - cudaEventSynchronize() blocks the calling CPU thread until the GPU has reached the event
  - cudaEventElapsedTime(&f, start, stop)
    - Computes elapsed time (msec) between start and stop, stored as float
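
Put together, the event calls above can time a kernel roughly as follows. This is a sketch under assumptions: `scaleAll` and `timeKernelMs` are hypothetical names, and `cudaEventDestroy()` (not listed on the slide) is the runtime call that releases an event.

```cuda
#include <cuda_runtime.h>

__global__ void scaleAll(float* x, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] = x[i] * 2.0f + 1.0f;
}

// Hypothetical helper: returns the kernel time in milliseconds, or -1 on failure.
float timeKernelMs(int n) {
    float* dev = nullptr;
    if (cudaMalloc(&dev, n * sizeof(float)) != cudaSuccess) return -1.0f;
    cudaMemset(dev, 0, n * sizeof(float));

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);

    cudaEventRecord(start, 0);               // timestamp before the kernel (stream 0)
    scaleAll<<<(n + 255) / 256, 256>>>(dev, n);
    cudaEventRecord(stop, 0);                // timestamp after the kernel
    cudaEventSynchronize(stop);              // CPU waits until the GPU has passed `stop`

    float ms = -1.0f;
    cudaEventElapsedTime(&ms, start, stop);

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(dev);
    return ms;
}
```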

- 51. Pinned Memory
  - CPU memory is pageable
    - Can be swapped to disk
  - Pinned (page-locked) stays in place
  - Performance advantage when copying to/from GPU
  - Use cudaHostAlloc() instead of malloc() or new
  - Use cudaFreeHost() to deallocate
  - Cannot be swapped out
    - Must have enough
    - Proactively deallocate

- 52. Streams
  - Remember cudaEventRecord(event, stream)?
  - A CUDA stream is a queue of GPU operations
    - Kernel launch
    - Memory copy
  - Streams allow a form of task-based parallelism
    - Performance improvement
  - To leverage streams you need device overlap support
    - GPU_OVERLAP

- 53. Stream API
  - cudaStream_t
  - cudaStreamCreate(&stream)
  - kernel<<<blocks,threads,shared,stream>>>
  - cudaMemcpyAsync()
    - Must use pinned memory!
    - Takes a stream parameter
  - cudaStreamSynchronize(stream)
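
A single-stream sketch tying pinned memory and the stream calls together: the data is staged in a page-locked buffer, then the copy in, the kernel and the copy out are queued on one stream. The names `doubleAll` and `doubleWithStream` are hypothetical, and `cudaStreamDestroy()` (not listed above) is the runtime call that releases a stream.

```cuda
#include <cstring>
#include <cuda_runtime.h>

__global__ void doubleAll(float* x, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] *= 2.0f;
}

// Hypothetical host wrapper: writes 2 * in[i] to out[i] using one stream.
bool doubleWithStream(const float* in, float* out, int n) {
    size_t bytes = n * sizeof(float);
    float* pinned = nullptr;
    float* dev = nullptr;
    if (cudaHostAlloc((void**)&pinned, bytes, cudaHostAllocDefault) != cudaSuccess) return false;
    if (cudaMalloc(&dev, bytes) != cudaSuccess) { cudaFreeHost(pinned); return false; }

    cudaStream_t stream;
    cudaStreamCreate(&stream);
    memcpy(pinned, in, bytes);  // stage the input in page-locked memory

    // The three operations are queued on the stream and run in order on the GPU.
    cudaMemcpyAsync(dev, pinned, bytes, cudaMemcpyHostToDevice, stream);
    doubleAll<<<(n + 255) / 256, 256, 0, stream>>>(dev, n);
    cudaMemcpyAsync(pinned, dev, bytes, cudaMemcpyDeviceToHost, stream);

    cudaError_t err = cudaStreamSynchronize(stream);  // wait for the queue to drain
    memcpy(out, pinned, bytes);

    cudaStreamDestroy(stream);
    cudaFree(dev);
    cudaFreeHost(pinned);
    return err == cudaSuccess;
}
```

With several streams, each working on its own chunk of the data, copies for one chunk can overlap with kernel execution for another on devices that support overlap.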

- 54. Summary
  - CUDA events let you time your code on the GPU
  - Pinned memory speeds up data transfers to/from device
  - CUDA streams allow you to queue up operations asynchronously
    - Lets you do different things in parallel on the GPU
    - Use of pinned memory is required