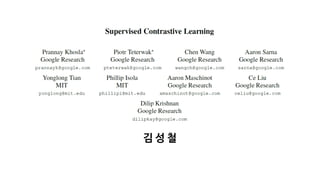

Supervised Contrastive Learning

- 1. 김 성 철

- 2. Contents
  1. Introduction
  2. Related Work
  3. Method
  4. Experiments
  5. Discussion

  https://github.com/rlatjcj/Paper-code-review/tree/master/%5BArxiv%202020%5D%20Supervised%20Contrastive%20Learning

- 3. Introduction
  - Cross-entropy
    - Widely used in supervised learning
    - Can be viewed as the KL divergence between the label distribution and the empirical distribution of the model's outputs
    - Improvements: label smoothing, self-distillation, mixup, …
  - A supervised training loss that pulls samples of the same class together and pushes samples of different classes apart
  - Contrastive objective functions are related to metric learning and perform well in self-supervised learning
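
The KL-divergence view of cross-entropy noted on this slide can be checked numerically. The following is a minimal sketch in plain Python; the function names, the smoothing factor `eps`, and the example probabilities are illustrative assumptions, not values from the slides.

```python
import math

def cross_entropy(target, pred):
    """H(target, pred) = -sum_c target_c * log(pred_c)."""
    return -sum(t * math.log(p) for t, p in zip(target, pred) if t > 0)

def entropy(target):
    """H(target) = -sum_c target_c * log(target_c)."""
    return -sum(t * math.log(t) for t in target if t > 0)

def kl_divergence(target, pred):
    """KL(target || pred) = sum_c target_c * log(target_c / pred_c)."""
    return sum(t * math.log(t / p) for t, p in zip(target, pred) if t > 0)

def smooth_labels(one_hot, eps=0.1):
    """Label smoothing: mix the one-hot target with the uniform distribution."""
    k = len(one_hot)
    return [(1.0 - eps) * t + eps / k for t in one_hot]

one_hot = [0.0, 1.0, 0.0]
pred = [0.2, 0.7, 0.1]  # hypothetical softmax output of a classifier

# A one-hot target has zero entropy, so cross-entropy equals the KL divergence.
print(cross_entropy(one_hot, pred), kl_divergence(one_hot, pred))

# With a smoothed target the two differ by the (constant) target entropy.
smoothed = smooth_labels(one_hot)
print(cross_entropy(smoothed, pred), kl_divergence(smoothed, pred) + entropy(smoothed))
```

Because the entropy of the target does not depend on the model, minimizing cross-entropy and minimizing the KL divergence give the same gradients; label smoothing only changes the reference distribution.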

- 4. Introduction
  - Supervised contrastive learning
    - Performs contrastive learning in the fully supervised setting, using a contrastive loss with multiple positives
    - State of the art in top-1 accuracy and robustness compared with cross-entropy
    - Less sensitive to the range of hyperparameters than cross-entropy
    - Its gradient encourages learning from hard positives and hard negatives
    - Related to the triplet loss when a single positive and a single negative are used

- 5. Related Work
  - Self-supervised representation learning + metric learning + supervised learning
  - Cross-entropy loss is a good loss function for training deep networks
    - However, it is not obvious why the target labels should be the right targets
    - Better target label vectors have been shown to exist (S. Yang et al., 2015)
  - Other drawbacks of cross-entropy loss
    - Sensitivity to noisy labels (Z. Zhang et al., 2018; S. Sukhbaatar et al., 2014)
    - Adversarial examples (G. Elsayed et al., 2018; K. Nar et al., 2019)
    - Poor margins (K. Cao et al., 2019)
  - Alternative losses have been proposed, but changing the reference label distribution has been more effective
    - Label smoothing, Mixup, CutMix, knowledge distillation

- 6. Related Work
  - Self-supervised representation learning has recently attracted a lot of attention
    - Language domain
      - Pre-trained embeddings (BERT, XLNet, …)
    - Image domain
      - Used to learn embeddings (e.g. predicting the masked part of a signal from the unmasked part)
  - Self-supervised representation learning is shifting toward contrastive learning
    - Noise contrastive estimation, N-pair loss
    - During training, the loss is applied at the last layer of the deep network
    - At test time, earlier layers are used for downstream transfer tasks, fine-tuning, and direct retrieval tasks

- 7. Related Work
  - Contrastive learning is related to metric learning and the triplet loss
    - In common: all of them learn powerful representations
  - Difference between the triplet loss and contrastive losses
    - The number of positive and negative pairs per data point
    - Triplet loss: one positive, one negative
    - Supervised metric learning: positives from the same class, negatives from different classes (hard negative mining)
    - Self-supervised contrastive loss: one positive pair, selected using either co-occurrence or data augmentation
  - The loss most similar to supervised contrastive is the soft-nearest neighbor loss
    - In common: the embeddings are normalized and Euclidean distance is replaced with inner products
    - Improvements: data augmentation, a disposable contrastive head, two-stage training

- 8. Method
  - Representation Learning Framework
    - Structurally similar to Contrastive Multiview Coding and SimCLR, which use self-supervised contrastive learning
    - Two randomly augmented images are generated for each input image
      - First stage: random crop + resize to the image's native resolution
      - Second stage: AutoAugment, RandAugment, SimAugment
    - Encoder network $E(\cdot)$: maps an augmented image $x$ to a representation vector $r = E(x) \in \mathbb{R}^{D_E}$
      - E.g. the output of the final pooling layer of ResNet50 (dimension $D_E$) is used as the representation vector, always normalized to the unit hypersphere
    - Projection network $P(\cdot)$: maps the normalized representation vector $r$ to a vector $z = P(r) \in \mathbb{R}^{D_P}$ suited to computing the contrastive loss
      - E.g. a multi-layer perceptron with output vector size $D_P$, always normalized to the unit hypersphere
    - Once training is complete, the projection network is discarded and replaced by a single linear layer
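
The data flow of the framework (encoder output, normalization, projection, normalization) can be sketched in a few lines. This is only a toy illustration under stated assumptions: the encoder output is replaced by a random vector, the projection network is assumed to be a one-hidden-layer MLP with ReLU, and the sizes `D_E`, `D_HIDDEN`, `D_P` and all function names are hypothetical.

```python
import math
import random

def l2_normalize(v):
    """Scale a vector onto the unit hypersphere."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def linear(weights, v):
    """Matrix-vector product; weights is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]

def random_matrix(rows, cols, rng):
    """Random Gaussian weights standing in for trained parameters."""
    scale = 1.0 / math.sqrt(cols)
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def project(r, w_hidden, w_out):
    """P(r): hidden layer with ReLU, output layer, then normalization."""
    hidden = [max(0.0, h) for h in linear(w_hidden, r)]
    return l2_normalize(linear(w_out, hidden))

rng = random.Random(0)
D_E, D_HIDDEN, D_P = 8, 16, 4  # toy sizes, not the ones used in the paper

# Stand-in for the encoder output E(x) on one augmented image.
encoder_output = [rng.gauss(0.0, 1.0) for _ in range(D_E)]
r = l2_normalize(encoder_output)  # representation kept after training

w_hidden = random_matrix(D_HIDDEN, D_E, rng)
w_out = random_matrix(D_P, D_HIDDEN, rng)
z = project(r, w_hidden, w_out)   # used only for the contrastive loss
```

After pre-training, only `r` would be kept: the projection weights are thrown away and a linear classifier is trained on top of the normalized representation.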

- 9. Method
  - Contrastive Losses: Self-supervised and Supervised
    - A contrastive loss that uses labeled data effectively while keeping the benefits of contrastive losses
    - $N$ image/label pairs are sampled at random, $\{x_k, y_k\}_{k=1 \ldots N}$
    - The minibatch actually used for training has $2N$ pairs, $\{x_k, y_k\}_{k=1 \ldots 2N}$
      - $x_{2k}$, $x_{2k-1}$: two random augmentations of $x_k$ ($k = 1 \ldots N$)
      - $y_{2k-1} = y_{2k} = y_k$

  https://towardsdatascience.com/exploring-simclr-a-simple-framework-for-contrastive-learning-of-visual-representations-158c30601e7e
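
The construction of the $2N$-element training minibatch can be sketched as follows. The `augment` function is a hypothetical stand-in (it only adds small Gaussian noise) for the real augmentation pipeline, and the sample values and labels are made up for illustration.

```python
import random

def augment(x, rng):
    """Hypothetical stand-in for crop + AutoAugment etc.: adds small noise."""
    return [v + rng.gauss(0.0, 0.1) for v in x]

def make_multiview_batch(samples, labels, rng):
    """Turn N (sample, label) pairs into 2N augmented views with copied labels.

    With 0-based indexing, positions 2k and 2k+1 hold the two views of
    sample k, matching x_{2k-1} and x_{2k} in the slide's 1-based notation.
    """
    views, view_labels = [], []
    for x, y in zip(samples, labels):
        views.extend([augment(x, rng), augment(x, rng)])
        view_labels.extend([y, y])
    return views, view_labels

rng = random.Random(0)
samples = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]  # N = 3 toy "images"
labels = ["cat", "dog", "cat"]
views, view_labels = make_multiview_batch(samples, labels, rng)
print(len(views), view_labels)
```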

- 10. Method
  - Contrastive Losses: Self-supervised and Supervised
    - Self-Supervised Contrastive Loss
      - $i \in \{1 \ldots 2N\}$: the index of an arbitrary augmented image
      - $j(i)$: the index of the other augmented image originating from the same source image
      - $z_l = P(E(x_l))$
      - $i$: anchor / $j(i)$: positive / $\{k\}_{k=1 \ldots 2N},\ k \notin \{i, j(i)\}$: negatives
      - $z_i \cdot z_{j(i)}$: an inner product between the normalized vectors
      - Similar views are trained to have neighboring representations, dissimilar views non-neighboring representations
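
For reference, the loss that these symbols describe can be written out as below. This is a reconstruction from the definitions on this slide in the usual temperature-scaled softmax form; the scalar temperature $\tau$ is the one listed under Training Details, and treating the per-anchor term as the "Eq. 2" mentioned on the following slide is an assumption.

```latex
\mathcal{L}^{self} = \sum_{i=1}^{2N} \mathcal{L}^{self}_i,
\qquad
\mathcal{L}^{self}_i
  = -\log
    \frac{\exp\left(z_i \cdot z_{j(i)} / \tau\right)}
         {\sum_{k=1}^{2N} \mathbb{1}_{i \neq k}\, \exp\left(z_i \cdot z_k / \tau\right)}
```

The numerator contains the single positive $j(i)$; the denominator runs over the positive and all $2N - 2$ negatives, excluding only the anchor itself.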

- 11. Method
  - Supervised Contrastive Loss
    - In supervised learning more than one sample belongs to the same class, so the contrastive loss of Eq. 2 cannot be used as is → a loss that handles an arbitrary number of positives is proposed
    - $N_{y_i}$: the total number of images in the minibatch that have the same label, $y_i$, as the anchor, $i$
    - Generalization to an arbitrary number of positives
      - The loss makes the encoder represent all samples of the same class close together, giving more robust clustering than Eq. 2
    - Contrastive power increases with more negatives
      - Many negatives, in addition to the positives, are added to the denominator so that negatives are pushed far from the positives
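
A loss with these properties can be sketched in plain Python. The sketch assumes the per-anchor loss averages the log-probability over all $2N_{y_i} - 1$ positives of the anchor and sums over anchors; the function names and the toy embeddings are illustrative, and a practical implementation would be vectorized.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def supcon_loss(z, labels, tau=0.07):
    """Supervised contrastive loss over 2N unit-norm embeddings.

    For anchor i, every other view with the same label is a positive.
    The denominator sums over all views except the anchor itself.
    """
    n = len(z)
    total = 0.0
    for i in range(n):
        logits = [dot(z[i], z[k]) / tau for k in range(n) if k != i]
        # Log-sum-exp with the maximum subtracted for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # cannot happen when each sample contributes two views
        log_probs = [dot(z[i], z[j]) / tau - log_denom for j in positives]
        total += -sum(log_probs) / len(positives)
    return total

# Four views on the unit circle: two clusters.
z = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
clustered = supcon_loss(z, labels=[0, 0, 1, 1])  # labels agree with clusters
mixed = supcon_loss(z, labels=[0, 1, 0, 1])      # labels cut across clusters
print(clustered, mixed)
```

When every label occurs for exactly one source image, each anchor has a single positive and the function reduces to the self-supervised loss of the previous slide.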

- 12. Method
  - Supervised Contrastive Loss Gradient Properties
    - Shows that the supervised contrastive loss focuses learning on hard positives and negatives rather than weak ones
    - A normalization layer is added at the end of the projection network → its gradient mediates the gradients when inner products are used in the loss
    - $w$: the projection network output immediately prior to normalization (i.e. $z = w / \lVert w \rVert$)
    - The gradient splits into a contribution from positives and a contribution from negatives
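
The effect of the normalization layer on the gradient follows from the Jacobian of $z = w / \lVert w \rVert$. The short derivation below is standard vector calculus, not copied from the slides; $g$ denotes an arbitrary upstream gradient with respect to $z_i$.

```latex
z_i = \frac{w_i}{\lVert w_i \rVert}
\;\Longrightarrow\;
\frac{\partial z_i}{\partial w_i}
  = \frac{1}{\lVert w_i \rVert}\left(I - z_i z_i^{\top}\right),
\qquad
\left(\frac{\partial z_i}{\partial w_i}\right)^{\!\top} g
  = \frac{1}{\lVert w_i \rVert}\bigl(g - (z_i \cdot g)\, z_i\bigr)
```

Setting $g = z_l$, the gradient of the inner product $z_i \cdot z_l$ with respect to $w_i$ is $\bigl(z_l - (z_i \cdot z_l)\, z_i\bigr) / \lVert w_i \rVert$: only the component of $z_l$ orthogonal to $z_i$ survives.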

- 13. Method
  - Supervised Contrastive Loss Gradient Properties (Cont.)
    - Easy positives and negatives: small gradient / hard positives and negatives: large gradient
    - Easy positive: $z_i \cdot z_j \approx 1$, $P_{ij}$ is large
    - Hard positive: $z_i \cdot z_j \approx 0$, $P_{ij}$ is moderate
      → $\lVert \nabla_{z_i} \mathcal{L}^{sup}_{i,pos} \rVert$ is small for weak positives and large for hard positives
    - For a weak negative $z_i \cdot z_k \approx -1$ and for a hard negative $z_i \cdot z_k \approx 0$, which affects $(z_k - (z_i \cdot z_k)\, z_i) \cdot P_{ik}$
    - The term $((z_i \cdot z_l)\, z_i - z_l)$ can play this role only when a normalization layer is attached at the end of the projection network → the network must use normalization!
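
The geometric part of this argument can be checked numerically: for unit vectors, the norm of $(z_i \cdot z_l)\, z_i - z_l$ equals $\sqrt{1 - (z_i \cdot z_l)^2}$, which vanishes for easy positives and weak negatives and peaks when the inner product is near zero. The sketch below covers only this factor, not the probability weights $P_{ij}$ and $P_{ik}$; the helper names and the chosen angles are illustrative assumptions.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def unit(angle_deg):
    """Unit vector in the plane at the given angle."""
    a = math.radians(angle_deg)
    return [math.cos(a), math.sin(a)]

def tangent_norm(z_i, z_l):
    """Norm of (z_i . z_l) z_i - z_l for unit vectors z_i, z_l."""
    s = dot(z_i, z_l)
    residual = [s * a - b for a, b in zip(z_i, z_l)]
    return math.sqrt(dot(residual, residual))

anchor = unit(0.0)
easy_positive = tangent_norm(anchor, unit(5.0))    # inner product close to 1
hard_sample = tangent_norm(anchor, unit(90.0))     # inner product close to 0
weak_negative = tangent_norm(anchor, unit(175.0))  # inner product close to -1
print(easy_positive, hard_sample, weak_negative)
```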

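- 14. Method
  - Connections to Triplet Loss
    - Contrastive learning is closely related to the triplet loss
    - The triplet loss uses one positive and one negative → it can be seen as a contrastive loss with a single positive and a single negative
    - Assume the anchor and positive representations are far better aligned than the anchor and negative ($z_a \cdot z_p \gg z_a \cdot z_n$)
    - The loss then has the same form as a triplet loss with margin $\alpha = 2\tau$
    - The contrastive loss nevertheless performs better and needs less computation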
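
The steps behind the margin $\alpha = 2\tau$ can be written out as follows. This is a sketch of the derivation under the stated assumption $z_a \cdot z_p \gg z_a \cdot z_n$, using $\lVert z_a - z \rVert^2 = 2 - 2\, z_a \cdot z$ for unit vectors; the first approximation uses $\log(1 + x) \approx x$ for small $x$, and the second is a first-order Taylor expansion of the exponential.

```latex
\begin{aligned}
\mathcal{L}^{con}
  &= -\log \frac{\exp(z_a \cdot z_p / \tau)}
                {\exp(z_a \cdot z_p / \tau) + \exp(z_a \cdot z_n / \tau)} \\
  &= \log\left(1 + \exp\left((z_a \cdot z_n - z_a \cdot z_p) / \tau\right)\right) \\
  &\approx \exp\left((z_a \cdot z_n - z_a \cdot z_p) / \tau\right) \\
  &\approx 1 + \frac{1}{\tau}\left(z_a \cdot z_n - z_a \cdot z_p\right) \\
  &= 1 + \frac{1}{2\tau}\left(\lVert z_a - z_p \rVert^2 - \lVert z_a - z_n \rVert^2\right) \\
  &\propto \lVert z_a - z_p \rVert^2 - \lVert z_a - z_n \rVert^2 + 2\tau
\end{aligned}
```

The last line has the form of a triplet loss with margin $2\tau$.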

- 15. Experiments
  - ImageNet Classification Accuracy
    - Supervised contrastive loss achieves state-of-the-art accuracy on ImageNet

- 16. Experiments
  - Robustness to Image Corruptions and Calibration
    - Also achieves state-of-the-art robustness on ImageNet-C

- 17. Experiments
  - Hyperparameter Stability
    - Performance stays stable under hyperparameter changes
      - Different optimizers, data augmentations, learning rates
    - Shows small variance across optimizers and augmentations
    - Conjectured to be due to the smoother geometry of the hypersphere

- 18. Experiments
  - Effect of Number of Positives
    - Ablation study on the number of positives
    - Performance improves as the number of positives increases
    - Trade-off: computational cost also rises as the number of positives increases
    - The positives include the same sample under a different augmentation; the rest are different samples from the same class
    - Self-supervised learning corresponds to 1 positive

- 19. Experiments
  - Training Details
    - Epochs: 700 (pre-training stage)
      - Each step was about 50% slower than cross-entropy, because the cross-products between all elements of the minibatch must be computed
    - Batch size: ~8192 (2048 is also sufficient)
      - 8192 for ResNet50, 2048 for ResNet200 (larger networks need a smaller batch size)
      - At a fixed batch size, performance similar to cross-entropy can be reached with a larger learning rate and fewer epochs
    - Temperature: $\tau = 0.07$
      - Smaller temperatures give better performance but can make training difficult because of numerical stability issues
    - AutoAugment performs well for both supervised contrastive and cross-entropy training
    - LARS, RMSProp, and SGD with momentum were tried in different permutations for the initial pre-training step and for training the dense layer
    - ResNet trained with cross-entropy: momentum optimizer; with supervised contrastive loss: LARS for pre-training, RMSProp for the dense layer
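
The numerical stability issue with small temperatures can be illustrated on the log-denominator of the contrastive loss. The sketch below is only an illustration: the similarity values and the very small temperature `0.001` are chosen to force an overflow in double precision and are not settings from the slides, and the function names are hypothetical.

```python
import math

def naive_log_denominator(sims, tau):
    """log(sum_k exp(s_k / tau)) computed directly; overflows for small tau."""
    return math.log(sum(math.exp(s / tau) for s in sims))

def stable_log_denominator(sims, tau):
    """Same quantity, with the maximum logit subtracted before exponentiating."""
    logits = [s / tau for s in sims]
    m = max(logits)
    return m + math.log(sum(math.exp(l - m) for l in logits))

sims = [1.0, 0.0, -1.0]  # inner products of unit vectors lie in [-1, 1]

# At tau = 0.07 both versions agree.
print(naive_log_denominator(sims, 0.07), stable_log_denominator(sims, 0.07))

# At a much smaller temperature the naive version overflows.
try:
    naive_log_denominator(sims, 0.001)
    overflowed = False
except OverflowError:
    overflowed = True
print(overflowed, stable_log_denominator(sims, 0.001))
```

Subtracting the maximum removes the overflow in the forward pass, but very small temperatures still make the softmax nearly one-hot, which concentrates the gradient on a few pairs.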

- 20. Thank you