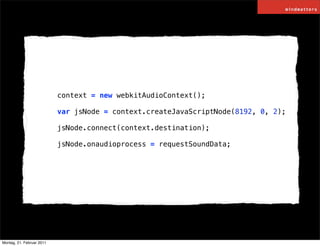

This document covers implementing real-time audio with web APIs and presents several examples and code snippets. It describes using the Audio Data API and the Web Audio API to generate and process audio data, and touches on possible applications in music and games.

![<!DOCTYPE html>

<html>

<head>

<title>JavaScript Audio Write Example</title>

</head>

<body>

<input type="text" size="4" id="freq" value="440"><label for="freq">Hz</label>

<button onclick="start()">play</button>

<button onclick="stop()">stop</button>

<script type="text/javascript">

function AudioDataDestination(sampleRate, readFn) {

// Initialize the audio output.

var audio = new Audio();

audio.mozSetup(1, sampleRate);

var currentWritePosition = 0;

var prebufferSize = sampleRate / 2; // buffer 500ms

var tail = null;

// This function is called at a regular interval to populate

// the audio output buffer.

setInterval(function() {

var written;

// Check if some data was not written in previous attempts.

if(tail) {

written = audio.mozWriteAudio(tail);

currentWritePosition += written;

if(written < tail.length) {

// Not all the data was written, saving the tail...

tail = tail.slice(written);

return; // ... and exit the function.

}

tail = null;

}

// Check if we need to add some data to the audio output.

var currentPosition = audio.mozCurrentSampleOffset();

var available = currentPosition + prebufferSize - currentWritePosition;

if(available > 0) {

// Request some sound data from the callback function.

var soundData = new Float32Array(available);

readFn(soundData);

// Writing the data.

written = audio.mozWriteAudio(soundData);

if(written < soundData.length) {

// Not all the data was written, saving the tail.

tail = soundData.slice(written);

}

currentWritePosition += written;

}

}, 100);

}

// Control and generate the sound.

var frequency = 0, currentSoundSample;

var sampleRate = 44100;

function requestSoundData(soundData) {

if (!frequency) {

return; // no sound selected

}

var k = 2* Math.PI * frequency / sampleRate;

for (var i=0, size=soundData.length; i<size; i++) {

soundData[i] = Math.sin(k * currentSoundSample++);

}

}

var audioDestination = new AudioDataDestination(sampleRate, requestSoundData);

function start() {

currentSoundSample = 0;

frequency = parseFloat(document.getElementById("freq").value);

}

function stop() {

frequency = 0;

}

</script>

Monday, 21 February 2011](https://image.slidesharecdn.com/2011-02-21-hhjs-web-audio-110221162050-phpapp02/85/realtime-audio-on-ze-web-hhjs-21-320.jpg)
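The write loop on this slide handles two cases: samples left over from an earlier short write (the `tail`), and topping the output up to the prebuffer size. The following is a minimal sketch of that same logic with the audio element abstracted away, so it can be run outside a browser. The names `makePump`, `sink`, `fakeSink`, and `accepted` are hypothetical and do not appear in the talk; `sink.write` stands in for `mozWriteAudio` and `sink.position` for `mozCurrentSampleOffset`.

```javascript
// Sketch of the slide's buffering logic. `sink` is a hypothetical stand-in:
//   write(samples) -> number of samples accepted (role of mozWriteAudio)
//   position()     -> samples played so far (role of mozCurrentSampleOffset)
function makePump(sink, prebufferSize, readFn) {
  var written = 0; // total samples accepted by the sink so far
  var tail = null; // samples the sink did not accept on the last call

  return function pump() {
    // First retry any samples left over from a previous short write.
    if (tail) {
      var n = sink.write(tail);
      written += n;
      if (n < tail.length) {
        tail = tail.subarray(n); // still not fully written, try again later
        return;
      }
      tail = null;
    }
    // Then top up so that `prebufferSize` samples stay queued ahead of playback.
    var available = sink.position() + prebufferSize - written;
    if (available > 0) {
      var data = new Float32Array(available);
      readFn(data);
      var m = sink.write(data);
      if (m < data.length) {
        tail = data.subarray(m);
      }
      written += m;
    }
  };
}

// Usage with a fake sink that accepts at most 100 samples per call
// and never advances its playback position.
var accepted = [];
var fakeSink = {
  write: function (samples) {
    var n = Math.min(100, samples.length);
    for (var i = 0; i < n; i++) accepted.push(samples[i]);
    return n;
  },
  position: function () { return 0; }
};
var counter = 0;
var pump = makePump(fakeSink, 250, function (buf) {
  for (var i = 0; i < buf.length; i++) buf[i] = counter++;
});
pump(); // requests 250 samples, sink takes 100, 150 kept as tail
pump(); // sink takes 100 more from the tail, 50 remain
pump(); // tail drains, prebuffer is full, nothing new is requested
```

In the slide, the equivalent of `pump` runs from `setInterval` every 100 ms. Keeping the tail rather than regenerating it is what keeps the sample stream gap-free when the output accepts fewer samples than offered.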

![[...]

var audio = new Audio();

audio.mozSetup(1, sampleRate);

[...]

written = audio.mozWriteAudio(tail);

[...]

](https://image.slidesharecdn.com/2011-02-21-hhjs-web-audio-110221162050-phpapp02/85/realtime-audio-on-ze-web-hhjs-22-320.jpg)
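The two calls highlighted here, `mozSetup` and `mozWriteAudio`, belong to Mozilla's Audio Data API, which only Firefox implemented. For comparison, here is a rough sketch of producing the same sine tone through the standard Web Audio API. The helper names `fillSine` and `playTone` are hypothetical, and the one-second buffer length is an arbitrary choice for illustration; the sample math follows the slide's `requestSoundData`.

```javascript
// Fill a Float32Array with a sine tone, using the same formula as the
// slide's requestSoundData. Returns the next sample index so that a
// later call can continue the waveform without a phase jump.
function fillSine(samples, frequency, sampleRate, startSample) {
  var k = 2 * Math.PI * frequency / sampleRate;
  for (var i = 0; i < samples.length; i++) {
    samples[i] = Math.sin(k * (startSample + i));
  }
  return startSample + samples.length;
}

// Play one second of tone via the Web Audio API when it is available.
// Returns false when no AudioContext exists (for example outside a browser).
function playTone(frequency) {
  var Ctx = typeof window !== 'undefined' &&
    (window.AudioContext || window.webkitAudioContext);
  if (!Ctx) return false;
  var ctx = new Ctx();
  // One channel, one second long, at the context's own sample rate.
  var buffer = ctx.createBuffer(1, ctx.sampleRate, ctx.sampleRate);
  fillSine(buffer.getChannelData(0), frequency, ctx.sampleRate, 0);
  var source = ctx.createBufferSource();
  source.buffer = buffer;
  source.connect(ctx.destination);
  source.start();
  return true;
}
```

Browsers generally require a user gesture before audio may start, so `playTone(440)` would typically be called from a click handler such as the slide's `play` button.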

![soundbridge = SoundBridge(2, 44100, '..');

[...]

soundbridge.setCallback(calc);

soundbridge.play();

}, 1000);

](https://image.slidesharecdn.com/2011-02-21-hhjs-web-audio-110221162050-phpapp02/85/realtime-audio-on-ze-web-hhjs-32-320.jpg)