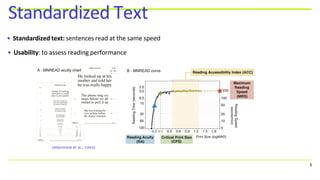

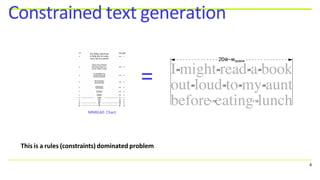

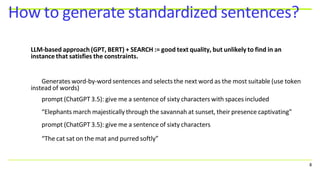

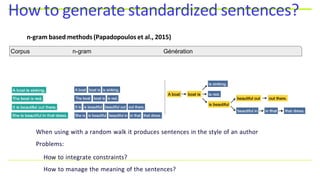

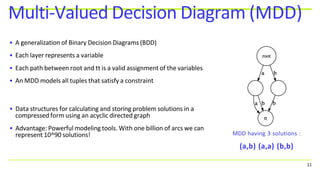

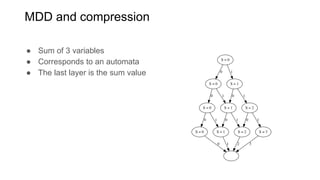

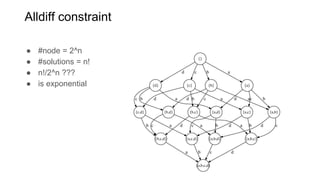

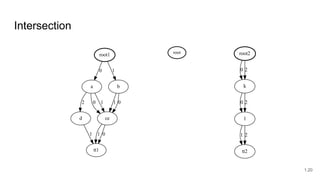

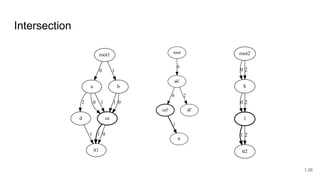

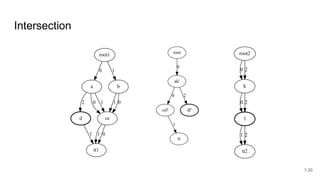

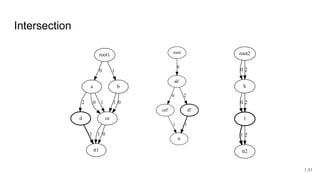

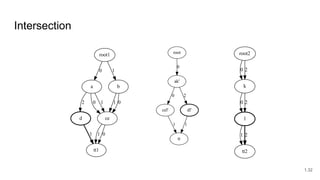

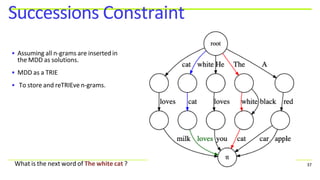

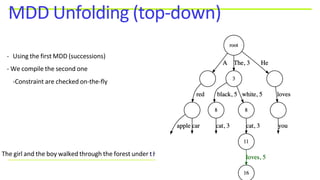

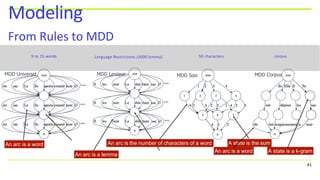

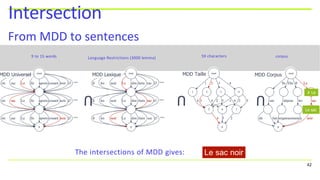

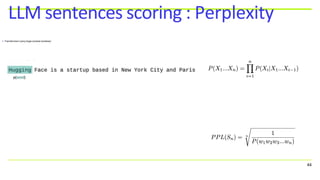

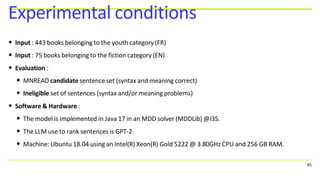

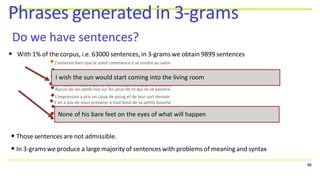

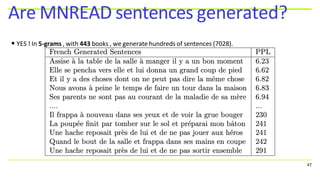

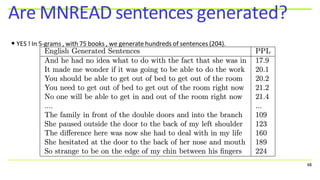

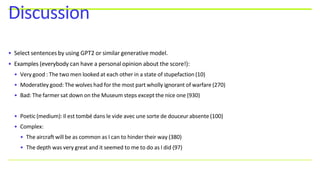

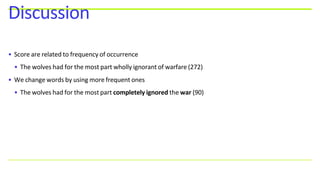

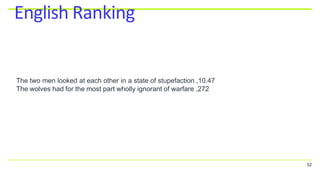

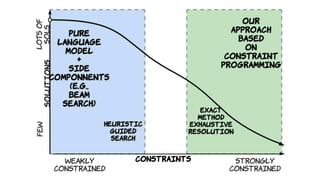

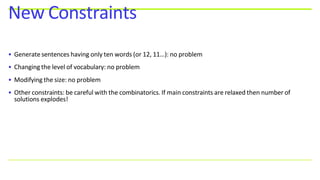

The document presents a new approach to constrained text generation for assessing reading performance: it creates standardized sentences using methods that include constraint programming and machine learning models. It outlines the difficulty of generating enough sentences that satisfy specific linguistic constraints, and describes the development of multi-valued decision diagrams (MDDs) to represent and manipulate these constraints efficiently. The findings show that LLMs such as GPT-2 are effective for ranking the generated sentences, and point to potential applications to similar tasks in other languages.
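To make the MDD idea concrete, here is a minimal sketch of a layered decision diagram over word positions. It is not the authors' implementation: the toy vocabulary, the `successors` table (which word may follow which), and the character-length bounds are all hypothetical, chosen only to illustrate how constraints can be enforced while the diagram is built rather than by filtering afterwards.

```python
from collections import defaultdict


def build_mdd(n_words, start_words, successors, min_chars, max_chars):
    """Build a layered diagram whose root-to-leaf paths are valid sentences.

    A node state is (last_word, chars_so_far); partial sentences that reach
    the same state share one node. All inputs are illustrative assumptions.
    """
    # layers[i] maps a node state to its outgoing arcs {word: child_state}
    layers = [defaultdict(dict) for _ in range(n_words)]
    root = (None, 0)
    frontier = {root}
    for i in range(n_words):
        next_frontier = set()
        for state in frontier:
            last, chars = state
            candidates = start_words if last is None else successors.get(last, ())
            for word in candidates:
                # Add one character for the space, except before the first word.
                total = chars + len(word) + (0 if last is None else 1)
                if total > max_chars:
                    continue  # length constraint violated during construction
                if i == n_words - 1 and total < min_chars:
                    continue  # complete sentence would be too short
                child = (word, total)
                layers[i][state][word] = child
                next_frontier.add(child)
        frontier = next_frontier

    # Bottom-up pruning: drop arcs and nodes that cannot reach the last layer.
    alive = frontier
    for i in range(n_words - 1, -1, -1):
        pruned = {}
        for state, arcs in layers[i].items():
            kept = {w: c for w, c in arcs.items() if c in alive}
            if kept:
                pruned[state] = kept
        layers[i] = pruned
        alive = set(pruned)
    return root, layers


def enumerate_sentences(root, layers):
    """Yield every sentence encoded by the diagram, in sorted arc order."""
    def walk(i, state, prefix):
        if i == len(layers):
            yield " ".join(prefix)
            return
        for word, child in sorted(layers[i].get(state, {}).items()):
            yield from walk(i + 1, child, prefix + [word])
    yield from walk(0, root, [])


# Hypothetical toy data: three-word sentences of 12 to 13 characters.
start_words = ["the", "a"]
successors = {
    "the": ["cat", "dog"],
    "a": ["cat", "dog"],
    "cat": ["sleeps", "runs"],
    "dog": ["sleeps", "runs"],
}
root, layers = build_mdd(3, start_words, successors, min_chars=12, max_chars=13)
sentences = list(enumerate_sentences(root, layers))
print(sentences)
```

Because the length bound is checked as each arc is added, sentences that are too long never enter the diagram, and the pruning pass removes prefixes that cannot be completed. Adding or removing a rule in this sketch means changing one check in `build_mdd`, which loosely mirrors the modularity the slides claim for the approach.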

![Conclusion](https://image.slidesharecdn.com/kolloqiumjcrginslides-241112095804-39f979a5/85/Constrained-text-generation-to-measure-reading-performance-A-new-approach-based-on-constraint-programming-54-320.jpg)

Conclusion

- Promising method: more suitable for handling constraints than generic methods (e.g., GPT, BERT) and more flexible than the ad-hoc method of Mansfield et al. [3].
- Advantages: modularity (rules are easy to add or remove), constraints taken into account at generation time, potentially applicable to other languages.
- Perspectives: a perplexity constraint.
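
The perplexity constraint mentioned as a perspective is not specified in the slides. As a rough sketch, perplexity is the exponential of the negative mean log-probability a language model assigns to the tokens of a sentence, so lower values indicate more natural text. The example below assumes the per-token natural-log probabilities have already been obtained from some model (for instance GPT-2); the numbers, the threshold, and the helper names are made up for illustration.

```python
import math


def perplexity(token_log_probs):
    """Perplexity from per-token natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("at least one token log-probability is required")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def rank_by_perplexity(scored, max_perplexity=None):
    """Sort sentences from lowest to highest perplexity.

    `scored` maps each sentence to its token log-probabilities.
    `max_perplexity` is a hypothetical threshold acting as the constraint.
    """
    ranked = sorted(scored, key=lambda s: perplexity(scored[s]))
    if max_perplexity is not None:
        ranked = [s for s in ranked if perplexity(scored[s]) <= max_perplexity]
    return ranked


# Made-up log-probabilities, not the output of a real model.
scored = {
    "the cat runs": [math.log(0.5), math.log(0.5), math.log(0.5)],
    "a dog sleeps": [math.log(0.25), math.log(0.25), math.log(0.25)],
}
ranking = rank_by_perplexity(scored)
filtered = rank_by_perplexity(scored, max_perplexity=3.0)
```

Used as a ranking, this orders candidates after generation; used with a threshold, it behaves like a filter. Enforcing such a bound during generation, as a true constraint, would need a different mechanism than this post-hoc check.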