Web scraping with nutch solr part 2

Part 2 of a three-part presentation showing how Nutch and Solr may be used to crawl the web, extract data, and prepare it for loading into a data warehouse.

By Mike Frampton

Web scraping with nutch solr part 2

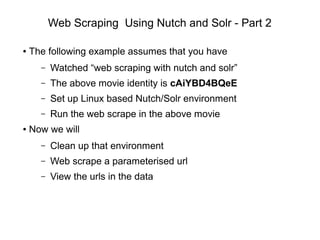

- 1. Web Scraping Using Nutch and Solr - Part 2
  - This example assumes that you have
    - Watched "web scraping with nutch and solr" (video ID cAiYBD4BQeE)
    - Set up a Linux-based Nutch/Solr environment
    - Run the web scrape shown in that video
  - Now we will
    - Clean up that environment
    - Web scrape a parameterised URL
    - View the URLs in the data

- 2. Empty Nutch Database
  - Clean up the Nutch crawl database
    - The previous run used apache-nutch-1.6/nutch_start.sh
    - That script contained the `-dir crawl` option
    - This created the apache-nutch-1.6/crawl directory, which contains our Nutch data
  - Clean it with
    - `cd apache-nutch-1.6; rm -rf crawl`
  - Do this only because the directory contained dummy data!
  - The next run of the script will create the directory again
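
The clean-up step above can also be scripted. The following is a minimal Python sketch, not part of the original slides; the function name `remove_crawl_dir` is hypothetical, and the demo runs against a temporary directory rather than a real apache-nutch-1.6 install.

```python
import shutil
import tempfile
from pathlib import Path


def remove_crawl_dir(nutch_home: str, crawl_name: str = "crawl") -> bool:
    """Delete <nutch_home>/<crawl_name>; return True if a directory was removed."""
    crawl_dir = Path(nutch_home) / crawl_name
    if not crawl_dir.is_dir():
        return False  # nothing to clean
    shutil.rmtree(crawl_dir)
    return True


# Demo on a throwaway directory that stands in for apache-nutch-1.6.
with tempfile.TemporaryDirectory() as nutch_home:
    (Path(nutch_home) / "crawl" / "crawldb").mkdir(parents=True)
    first = remove_crawl_dir(nutch_home)   # directory existed
    second = remove_crawl_dir(nutch_home)  # already gone

print(first, second)
```

Returning a flag instead of raising makes the helper safe to call before the first crawl, when no crawl directory exists yet.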

- 3. Empty Solr Database
  - Clean the Solr database through its update URL
    - Keep this command for later use
    - Only use it if you need to empty your data
  - Run the following with curl (with the Solr server running)
    - `curl "http://localhost:8983/solr/update?commit=true" -H "Content-Type: text/xml" -d '<delete><query>*:*</query></delete>'`
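
As a rough illustration of what that delete request contains, here is a Python sketch that builds the same POST with the standard library. The `text/xml` content type and the helper name `build_delete_all_request` are assumptions added here, not taken from the slides. The request is only built, not sent, because sending it empties the index.

```python
import urllib.request

# URL and XML body as given on the slide.
SOLR_UPDATE_URL = "http://localhost:8983/solr/update?commit=true"
DELETE_ALL_XML = "<delete><query>*:*</query></delete>"


def build_delete_all_request(update_url: str = SOLR_UPDATE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST that deletes every document in the index."""
    return urllib.request.Request(
        update_url,
        data=DELETE_ALL_XML.encode("utf-8"),
        headers={"Content-Type": "text/xml"},  # assumed content type for XML updates
        method="POST",
    )


request = build_delete_all_request()
print(request.get_method(), request.full_url)

# To really run it against a local Solr server (destructive!):
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```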

- 4. Set up Nutch
  - Now we will do something more complex
  - Web scrape a URL that has parameters, i.e.
    - `http://<site>/<function>?var1=val1&var2=val2`
  - This web scrape will
    - Have the extra URL characters '?', '=' and '&'
    - Need greater search depth
    - Need better URL filtering
  - Remember that you need permission to scrape a third-party web site
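
To make the structure of a parameterised URL concrete, this short Python sketch splits one into its parts. The host `example.com` and path `/search` are placeholders standing in for `<site>` and `<function>`; they are not from the slides.

```python
from urllib.parse import parse_qs, urlsplit

# Placeholder URL in the shape http://<site>/<function>?var1=val1&var2=val2
url = "http://example.com/search?var1=val1&var2=val2"

parts = urlsplit(url)
params = parse_qs(parts.query)                   # query string -> dict of lists
special = [ch for ch in "?=&" if ch in url]      # the extra characters a URL filter must allow

print(parts.netloc, parts.path)
print(params)
print(special)
```

The characters collected in `special` are the ones that a crawler's URL filter has to let through for this kind of scrape to work.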

- 5. Nutch Configuration
  - Change the seed file for Nutch
    - apache-nutch-1.6/urls/seed.txt
  - In this instance I will use a URL of the form
    - `http://somesite.co.nz/Search?DateRange=7&industry=62`
    - (this is not a real URL, just an example)
  - Change the conf/regex-urlfilter.txt entries, i.e.
    - `# skip URLs containing certain characters`
    - `-[*!@]`
    - `# accept anything else`
    - `+^http://([a-z0-9]*\.)*somesite.co.nz/Search`
  - This will only consider somesite Search URLs
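
The effect of these two filter rules can be approximated in a few lines of Python. This is a sketch under stated assumptions, not Nutch's own code: it assumes rules are tried in order, the first pattern found anywhere in the URL decides (`+` accepts, `-` rejects), and a URL that matches no rule is rejected. Python's `re` stands in for Java regex, and the dot in the host group is escaped here. The test URLs are made up around the slide's example site.

```python
import re

RULES_TEXT = r"""
# skip URLs containing certain characters
-[*!@]
# accept only Search URLs on the example site
+^http://([a-z0-9]*\.)*somesite.co.nz/Search
"""


def parse_rules(text):
    """Turn filter lines into (accept, compiled_pattern) pairs."""
    rules = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments
        if line[0] not in "+-":
            raise ValueError(f"rule must start with + or -: {line!r}")
        rules.append((line[0] == "+", re.compile(line[1:])))
    return rules


def accepts(rules, url):
    """First matching rule wins; no match means the URL is dropped."""
    for accept, pattern in rules:
        if pattern.search(url):
            return accept
    return False


rules = parse_rules(RULES_TEXT)
for candidate in [
    "http://somesite.co.nz/Search?DateRange=7&industry=62",
    "http://www.somesite.co.nz/Search?DateRange=7",
    "http://somesite.co.nz/About",
    "http://somesite.co.nz/Search?q=a*b",
]:
    print(accepts(rules, candidate), candidate)
```

Because '?' and '=' are absent from the skip rule, the parameterised Search URLs get through, while anything outside /Search falls through both rules and is dropped.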

- 6. Run Nutch
  - Now run Nutch using the start script
    - `cd apache-nutch-1.6 ; ./nutch_start.bash`
  - Monitor for errors in the Solr admin log window
  - The Nutch crawl should end with
    - crawl finished: crawl
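
If the script's output is captured to a file or a string, the final check can be automated. The Python sketch below is only an illustration: the helper name `crawl_finished` is hypothetical, and the sample text is a placeholder rather than real Nutch output. Only the last line, "crawl finished: crawl", comes from the slide.

```python
def crawl_finished(output: str, crawl_dir: str = "crawl") -> bool:
    """Return True if the last non-empty output line reports a finished crawl."""
    lines = [line.strip() for line in output.splitlines() if line.strip()]
    return bool(lines) and lines[-1] == f"crawl finished: {crawl_dir}"


# Placeholder text, not actual Nutch log output.
good_output = "(earlier crawl output omitted)\ncrawl finished: crawl\n"
bad_output = "(earlier crawl output omitted)\n"

print(crawl_finished(good_output), crawl_finished(bad_output))
```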

- 7. Checking Data
  - Data should have been indexed in Solr
  - In the Solr Admin window
    - Set 'Core Selector' = collection1
    - Click 'Query'
    - In the Query window set the fl field = url
    - Click Execute Query
  - The result (next slide) shows the filtered list of URLs in Solr
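
The same query can be expressed as a request URL instead of clicks in the admin window. The Python sketch below builds it and pulls the url field out of a JSON response. It assumes the default local address `http://localhost:8983/solr` and the `wt=json` response format. The helper names and the sample response are illustrative, not output from a real crawl.

```python
import json
from urllib.parse import urlencode


def build_select_url(core="collection1", base="http://localhost:8983/solr", rows=10):
    """Build a select URL that matches all documents and returns only the url field."""
    query = urlencode({"q": "*:*", "fl": "url", "wt": "json", "rows": rows})
    return f"{base}/{core}/select?{query}"


def extract_urls(response_text):
    """Pull the url field out of each document in a Solr JSON response."""
    docs = json.loads(response_text)["response"]["docs"]
    return [doc["url"] for doc in docs if "url" in doc]


select_url = build_select_url()
print(select_url)

# Made-up response in the shape Solr returns for wt=json.
sample_response = json.dumps({
    "responseHeader": {"status": 0},
    "response": {
        "numFound": 1,
        "start": 0,
        "docs": [{"url": "http://somesite.co.nz/Search?DateRange=7&industry=62"}],
    },
})
urls = extract_urls(sample_response)
print(urls)
```

Fetching `select_url` with any HTTP client while Solr is running should return the live equivalent of the sample response.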

- 9. Results
  - Congratulations, you have completed your second crawl
    - With parameterised URLs
    - With more complex URL filtering
    - With a Solr Query search

- 10. Contact Us
  - Feel free to contact us at
    - www.semtech-solutions.co.nz
    - info@semtech-solutions.co.nz
  - We offer IT project consultancy
  - We are happy to hear about your problems
  - You pay only for the hours you need to solve your problems