Data Science Job ready #DataScienceInterview Question and Answers 2022 | #DataScienceProjects

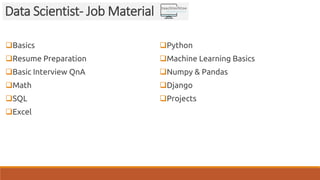

- 1. Basics, Resume Preparation, Basic Interview QnA, Math, SQL, Excel, Python, Machine Learning Basics, Numpy & Pandas, Django, Projects. Data Scientist- Job Material

- 2. Basics: Data science is the in-depth study of large amounts of data. It involves extracting meaningful insights from raw, structured, and unstructured data using the scientific method, different technologies, and algorithms. Data science is about data gathering, analysis, and decision-making: finding patterns in data through analysis and making predictions about the future. In short, data science is all about: • Asking the correct questions and analyzing the raw data. • Modeling the data using various complex and efficient algorithms. • Visualizing the data to get a better perspective. • Understanding the data to make better decisions and find the final result.

- 3. Basics: Data Scientist- Job Material

- 4. Basics: Example: Suppose we want to travel from station A to station B by car. We need to make some decisions: which route will get us there fastest, which route will have no traffic jams, and which will be the most cost-effective. All these decision factors act as input data, and analyzing them gives us an appropriate answer. This analysis of data is called data analysis, which is a part of data science.

- 5. Basics: With the help of data science, we can convert massive amounts of raw and unstructured data into meaningful insights. Data science is being adopted by many companies, from big brands to startups. Google, Amazon, Netflix, etc., which handle huge amounts of data, use data science algorithms to improve the customer experience. Data science is also used to automate transportation, for example in building self-driving cars, which are seen as the future of transportation. It can help with many kinds of prediction, such as surveys, elections, and flight ticket confirmation.

- 6. Basics: Types of Data Science Jobs: If you learn data science, you get the opportunity to pursue various exciting job roles in this domain. The main job roles are given below: • Data Scientist • Data Analyst • Machine Learning Expert • Data Engineer • Data Architect • Data Administrator • Business Analyst • Business Intelligence Manager. Below is an explanation of some key data science job titles. 1. Data Analyst: A data analyst is an individual who mines large amounts of data, models the data, and looks for patterns, relationships, trends, and so on. The analyst then produces visualizations and reports that support decision-making and problem-solving. Skills required: To become a data analyst, you need a good background in mathematics, business intelligence, data mining, and basic statistics. You should also be familiar with computer languages and tools such as MATLAB, Python, SQL, Hive, Pig, Excel, SAS, R, JS, Spark, etc.

- 7. Basics: 2. Machine Learning Expert: A machine learning expert works with the various machine learning algorithms used in data science, such as regression, clustering, classification, decision trees, random forests, etc. Skills required: Programming languages such as Python, C++, R, and Java, plus frameworks such as Hadoop. You should also have an understanding of various algorithms, analytical problem-solving skills, probability, and statistics. 3. Data Scientist: A data scientist is a professional who works with enormous amounts of data to come up with compelling business insights by applying various tools, techniques, methodologies, algorithms, etc. Skills required: To become a data scientist, you should have technical skills in languages and tools such as R, SAS, SQL, Python, Hive, Pig, Apache Spark, and MATLAB. Data scientists must also understand statistics, mathematics, and visualization, and have good communication skills. Tools for Data Science: Following are some tools required for data science: • Data analysis tools: R, Python, Statistics, SAS, Jupyter, RStudio, MATLAB, Excel, RapidMiner. • Data warehousing: ETL, SQL, Hadoop, Informatica/Talend, AWS Redshift. • Data visualization tools: R, Jupyter, Tableau, Cognos. • Machine learning tools: Spark, Mahout, Azure ML Studio.

- 8. Basics: Prerequisites for Data Science. Non-Technical Prerequisites: Curiosity: To learn data science, you must be curious. When you are curious and ask many questions, you can understand the business problem more easily. Critical thinking: A data scientist needs critical thinking to find multiple new ways to solve a problem efficiently. Communication skills: Communication skills are very important for a data scientist because, after solving a business problem, you need to communicate the solution to the team. Technical Prerequisites: Machine learning: To understand data science, you need to understand the concepts of machine learning. Data science uses machine learning algorithms to solve various problems. Mathematical modeling: Mathematical modeling is required to make fast calculations and predictions from the available data. Statistics: A basic understanding of statistics, such as the mean, median, and standard deviation, is required. It is needed to extract knowledge and obtain better results from the data. Computer programming: Knowledge of at least one programming language is required. R and Python are commonly used languages for data science, and Spark is a commonly used framework. Databases: An in-depth understanding of databases and of SQL is essential for retrieving and working with data.

- 9. Basics: How to solve a problem in Data Science using machine learning algorithms? Let's look at the most common types of problems that occur in data science and the approach to solving them. In data science, problems are solved using algorithms, and each type of question maps to an applicable family of algorithms:

- 10. Basics: Is this A or B? This type of problem has only two fixed answers, such as Yes or No, 1 or 0, may or may not. Problems of this type can be solved using classification algorithms. Is this different? This type of question involves data that follows various patterns, and we need to find the odd one out. Problems of this type can be solved using anomaly detection algorithms. How much or how many? This type of problem asks for a numerical value, such as what the temperature will be today, and can be solved using regression algorithms. How is this organized? If you have a problem that deals with the organization of data, it can be solved using clustering algorithms. A clustering algorithm organizes and groups the data based on features, colors, or other common characteristics.
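
As a rough illustration of the "Is this different?" question, the sketch below flags values that lie far from the rest of the data using a z-score. The function name `find_anomalies`, the threshold of 2 standard deviations, and the sample readings are all assumptions made for this example; real anomaly detection algorithms are usually more elaborate.

```python
from statistics import mean, pstdev


def find_anomalies(values, threshold=2.0):
    """Return the values whose z-score exceeds the threshold (hypothetical helper)."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values are identical, so nothing stands out
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Made-up sensor readings: one value is clearly different from the rest.
readings = [10, 11, 9, 10, 12, 10, 11, 50]
print(find_anomalies(readings))
```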

- 11. Basics: Data Science Life Cycle. Here is how a data scientist works: • Ask the right questions - To understand the business problem. • Explore and collect data - From databases, web logs, customer feedback, etc. • Extract the data - Transform the data to a standardized format. • Clean the data - Remove erroneous values from the data. • Find and replace missing values - Check for missing values and replace them with a suitable value (e.g. an average value). • Normalize data - Scale the values to a practical range (e.g. 140 cm is smaller than 1.8 m, yet the number 140 is larger than 1.8, so scaling is important). • Analyze data, find patterns, and make future predictions. • Represent the result - Present the result with useful insights in a way the "company" can understand.
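
The "find and replace missing values" and "normalize data" steps can be sketched in a few lines of Python. This is a minimal illustration, assuming missing values are represented as `None`, that the mean is a suitable replacement, and that min-max scaling to the range 0 to 1 is the desired normalization. The helper names and the height values are hypothetical.

```python
from statistics import mean


def fill_missing_with_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    avg = mean(observed)
    return [avg if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly so the smallest maps to 0.0 and the largest to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # avoid division by zero for constant data
    return [(v - lo) / (hi - lo) for v in values]


heights_cm = [140, None, 180, 160]            # made-up data with one missing value
filled = fill_missing_with_mean(heights_cm)   # None becomes the mean of 140, 180, 160
scaled = min_max_scale(filled)
print(filled)
print(scaled)
```

After scaling, all values share the same range regardless of the original unit, which is the point of the centimeter versus meter example.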

- 12. Basics: The main phases of the data science life cycle are given below: 1. Discovery: The first phase is discovery, which involves asking the right questions. When you start any data science project, you need to determine the basic requirements, priorities, and project budget. In this phase, we determine all the requirements of the project, such as the number of people, technology, time, data, and the end goal, and then frame the business problem as a first hypothesis. 2. Data preparation: Data preparation is also known as data munging. In this phase, we perform the following tasks: • Data cleaning • Data reduction • Data integration • Data transformation. After performing these tasks, we can easily use the data in later phases. 3. Model planning: In this phase, we determine the methods and techniques for establishing the relationships between input variables. We apply exploratory data analysis (EDA), using various statistical formulas and visualization tools, to understand the relationships between variables and to see what the data can tell us. Common tools used for model planning are: • SQL Analysis Services • R • SAS • Python

- 13. Basics: 4. Model building: In this phase, the process of model building starts. We create datasets for training and testing. We apply different techniques, such as association, classification, and clustering, to build the model. Following are some common model-building tools: o SAS Enterprise Miner o WEKA o SPSS Modeler o MATLAB 5. Operationalize: In this phase, we deliver the final reports of the project, along with briefings, code, and technical documents. This phase gives a clear overview of the complete project's performance and other components on a small scale before full deployment. 6. Communicate results: In this phase, we check whether we have reached the goal set in the initial phase, and we communicate the findings and final result to the business team.
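
Creating datasets for training and testing, as described in the model-building phase, can be sketched as a random split. This is only an illustration: the function name `train_test_split`, the 25% test fraction, and the fixed seed are assumptions chosen for the example, and libraries such as scikit-learn offer their own version of this utility.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and split them into (train, test) lists."""
    rng = random.Random(seed)        # fixed seed keeps the split reproducible
    shuffled = list(rows)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


train, test = train_test_split(range(20))
print(len(train), len(test))
```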

- 14. Basics: Applications of Data Science: Image recognition and speech recognition: Data science is currently used for image and speech recognition. When you upload an image on Facebook and get suggestions to tag your friends, that automatic tagging suggestion uses an image recognition algorithm, which is part of data science. When you speak to "Ok Google", Siri, Cortana, etc., and the device responds to your voice, this is made possible by speech recognition algorithms. Gaming world: In the gaming world, the use of machine learning algorithms is increasing day by day. EA Sports, Sony, and Nintendo widely use data science to enhance the user experience. Internet search: When we want to search for something on the internet, we use search engines such as Google, Yahoo, Bing, Ask, etc. All these search engines use data science to improve the search experience, returning results in a fraction of a second.

- 15. Basics: Transport: The transport industry also uses data science to create self-driving cars, which are expected to help reduce the number of road accidents. Healthcare: In the healthcare sector, data science provides many benefits. It is used for tumor detection, drug discovery, medical image analysis, virtual medical bots, etc. Recommendation systems: Many companies, such as Amazon, Netflix, Google Play, etc., use data science to improve the user experience with personalized recommendations. For example, when you search for something on Amazon and start getting suggestions for similar products, that is data science at work. Risk detection: The finance industry has always faced fraud and the risk of losses, and data science can help reduce both. Most finance companies look for data scientists to help avoid risk and losses while increasing customer satisfaction.

- 17. Resume Preparation: • Make a great first impression. By customizing your resume for the role you are applying to, you can tell the hiring manager why you’re perfect for it. This will make a good first impression, setting you up for success in your interview. • Stand apart from the crowd. Recruiters skim through hundreds of resumes on any given day. With a clear and impactful data scientist resume, you can differentiate yourself from the crowd. By highlighting your unique combination of skills and experience, you can make an impression beyond the standard checklist of technical skills alone. • Drive the interview conversation. Hiring managers and interviewers often bring your resume to the interview, asking questions based on it. By including the right information in your resume, you can drive the conversation. • Negotiate competitive pay. While a resume might not have a direct impact on the pay, it plays the role of a single source of truth for your qualifications. By including all relevant skills and experience, you can make sure that the offer is reflective of your value to the employer.

- 18. Resume Preparation: What Should You Include in Your Data Science Resume? Name and Contact Information: Once the recruiter has seen your resume and you’re shortlisted, they will want to contact you. To make this seamless, include your contact information clearly and prominently. But remember that this is simply functional information, so keep it concise and double-check that it’s accurate. Include: • Name • Email ID • Phone number • LinkedIn, portfolio, or GitHub profiles, if any

- 19. Resume Preparation: What Should You Include in Your Data Science Resume? Career Objective/Summary: This is often the first section in any resume. For a fresh graduate without much professional experience, the career objective section indicates what you would like to accomplish at the job you’re applying to. On the other hand, if you have some experience, it is better to include a personal profile summarizing your skills and experience. A few things to keep in mind while writing your career objective/summary: • Use this section to narrate your professional story, so paragraphs with complete sentences work better than a bulleted list • Mention the years of experience you have • Provide information on the industry, function, and roles you have worked in

- 20. Resume Preparation: What Should You Include in Your Data Science Resume? While creating your resume, it is sometimes better to write the summary section last. Writing the rest of your data scientist resume first will help you home in on the right summary. Also, remember to customize your summary for each job you apply to. Not all jobs are the same, so your summary should reflect what you can do for the particular role you’re applying to. Work Experience: Data science is a practical field, so work experience matters more in data science jobs than theoretical knowledge. Therefore, this is the most crucial part of your resume. If you are a fresh graduate, make sure to include any internships, personal projects, or open-source contributions you might have.

- 21. Resume Preparation: What Should You Include in Your Data Science Resume? If you’re an experienced data scientist, spend enough time to tell your professional story clearly: o List your work experience in reverse chronological order, with the most recent work at the top o Indicate your designation, the name of the company, and the work period o Write 1-2 lines about what you were responsible for o Include the tasks you performed on a regular basis o Demonstrate outcomes: if you have produced quantifiable results, be sure to include them. For instance: “I built a production prediction engine in Python that helped reduce crude oil profit loss by 22%” o Add accomplishments like awards and recognitions, if any. Layout-wise, keep this section consistent. For instance, if you use bullets to list your tasks, use them uniformly across all your job titles.

- 22. Resume Preparation: Projects: Giving your hiring manager a peek into the work you’ve done is a great way to demonstrate your capabilities. The projects section can be used for that. While deciding which of your projects to include in your resume, consider the following: • Relevance. You might have worked on several projects, but the most valuable are the ones that are relevant to the role that you’re applying to. So, pick the most relevant 2-3 projects you’ve worked on. • Write a summary. Write 1-2 lines about the business context and your work. It helps to show that you know how to use technical skills to achieve business outcomes. • Show technical expertise. Also include a short list of the tools, technologies, and processes you used to complete the project. You can also write a detailed case study of your projects on a blog or Medium and link it here.

- 23. Resume Preparation: Skills: The first person to see your resume is often a recruiter who might not have the technical skills to evaluate it. So, they typically try to match every resume to the job description to identify whether the candidate has the necessary skills. Some organizations also use an applicant tracking system (ATS) to automate the screening. Therefore, it is important that your resume lists the skills the job description demands. • Keep it short • Include all the skills you have that the job description demands • Even if you have mentioned a skill in the experience or summary section, repeat it here. Education: Keep this section concise and clear. • List post-secondary degrees in your education section (i.e., community college, college, and graduate degrees) • Include the year of graduation • If you’re a fresh graduate, you can mention subjects you’ve studied that are relevant to the job you’re applying to • If you have a certification or have completed an online course in data science or related subjects, make sure to include it as well

- 24. Resume Preparation: Senior Data Scientist Resume Examples. What To Include: As a senior data scientist with experience, you would be aiming for a position with more responsibility, such as data science manager. This demands a customized and confident resume. o Customize the resume for the job you’re applying to: highlight relevant skills and experience, and mirror the job description o Focus on responsibilities and accomplishments instead of tasks o Include business outcomes you’ve produced with your work o Present case studies of your key projects. Why is this resume good? • The information is organized in a clear and concise manner, giving the entire view of the candidate’s career without overwhelming the reader • Each job has quantifiable outcomes, demonstrating the business acumen of the candidate • It also subtly hints at leadership skills by mentioning the responsibilities taken in coaching and leading teams

- 25. Resume Preparation: Senior Data Scientist Resume Examples

- 26. Basic Interview QnA: 1. What is Data Science? Data science is a combination of algorithms, tools, and machine learning techniques that helps you find hidden patterns in raw data. 2. What is logistic regression in Data Science? Logistic regression is also called the logit model. It is a method for predicting a binary outcome from a linear combination of predictor variables. 3. Name three types of biases that can occur during sampling. In the sampling process, there are three types of bias: • Selection bias • Undercoverage bias • Survivorship bias 4. Discuss the Decision Tree algorithm. A decision tree is a popular supervised machine learning algorithm. It is mainly used for regression and classification. It breaks a dataset down into smaller and smaller subsets, and it can handle both categorical and numerical data.
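
To make the logistic regression answer concrete, the sketch below turns a linear combination of predictor variables into a probability with the sigmoid function and then into a binary prediction. The weights, bias, feature values, and the 0.5 cutoff are invented for illustration; in practice the weights are learned from training data.

```python
import math


def sigmoid(z):
    """Map any real number to the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(features, weights, bias):
    """Probability of the positive class from a linear combination of the predictors."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def predict_label(features, weights, bias, cutoff=0.5):
    """Binary outcome: 1 if the probability reaches the cutoff, else 0."""
    return 1 if predict_probability(features, weights, bias) >= cutoff else 0


# Hypothetical model with two predictors.
weights, bias = [1.0, 1.0], -1.0
print(predict_probability([3.0, 1.0], weights, bias))
print(predict_label([3.0, 1.0], weights, bias))
```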

- 27. Basic Interview QnA: 5. What are prior probability and likelihood? Prior probability is the proportion of each class of the dependent variable in the data set, while likelihood is the probability of classifying a given observation in the presence of some other variable. 6. Explain recommender systems. A recommender system is a subclass of information filtering techniques. It helps you predict the preferences or ratings that users are likely to give to a product. 7. Name three disadvantages of using a linear model. Three disadvantages of the linear model are: • It assumes linearity of the errors. • It can’t be used for binary or count outcomes. • There are plenty of overfitting problems that it can’t solve.

- 28. Basic Interview QnA: 8. Why do you need to perform resampling? Resampling is done in the following cases: • Estimating the accuracy of sample statistics by drawing randomly with replacement from a set of data points, or by using subsets of the accessible data • Substituting labels on data points when performing significance tests • Validating models by using random subsets 9. List the libraries in Python used for data analysis and scientific computation. • SciPy • Pandas • Matplotlib • NumPy • scikit-learn • Seaborn 10. What is power analysis? Power analysis is an integral part of experimental design. It helps you determine the sample size required to detect an effect of a given size with a specific level of confidence. It also lets you determine the probability of detecting an effect under a sample size constraint.
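
The first resampling use case, drawing randomly with replacement to estimate the accuracy of a sample statistic, is commonly called the bootstrap. The sketch below estimates how much a sample mean varies. The function name, the number of resamples, and the data values are assumptions for this example.

```python
import random
from statistics import mean, stdev


def bootstrap_means(sample, n_resamples=1000, seed=0):
    """Means of resamples drawn with replacement, each the same size as the sample."""
    rng = random.Random(seed)
    n = len(sample)
    return [mean(rng.choices(sample, k=n)) for _ in range(n_resamples)]


sample = [2.1, 2.5, 1.9, 2.8, 2.4, 2.2, 2.6, 2.0]   # made-up measurements
means = bootstrap_means(sample)
# The spread of the resampled means approximates the standard error of the mean.
print(round(mean(means), 2), round(stdev(means), 2))
```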

- 29. Basic Interview QnA: 11. Explain collaborative filtering. Collaborative filtering is used to search for patterns by combining viewpoints, multiple data sources, and various agents. 12. What is bias? Bias is an error introduced into your model by the oversimplification of a machine learning algorithm. It can lead to under-fitting. 13. Discuss ‘Naive’ in a Naive Bayes algorithm. The Naive Bayes algorithm is based on Bayes’ theorem, which describes the probability of an event based on prior knowledge of conditions that might be related to that event. It is called ‘naive’ because it assumes that the features are independent of one another given the class, which is rarely true in real data. 14. What is linear regression? Linear regression is a statistical method in which the score of a variable ‘A’ is predicted from the score of a second variable ‘B’. B is referred to as the predictor variable and A as the criterion variable. 15. State the difference between the expected value and the mean value. There are not many differences, but the terms are used in different contexts. Mean value is generally used when discussing a probability distribution, whereas expected value is used in the context of a random variable.
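
For the linear regression answer, a minimal sketch of fitting a straight line by ordinary least squares is shown below, with B as the predictor and A as the criterion variable. The function name `fit_line` and the data points are assumptions for illustration; the data was chosen to lie exactly on the line A = 2B + 1.

```python
from statistics import mean


def fit_line(b_values, a_values):
    """Least-squares slope and intercept for predicting A from B."""
    b_bar, a_bar = mean(b_values), mean(a_values)
    s_ba = sum((b - b_bar) * (a - a_bar) for b, a in zip(b_values, a_values))
    s_bb = sum((b - b_bar) ** 2 for b in b_values)
    slope = s_ba / s_bb
    intercept = a_bar - slope * b_bar
    return slope, intercept


b_values = [1, 2, 3, 4]        # predictor variable B
a_values = [3, 5, 7, 9]        # criterion variable A
slope, intercept = fit_line(b_values, a_values)
predicted_a = slope * 5 + intercept   # prediction for a new value B = 5
print(slope, intercept, predicted_a)
```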

- 31. Basic Interview QnA: 16. What is the aim of conducting A/B testing? A/B testing is a randomized experiment with two variants, A and B. The goal of this testing method is to identify which changes to a web page maximize or increase an outcome of interest. 17. What is ensemble learning? Ensemble learning is a method of combining a diverse set of learners to improve the stability and predictive power of the model. Two types of ensemble learning methods are: Bagging: Bagging trains similar learners on small sample populations and combines their outputs, which helps you make more accurate predictions. Boosting: Boosting is an iterative method that adjusts the weight of each observation depending on the last classification. Boosting decreases the bias error and helps you build strong predictive models. 18. Explain eigenvalue and eigenvector. Eigenvectors help in understanding linear transformations. Data scientists usually calculate the eigenvectors of a covariance or correlation matrix. Eigenvectors are the directions along which a specific linear transformation acts by compressing, flipping, or stretching, and the corresponding eigenvalues are the factors by which it does so.
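
As a small worked example of eigenvalues and eigenvectors, the sketch below handles only the special case of a symmetric 2x2 matrix, such as a covariance matrix of two variables, where a closed-form solution exists. The function name and the example matrix are assumptions; for general matrices a numerical library such as NumPy would normally be used.

```python
import math


def eigen_2x2_symmetric(a, b, d):
    """Eigenvalues and unit eigenvectors of the symmetric matrix [[a, b], [b, d]]."""
    if b == 0:
        # Already diagonal: the coordinate axes are the eigenvectors.
        return [(a, (1.0, 0.0)), (d, (0.0, 1.0))]
    mean_diag = (a + d) / 2.0
    radius = math.sqrt(((a - d) / 2.0) ** 2 + b ** 2)
    pairs = []
    for lam in (mean_diag + radius, mean_diag - radius):
        v = (b, lam - a)                 # solves (A - lam * I) v = 0
        norm = math.hypot(v[0], v[1])
        pairs.append((lam, (v[0] / norm, v[1] / norm)))
    return pairs


# Hypothetical covariance matrix [[2, 1], [1, 2]].
for lam, (x, y) in eigen_2x2_symmetric(2.0, 1.0, 2.0):
    transformed = (2.0 * x + 1.0 * y, 1.0 * x + 2.0 * y)   # A applied to v
    print(lam, (x, y), transformed)
```

Applying the matrix to each eigenvector only rescales it by the eigenvalue, without changing its direction.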

- 32. Basic Interview QnA: 19. Define the term cross-validation. Cross-validation is a validation technique for evaluating how the outcomes of a statistical analysis will generalize to an independent dataset. It is used in settings where the objective is prediction and one needs to estimate how accurately a model will perform in practice. 20. Explain the steps for a data analytics project. The following are the important steps involved in an analytics project: • Understand the business problem. • Explore the data and study it carefully. • Prepare the data for modeling by finding missing values and transforming variables. • Run the model and analyze the results. • Validate the model with a new data set. • Implement the model and track the results to analyze its performance over a specific period. 21. Discuss artificial neural networks. Artificial neural networks (ANNs) are a special set of algorithms that have revolutionized machine learning. They adapt to changing input, so the network generates the best possible result without the output criteria having to be redesigned.
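
One common form of cross-validation is k-fold cross-validation, where the data is split into k parts and each part serves once as the held-out validation set. The sketch below only generates the index splits; the function name `k_fold_indices` is an assumption for this example, and training and scoring a model on each split is left out.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


for train, validation in k_fold_indices(10, 3):
    print(train, validation)
```

Averaging the model's score over the k validation folds gives an estimate of how it would perform on independent data.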

- 33. Basic Interview QnA: 22. What is back-propagation? Back-propagation is the essence of neural net training. It is the method of tuning the weights of a neural net based on the error rate obtained in the previous epoch. Proper tuning of the weights helps you reduce error rates and make the model reliable by increasing its generalization. 23. What is a random forest? Random forest is a machine learning method that can perform both regression and classification tasks. It is also used for treating missing values and outlier values. 24. What is selection bias, and why does it matter? Selection bias occurs when proper randomization is not achieved while picking the individuals, groups, or data to be analyzed. It means that the sample does not accurately represent the population that was intended to be analyzed. 25. What is the K-means clustering method? K-means clustering is an important unsupervised learning method. It is the technique of partitioning data into a chosen number of clusters, K. It is used to group data points by similarity. 26. Explain the difference between data science and data analytics. Data scientists slice data to extract valuable insights that a data analyst can apply to real-world business scenarios. The main difference between the two is that data scientists have more technical knowledge than business analysts. Moreover, they don’t need the business understanding required for data visualization.
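
The K-means idea can be sketched on one-dimensional data: assign each point to its nearest centroid, move each centroid to the mean of its points, and repeat. The function name, the starting centroids, the fixed iteration count, and the data are assumptions for this illustration; real implementations work in many dimensions and choose starting centroids more carefully.

```python
def k_means_1d(points, initial_centroids, n_iter=10):
    """Return (centroids, clusters) after n_iter rounds of assign-and-update."""
    centroids = list(initial_centroids)
    clusters = [[] for _ in centroids]
    for _ in range(n_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]    # two obvious groups, K = 2
centroids, clusters = k_means_1d(points, initial_centroids=[0.0, 5.0])
print(centroids)
print(clusters)
```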

- 34. Basic Interview QnA: 27. Explain p-value. When you conduct a hypothesis test in statistics, a p-value lets you determine the strength of your results. It is a number between 0 and 1: the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis is true. The smaller the p-value, the stronger the evidence against the null hypothesis. 28. Define the term deep learning. Deep learning is a subtype of machine learning. It is concerned with algorithms inspired by the structure of the brain, called artificial neural networks (ANNs). 29. Explain how to collect and analyze social media data to predict weather conditions. You can collect social media data using the APIs of Facebook, Twitter, and Instagram. For example, for Twitter, we can construct features from each tweet, such as the tweet date, the number of retweets, the list of followers, etc. Then you can use a multivariate time series model to predict the weather conditions. 30. When do you need to update the algorithm in data science? You need to update an algorithm in the following situations: • You want your data model to evolve as data streams through the infrastructure • The underlying data source is changing • The data is non-stationary
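
To show how a p-value is obtained in one simple case, the sketch below computes a two-sided p-value for a one-sample z-test. The choice of test, the assumption of a known population standard deviation, and all the numbers are made up for illustration; other tests compute their p-values from different distributions.

```python
import math
from statistics import NormalDist


def z_statistic(sample_mean, null_mean, population_sd, n):
    """Standardized distance between the sample mean and the hypothesized mean."""
    return (sample_mean - null_mean) / (population_sd / math.sqrt(n))


def two_sided_p_value(z):
    """Probability of a z-score at least this extreme under the null hypothesis."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


# Hypothetical experiment: 100 observations with mean 52, null hypothesis mean 50,
# known standard deviation 10.
z = z_statistic(sample_mean=52.0, null_mean=50.0, population_sd=10.0, n=100)
print(z, round(two_sided_p_value(z), 4))
```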

- 35. Basic Interview QnA: 31. What is a normal distribution? A normal distribution describes a continuous variable whose values are spread in the shape of a bell curve. It is a continuous probability distribution that is widely used in statistics, and it is useful for analyzing variables and their relationships. 32. Which language is best for text analytics, R or Python? Python is generally considered more suitable for text analytics because it has rich libraries such as pandas, which provide high-level data analysis tools and data structures that are easy to use. 33. Explain the benefits of statistics for data scientists. Statistics helps data scientists get a better idea of customers’ expectations. Using statistical methods, data scientists can learn about consumer interest, behavior, engagement, retention, etc. Statistics also helps you build powerful data models to validate certain inferences and predictions. 34. Name various types of deep learning frameworks. o PyTorch o Microsoft Cognitive Toolkit o TensorFlow o Caffe o Chainer o Keras

- 36. Basic Interview QnA: 35. Explain auto-encoders. Autoencoders are learning networks that transform inputs into outputs with as few errors as possible. This means that the output is as close to the input as possible. 36. Define Boltzmann machine. A Boltzmann machine has a simple learning algorithm that helps it discover features representing complex regularities in the training data. This algorithm lets you optimize the weights and the quantity for the given problem. 37. Explain why data cleansing is essential and which method you use to maintain clean data. Dirty data often leads to incorrect insights, which can damage the prospects of any organization. For example, suppose you want to run a targeted marketing campaign, but your data incorrectly tells you that a specific product will be in demand with your target audience: the campaign will fail. 38. What are skewed and uniform distributions? A skewed distribution occurs when the data is concentrated on one side of the plot, whereas a uniform distribution occurs when the data is spread equally across the range. 39. When does under-fitting occur in a statistical model? Under-fitting occurs when a statistical model or machine learning algorithm is not able to capture the underlying trend of the data.

- 37. Basic Interview QnA: Data Scientist- Job Material 40. What is reinforcement learning? Reinforcement learning is a learning mechanism for mapping situations to actions so as to maximize a numerical reward signal. The learner is not told which action to take but must instead discover which actions yield the maximum reward, because the method is based on a reward/penalty mechanism. 41. Name commonly used algorithms. The four algorithms most commonly used by data scientists are: Linear regression, Logistic regression, Random Forest, and KNN. 42. What is precision? Precision is one of the most commonly used metrics in classification. It is the fraction of predicted positives that are actually positive, TP / (TP + FP). Its range is from 0 to 1, where 1 represents 100%. 43. What is a univariate analysis? An analysis that is applied to one attribute at a time is known as univariate analysis. The boxplot is a widely used univariate tool.

- 38. Basic Interview QnA: Data Scientist- Job Material 44. How do you overcome challenges to your findings? To overcome challenges to my findings, I encourage discussion, demonstrate leadership, and respect different opinions. 45. Explain cluster sampling technique in Data science The cluster sampling method is used when it is challenging to study a target population that is spread across a wide area and simple random sampling can’t be applied. The population is divided into clusters, and whole clusters are then sampled at random. 46. State the difference between a Validation Set and a Test Set A validation set is mostly considered part of the training data, as it is used for parameter selection, which helps you avoid overfitting the model being built. A test set is used for testing or evaluating the performance of a trained machine learning model. 47. Explain the term Binomial Probability Formula? “The binomial distribution contains the probabilities of every possible number of successes on N trials for independent events that each have a probability π of occurring.” The probability of exactly k successes is $P(X = k) = \binom{N}{k} \pi^{k} (1 - \pi)^{N - k}$.

- 39. Basic Interview QnA: Data Scientist- Job Material 48. What is recall? Recall is the ratio of true positives to all actual positives, TP / (TP + FN). It ranges from 0 to 1. 49. Discuss normal distribution A normal distribution is symmetric about its center, so the mean, median and mode are equal. 50. While working on a data set, how can you select important variables? Explain You can use the following methods of variable selection: Remove correlated variables before selecting important variables. Use linear regression and select variables based on their p-values. Use Backward Selection, Forward Selection, or Stepwise Selection. Use XGBoost or Random Forest and plot a variable importance chart. Measure information gain for the given set of features and select the top n features accordingly. 51. Is it possible to capture the correlation between continuous and categorical variable? Yes, we can use the analysis of covariance (ANCOVA) technique to capture the association between continuous and categorical variables. 52. Would treating a categorical variable as a continuous variable result in a better predictive model? Only when the variable is ordinal in nature. A categorical variable should be treated as continuous only if its categories have a meaningful order; in that case it can yield a better predictive model.
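
The precision and recall definitions in the Q&A above can be checked with a few lines of Python. This is a minimal sketch, assuming binary labels where 1 is the positive class; the function name `precision_recall` and the label lists are made up for illustration.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical labels, not taken from any real dataset.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")
```

Here there are 3 true positives, 1 false positive and 1 false negative, so both metrics come out at 0.75.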

- 40. Math Interview QnA: Data Scientist- Job Material Q: When should you use a t-test vs a z-test? A Z-test is a hypothesis test with a normal distribution that uses a z-statistic. A z-test is used when you know the population variance or if you don’t know the population variance but have a large sample size. A T-test is a hypothesis test with a t-distribution that uses a t-statistic. You would use a t-test when you don’t know the population variance and have a small sample size. You can see the image below as a reference to guide which test you should use:

- 41. Math Interview QnA: Data Scientist- Job Material Q: How would you describe what a ‘p-value’ is to a non-technical person? The best way to describe the p-value in simple terms is with an example. In practice, if the p-value is less than the alpha, say 0.05, then we’re saying that, if there were really no effect, a result at least this extreme would happen by chance less than 5% of the time. Similarly, a p-value of 0.05 is the same as saying “if there were no real effect, we would see a result like this by chance 5% of the time.”
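
To make the z-test and p-value discussion concrete, here is a small sketch of a two-sided one-sample z-test using only the standard library. It assumes the population standard deviation is known (the z-test case described earlier); the function name `one_sample_z_test` and all the numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z_test(sample_mean, pop_mean, pop_sd, n):
    """Two-sided one-sample z-test; returns (z statistic, p-value)."""
    z = (sample_mean - pop_mean) / (pop_sd / sqrt(n))
    # Probability of a result at least this extreme if the null hypothesis is true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical numbers: 100 observations averaging 103 against a claimed mean of 100.
z, p_value = one_sample_z_test(sample_mean=103, pop_mean=100, pop_sd=15, n=100)
alpha = 0.05
print(f"z = {z:.2f}, p-value = {p_value:.4f}, reject null: {p_value < alpha}")
```

With these inputs the z-statistic is 2.0 and the p-value is a little under 0.05, so the null hypothesis would be rejected at alpha = 0.05.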

- 42. Math Interview QnA: Data Scientist- Job Material Q: What is cherry-picking, P-hacking, and significance chasing? Cherry picking refers to the practice of only selecting data or information that supports one’s desired conclusion. P-hacking refers to manipulating data collection or analysis until non-significant results become significant. This includes deciding mid-test to not collect any more data. Significance chasing refers to when a researcher reports insignificant results as if they’re “almost” significant. Q: What is the assumption of normality? The assumption of normality is that the sampling distribution is normal and centers around the population parameter, according to the central limit theorem. Q: What is the central limit theorem and why is it so important? The central limit theorem is very powerful: it states that the distribution of sample means approximates a normal distribution as the sample size grows, even when the original data is not normally distributed. To give an example, you would take a sample from a data set and calculate the mean of that sample. Once repeated multiple times, you would plot all your means and their frequencies onto a graph and see that a bell curve, also known as a normal distribution, has been created. The mean of this distribution will closely resemble that of the original data. The central limit theorem is important because it is used in hypothesis testing and also to calculate confidence intervals.
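
The sampling experiment described for the central limit theorem can be simulated directly. The sketch below assumes an exponential population (chosen only because it is clearly not bell-shaped), a sample size of 50 and 2,000 repetitions; all of these are illustrative choices, not values from the slides.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

# A deliberately non-normal population: exponential with mean 1.0.
population = [random.expovariate(1.0) for _ in range(50_000)]


def sample_means(data, sample_size, n_samples):
    """Draw n_samples random samples and return the mean of each one."""
    return [
        statistics.fmean(random.sample(data, sample_size))
        for _ in range(n_samples)
    ]


means = sample_means(population, sample_size=50, n_samples=2_000)

pop_mean = statistics.fmean(population)
pop_sd = statistics.pstdev(population)
mean_of_means = statistics.fmean(means)
sd_of_means = statistics.stdev(means)

print(f"population mean      : {pop_mean:.3f}")
print(f"mean of sample means : {mean_of_means:.3f}")
print(f"sd of sample means   : {sd_of_means:.3f}")
print(f"pop sd / sqrt(50)    : {pop_sd / 50 ** 0.5:.3f}")
```

The mean of the sample means should land close to the population mean, and their spread should be close to the population standard deviation divided by the square root of the sample size, which is the relationship used when building confidence intervals.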

- 43. Math Interview QnA: Data Scientist- Job Material Q: What is the empirical rule? The empirical rule states that if a dataset is normally distributed, about 68% of the data will fall within one standard deviation of the mean, 95% within two standard deviations, and 99.7% within three standard deviations. Q: What general conditions must be satisfied for the central limit theorem to hold? The data must be sampled randomly. The sample values must be independent of each other. The sample size must be sufficiently large; as a rule of thumb, it should be greater than or equal to 30. Q: What is the difference between a combination and a permutation? A permutation of n elements is any arrangement of those n elements in a definite order. There are n factorial (n!) ways to arrange n elements. Note that order matters! The number of permutations of n things taken r-at-a-time is defined as the number of r-tuples that can be taken from n different elements and is equal to the following equation: $P(n, r) = \frac{n!}{(n - r)!}$

- 44. Math Interview QnA: Data Scientist- Job Material On the other hand, combinations refer to the number of ways to choose r out of n objects where order doesn’t matter. The number of combinations of n things taken r-at-a-time is defined as the number of subsets with r elements of a set with n elements and is equal to the following equation: $C(n, r) = \frac{n!}{r!\,(n - r)!}$ Q: How many permutations does a license plate have with 6 digits? Assuming each of the 6 positions can hold any digit from 0 to 9 and digits may repeat, there are $10^6 = 1{,}000{,}000$ possible plates.
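
The permutation and combination counts can be computed with Python's `math.perm` and `math.comb` (available from Python 3.8). This is a small sketch with n = 5 and r = 2 chosen arbitrarily; the license-plate line assumes that each of the 6 positions can be any digit 0 to 9 with repetition allowed.

```python
import math

n, r = 5, 2

# Ordered selections: n! / (n - r)!
permutations = math.perm(n, r)

# Unordered selections: n! / (r! * (n - r)!)
combinations = math.comb(n, r)

# License plate with 6 digit positions, digits 0-9, repetition allowed (assumption).
plates = 10 ** 6

print(permutations, combinations, plates)
```

Each combination of 2 items corresponds to 2! = 2 orderings, which is why the permutation count is twice the combination count here.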

- 45. Math Interview QnA: Data Scientist- Job Material Q: How are confidence intervals and hypothesis tests similar? How are they different? Confidence intervals and hypothesis testing are both tools used to make statistical inferences. A confidence interval suggests a range of values for an unknown parameter and is associated with a confidence level that the true parameter is within the suggested range. Confidence intervals are often very important in medical research to provide researchers with a stronger basis for their estimations. A confidence interval can be shown as “10 +/- 0.5” or [9.5, 10.5], to give an example. Hypothesis testing is the basis of any research question and often comes down to trying to show that something did not happen by chance. For example, you could try to show that, when rolling a die, one number was more likely to come up than the rest.

- 46. Math Interview QnA: Data Scientist- Job Material Q: What is the difference between observational and experimental data? Observational data comes from observational studies, in which you observe certain variables and try to determine if there is any correlation. Experimental data comes from experimental studies, in which you control certain variables and hold them constant to determine if there is any causality. An example of experimental design is the following: split a group up into two. The control group lives their lives normally. The test group is told to drink a glass of wine every night for 30 days. Then research can be conducted to see how wine affects sleep. Q: Give some examples of some random sampling techniques Simple random sampling requires using randomly generated numbers to choose a sample. More specifically, it initially requires a sampling frame, a list or database of all members of a population. You can then randomly generate a number for each element, using Excel for example, sort by that number, and take the first n samples that you require. Systematic sampling can be even easier to do: you simply take one element from your sampling frame, skip a predefined number of elements (n), and then take your next element. Going back to our example, you could take every fourth name on the list.

- 47. Math Interview QnA: Data Scientist- Job Material Cluster sampling starts by dividing a population into groups, or clusters. What makes this different from stratified sampling is that each cluster should be representative of the population. Then, you randomly select entire clusters to sample. For example, if an elementary school had five different grade eight classes, cluster random sampling might be used and only one class would be chosen as a sample. Stratified random sampling starts off by dividing a population into groups with similar attributes. Then a random sample is taken from each group. This method is used to ensure that different segments in a population are equally represented. To give an example, imagine a survey is conducted at a school to determine overall satisfaction. It might make sense here to use stratified random sampling to equally represent the opinions of students in each department.
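
The four sampling techniques described above can be sketched with the standard `random` module. The sampling frame below (20 students in 4 classes) and all function names are hypothetical, made up only to show the mechanics.

```python
import random

random.seed(42)  # fixed seed so the run is repeatable

# Hypothetical sampling frame: 20 students, 5 in each of classes A-D.
frame = [{"id": i, "class": "ABCD"[i // 5]} for i in range(20)]


def simple_random_sample(frame, n):
    """Every member has the same chance of being picked."""
    return random.sample(frame, n)


def systematic_sample(frame, step, start=0):
    """Take every step-th member, beginning at position start."""
    return frame[start::step]


def cluster_sample(frame, key, n_clusters):
    """Randomly pick whole clusters and keep every member of them."""
    clusters = sorted({row[key] for row in frame})
    chosen = set(random.sample(clusters, n_clusters))
    return [row for row in frame if row[key] in chosen]


def stratified_sample(frame, key, n_per_stratum):
    """Take a random sample of the same size from every stratum."""
    strata = {}
    for row in frame:
        strata.setdefault(row[key], []).append(row)
    sample = []
    for rows in strata.values():
        sample.extend(random.sample(rows, n_per_stratum))
    return sample


print([row["id"] for row in systematic_sample(frame, step=4)])
print(sorted({row["class"] for row in cluster_sample(frame, "class", 1)}))
print(len(stratified_sample(frame, "class", 2)))
```

Note the difference in what is randomized: cluster sampling picks whole groups at random, while stratified sampling picks individuals at random inside every group.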

- 48. Math Interview QnA: Data Scientist- Job Material Q: What is the difference between type 1 error and type 2 error? A type 1 error is when you incorrectly reject a true null hypothesis. It’s also called a false positive. A type 2 error is when you don’t reject a false null hypothesis. It’s also called a false negative. Q: What is the power of a test? What are two ways to increase the power of a test? The power of a test is the probability of rejecting the null hypothesis when it’s false. It’s also equal to 1 minus beta, the probability of a type 2 error. To increase the power of the test, you can do two things: You can increase alpha, but this also increases the chance of a type 1 error. You can increase the sample size, n. This maintains the type 1 error rate but reduces the type 2 error rate. Q: What is the Law of Large Numbers? The Law of Large Numbers is a theorem that states that as the number of trials increases, the average of the results will become closer to the expected value. E.g. the proportion of heads when flipping a fair coin 100,000 times should be closer to 0.5 than when flipping it 100 times. Q: What is the Pareto principle? The Pareto principle, also known as the 80/20 rule, states that roughly 80% of the effects come from 20% of the causes. E.g. 80% of sales come from 20% of customers.
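
The coin-flip example for the Law of Large Numbers is easy to simulate. This is a rough sketch using a seeded pseudo-random generator; the function name `heads_proportion` is made up, and individual runs with a different seed will give slightly different proportions.

```python
import random

random.seed(1)  # fixed seed so the run is repeatable


def heads_proportion(n_flips):
    """Simulate n_flips fair coin flips and return the share that came up heads."""
    heads = sum(random.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips


for n in (100, 10_000, 100_000):
    print(f"{n:>7} flips -> proportion of heads = {heads_proportion(n):.4f}")
```

As the number of flips grows, the proportion tends to settle closer to the expected value of 0.5, although any single short run can still land near 0.5 by luck.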

- 49. Math Interview QnA: Data Scientist- Job Material Q: What is a confounding variable? A confounding variable, or a confounder, is a variable that influences both the dependent variable and the independent variable, causing a spurious association, a mathematical relationship in which two or more variables are associated but not causally related. Q: What are the assumptions required for linear regression? There are four major assumptions: (1) There is a linear relationship between the dependent variable and the regressors, meaning the model you are creating actually fits the data. (2) The errors or residuals of the data are normally distributed and independent from each other. (3) There is minimal multicollinearity between explanatory variables. (4) Homoscedasticity: the variance around the regression line is the same for all values of the predictor variable. Q: What do interpolation and extrapolation mean? Which is generally more accurate? Interpolation is a prediction made using inputs that lie within the set of observed values. Extrapolation is when a prediction is made using an input that’s outside the set of observed values. Generally, interpolations are more accurate.

- 50. Math Interview QnA: Data Scientist- Job Material Q: What does autocorrelation mean? Autocorrelation is when a series is correlated with lagged versions of itself, so that future outcomes depend on previous outcomes. When there is autocorrelation, the errors show a sequential pattern and the model is less accurate. Q: When you sample, what potential biases can you be introducing? Potential biases include the following: Sampling bias: a biased sample caused by non-random sampling. Undercoverage bias: some members of the population are inadequately represented in the sample. Survivorship bias: the error of overlooking observations that did not make it past some form of selection process. Q: What is an outlier? Explain how you might screen for outliers and what would you do if you found them in your dataset. An outlier is a data point that differs significantly from other observations. Depending on the cause of the outlier, it can be bad from a machine learning perspective because it can worsen the accuracy of a model. If the outlier is caused by a measurement error, it’s important to remove it from the dataset.
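
The slide does not say how to screen for outliers, so the following is only one common approach, not the author's stated method: the interquartile range (IQR) rule, which flags points more than 1.5 × IQR outside the quartiles. The function name `iqr_outliers` and the data are hypothetical.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical measurements with one suspicious reading.
readings = [10, 12, 11, 13, 12, 11, 10, 95]
print(iqr_outliers(readings))
```

Whether a flagged point is then removed, corrected or kept depends on its cause, as the answer above notes for measurement errors.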

- 51. Math Interview QnA: Data Scientist- Job Material Q: What is an inlier? An inlier is a data observation that lies within the range of the rest of the dataset but is unusual or an error. Since it lies within the dataset, it is typically harder to identify than an outlier and requires external data to identify. Should you identify any inliers, you can simply remove them from the dataset to address them. Q: You toss a biased coin (p(head)=0.8) five times. What’s the probability of getting three or more heads? Use the General Binomial Probability formula to answer this question: p = 0.8, n = 5, k = 3, 4, 5. P(3 or more heads) = P(3 heads) + P(4 heads) + P(5 heads) = 0.2048 + 0.4096 + 0.3277 = 0.94 or 94%
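
The biased-coin answer can be reproduced with the binomial probability formula in a few lines. This is a minimal sketch; the helper name `binomial_pmf` is made up for illustration.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials with success chance p."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


# P(3 or more heads) for 5 tosses of a coin with p(head) = 0.8.
p_three_or_more = sum(binomial_pmf(k, n=5, p=0.8) for k in (3, 4, 5))
print(f"{p_three_or_more:.4f}")
```

The three terms are about 0.2048, 0.4096 and 0.3277, which add up to roughly 0.94.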

- 52. Math Interview QnA: Data Scientist- Job Material Q: A random variable X is normal with mean 1020 and a standard deviation 50. Calculate P(X>1200) Using Excel… p = 1 - NORM.DIST(1200, 1020, 50, TRUE) p = 0.000159 Q: Suppose the number of people that show up at a bus station is Poisson with mean 2.5/h. What is the probability that at most three people show up in a four hour period? x = 3 mean = 2.5*4 = 10 Using Excel… p = POISSON.DIST(3, 10, TRUE) p = 0.010336 Q: Suppose that diastolic blood pressures (DBPs) for men aged 35–44 are normally distributed with a mean of 80 (mm Hg) and a standard deviation of 10. About what is the probability that a random 35–44 year old has a DBP less than 70? Since 70 is one standard deviation below the mean, take the area of the Gaussian distribution to the left of one standard deviation below the mean: 2.3% + 13.6% = 15.9%
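
The same three answers can be reproduced in Python instead of Excel. This sketch uses `statistics.NormalDist` for the normal probabilities and a hand-written Poisson CDF (the helper name `poisson_cdf` is an assumption, since the standard library has no Poisson distribution).

```python
from math import exp, factorial
from statistics import NormalDist

# P(X > 1200) for X ~ Normal(mean=1020, sd=50)
p_x_gt_1200 = 1 - NormalDist(mu=1020, sigma=50).cdf(1200)


def poisson_cdf(k, mean):
    """P(X <= k) for a Poisson random variable with the given mean."""
    return sum(exp(-mean) * mean ** i / factorial(i) for i in range(k + 1))


# At most 3 arrivals in 4 hours at 2.5 arrivals per hour (mean = 10).
p_at_most_3 = poisson_cdf(3, 2.5 * 4)

# P(DBP < 70) for DBP ~ Normal(mean=80, sd=10)
p_dbp_lt_70 = NormalDist(mu=80, sigma=10).cdf(70)

print(f"{p_x_gt_1200:.6f}  {p_at_most_3:.6f}  {p_dbp_lt_70:.3f}")
```

The results agree with the Excel values of about 0.000159, 0.010336 and 15.9%.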

- 53. Math Interview QnA: Data Scientist- Job Material Q: Give an example where the median is a better measure than the mean The median is a better measure when there are a number of outliers that positively or negatively skew the data, for example household incomes, where a few very large values pull the mean upward. Q: Given two fair dice, what is the probability of getting scores that sum to 4? to 8? There are 3 combinations that sum to 4 (1+3, 3+1, 2+2): P(rolling a 4) = 3/36 = 1/12. There are 5 combinations that sum to 8 (2+6, 6+2, 3+5, 5+3, 4+4): P(rolling an 8) = 5/36. Q: If a distribution is skewed to the right and has a median of 30, will the mean be greater than or less than 30? If the given distribution is a right-skewed distribution, then the mean should be greater than 30, while the mode remains less than 30.
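
The two-dice probabilities can be verified by enumerating all 36 equally likely outcomes. This is a small sketch; the helper name `prob_sum` is made up for illustration.

```python
from fractions import Fraction
from itertools import product

# All ordered outcomes of rolling two fair six-sided dice.
rolls = list(product(range(1, 7), repeat=2))


def prob_sum(target):
    """Exact probability that the two dice add up to target."""
    favorable = [roll for roll in rolls if sum(roll) == target]
    return Fraction(len(favorable), len(rolls))


print(prob_sum(4), prob_sum(8))
```

Counting ordered outcomes matters here: 1+3 and 3+1 are separate outcomes, while 2+2 can occur only one way.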

- 54. SQL Interview QnA: Data Scientist- Job Material MySQL Create Table Example Below is a MySQL example to create a table in a database: CREATE TABLE IF NOT EXISTS `MyFlixDB`.`Members` ( `membership_number` INT AUTO_INCREMENT , `full_names` VARCHAR(150) NOT NULL , `gender` VARCHAR(6) , `date_of_birth` DATE , `physical_address` VARCHAR(255) , `postal_address` VARCHAR(255) , `contact_number` VARCHAR(75) , `email` VARCHAR(255) , PRIMARY KEY (`membership_number`) ) ENGINE = InnoDB;

- 55. SQL Interview QnA: Data Scientist- Job Material Let’s see a query for creating a table with columns of all data types. Study it and identify how each data type is defined in the create table MySQL example below. CREATE TABLE `all_data_types` ( `varchar` VARCHAR( 20 ) , `tinyint` TINYINT , `text` TEXT , `date` DATE , `smallint` SMALLINT , `mediumint` MEDIUMINT , `int` INT , `bigint` BIGINT , `float` FLOAT( 10, 2 ) , `double` DOUBLE , `decimal` DECIMAL( 10, 2 ) , `datetime` DATETIME , `timestamp` TIMESTAMP , `time` TIME , `year` YEAR , `char` CHAR( 10 ) , `tinyblob` TINYBLOB , `tinytext` TINYTEXT , `blob` BLOB , `mediumblob` MEDIUMBLOB , `mediumtext` MEDIUMTEXT , `longblob` LONGBLOB , `longtext` LONGTEXT , `enum` ENUM( '1', '2', '3' ) , `set` SET( '1', '2', '3' ) , `bool` BOOL , `binary` BINARY( 20 ) , `varbinary` VARBINARY( 20 ) ) ENGINE = MYISAM;

- 56. SQL Interview QnA: Data Scientist- Job Material 1. What is DBMS? A Database Management System (DBMS) is a program that controls the creation, maintenance and use of a database. A DBMS can be thought of as a file manager that manages data in a database rather than saving it in file systems. 2. What is RDBMS? RDBMS stands for Relational Database Management System. An RDBMS stores data in a collection of tables, which are related by common fields between the columns of the tables. It also provides relational operators to manipulate the data stored in the tables. Example: SQL Server. 3. What is SQL? SQL stands for Structured Query Language, and it is used to communicate with the database. It is a standard language used to perform tasks such as retrieving, updating, inserting and deleting data in a database. Standard SQL commands include SELECT, INSERT, UPDATE and DELETE.

- 57. SQL Interview QnA: Data Scientist- Job Material 4. What is a Database? A database is an organized collection of data for easy access, storage, retrieval and management. It is also known as a structured form of data that can be accessed in many ways. Example: School Management Database, Bank Management Database. 5. What are tables and Fields? A table is a set of data organized in a model with columns and rows. Columns are vertical and rows are horizontal. A table has a specified number of columns, called fields, but can have any number of rows, each of which is called a record. Example: Table: Employee. Field: Emp ID, Emp Name, Date of Birth. Data: 201456, David, 11/15/1960.

- 58. SQL Interview QnA: Data Scientist- Job Material 6. What is a primary key? A primary key is a field or combination of fields that uniquely identifies a row. It is a special kind of unique key with an implicit NOT NULL constraint, which means primary key values cannot be NULL. 7. What is a unique key? A unique key constraint uniquely identifies each record in the table. It enforces uniqueness for a column or set of columns. A primary key constraint automatically has a unique constraint defined on it, but a unique key does not automatically make a column the primary key. There can be many unique constraints defined per table, but only one primary key constraint. 8. What is a foreign key? A foreign key is a field (or set of fields) in one table that refers to the primary key of another table. The relationship between the two tables is created by referencing the foreign key to the primary key of the other table. 9. What is a join? JOIN is a keyword used to query data from two or more tables based on the relationship between the fields of the tables. Keys play a major role when JOINs are used.

- 59. SQL Interview QnA: Data Scientist- Job Material 10. What are the types of join and explain each? There are various types of join that can be used to retrieve data, and the choice depends on the relationship between the tables. Inner Join. An inner join returns only the rows that have at least one match in both tables. Right Join. A right join returns the rows that are common between the tables plus all rows of the right-hand table. Simply put, it returns all the rows from the right-hand table even when there are no matches in the left-hand table. Left Join. A left join returns the rows that are common between the tables plus all rows of the left-hand table. Simply put, it returns all the rows from the left-hand table even when there are no matches in the right-hand table. Full Join. A full join returns rows when there is a match in either table. This means it returns all the rows from the left-hand table and all the rows from the right-hand table. 11. What is normalization? Normalization is the process of minimizing redundancy and dependency by organizing the fields and tables of a database. The main aim of normalization is that an addition, deletion or modification of a field can be made in a single table.
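
The difference between an inner join and a left join is easiest to see on a tiny dataset. The sketch below runs the SQL through Python's built-in `sqlite3` module with an in-memory database; the `student` and `exam` tables and their rows are hypothetical. Only INNER and LEFT joins are shown, because older SQLite versions do not support RIGHT or FULL joins.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE student (student_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE exam (exam_id INTEGER PRIMARY KEY, student_id INTEGER, score INTEGER);
    INSERT INTO student VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
    INSERT INTO exam VALUES (10, 1, 88), (11, 2, 75), (12, 4, 60);
""")

# Inner join: only students that have a matching exam row.
inner_rows = conn.execute("""
    SELECT s.name, e.score
    FROM student AS s
    INNER JOIN exam AS e ON e.student_id = s.student_id
    ORDER BY s.student_id
""").fetchall()

# Left join: every student, with NULL (None) where no exam row matches.
left_rows = conn.execute("""
    SELECT s.name, e.score
    FROM student AS s
    LEFT JOIN exam AS e ON e.student_id = s.student_id
    ORDER BY s.student_id
""").fetchall()

print(inner_rows)
print(left_rows)
conn.close()
```

Chen has no exam row, so Chen appears only in the left join, with a score of `None`. The exam row for student 4 has no matching student and appears in neither result.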

- 60. SQL Interview QnA: Data Scientist- Job Material 12. What is Denormalization? Denormalization is a technique for accessing data by moving from higher to lower normal forms of a database. It is also the process of introducing redundancy into a table by incorporating data from related tables. 14. What is a View? A view is a virtual table that consists of a subset of the data contained in a table. Views are not physically present, so they take less space to store. A view can combine data from one or more tables, depending on the relationship. 15. What is an Index? An index is a performance-tuning method that allows faster retrieval of records from a table. An index creates an entry for each value, which makes retrieving data faster. What is a trigger? A DB trigger is code or a program that automatically executes in response to some event on a table or view in a database. Mainly, triggers help maintain the integrity of the database. Example: When a new student is added to the student database, new records should be created in the related tables like Exam, Score and Attendance tables.

- 61. SQL Interview QnA: Data Scientist- Job Material What is the difference between DELETE and TRUNCATE commands? The DELETE command removes rows from a table, and a WHERE clause can be used to restrict which rows are removed. Commit and rollback can be performed after a DELETE statement. TRUNCATE removes all rows from the table, and in many databases (MySQL and Oracle, for example) a TRUNCATE operation cannot be rolled back. What are local and global variables and their differences? Local variables are variables that exist and can be used only inside a function. They are not known to other functions, which cannot refer to or use them. Local variables are created each time the function is called. Global variables are variables that exist and can be used throughout the program. A name declared as a global should not be declared again inside a function, and global variables are not re-created each time a function is called. What is the difference between TRUNCATE and DROP statements? TRUNCATE removes all the rows from the table but keeps the table itself, whereas DROP removes the table from the database. In many databases neither operation can be rolled back.

- 62. SQL Interview QnA: Data Scientist- Job Material What are aggregate and scalar functions? Aggregate functions perform a calculation over a set of rows and return a single value, typically computed from a column in a table. Scalar functions return a single value based on each input value. Example: Aggregate: MAX(), COUNT(), calculated over a column of values. Scalar: UCASE(), NOW(), calculated for an individual value such as a string. How can you create an empty table from an existing table? For example: Select * into studentcopy from student where 1=2 Here, we are copying the student table to another table with the same structure and no rows copied, because the condition 1=2 is never true. How to fetch common records from two tables? A result set of common records can be obtained with: Select studentID from student INTERSECT Select StudentID from Exam
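
Both tricks above can be tried out with Python's built-in `sqlite3` module. This is a sketch under one stated substitution: SQLite has no `SELECT ... INTO`, so `CREATE TABLE ... AS SELECT` is used instead to achieve the same empty copy. The table contents are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE student (student_id INTEGER, name TEXT);
    CREATE TABLE exam (student_id INTEGER, score INTEGER);
    INSERT INTO student VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
    INSERT INTO exam VALUES (2, 75), (3, 90), (4, 60);
    -- 1 = 2 is never true, so the structure is copied but no rows are.
    CREATE TABLE studentcopy AS SELECT * FROM student WHERE 1 = 2;
""")

# Column names of the copy, read from SQLite's table metadata.
copy_columns = [row[1] for row in conn.execute("PRAGMA table_info(studentcopy)")]
copy_row_count = conn.execute("SELECT COUNT(*) FROM studentcopy").fetchone()[0]

# INTERSECT keeps only the IDs present in both tables.
common_ids = [row[0] for row in conn.execute(
    "SELECT student_id FROM student INTERSECT SELECT student_id FROM exam ORDER BY 1"
)]

print(copy_columns, copy_row_count, common_ids)
conn.close()
```

The copy has the same columns as `student` but zero rows, and only IDs 2 and 3 appear in both tables.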

- 63. SQL Interview QnA: Data Scientist- Job Material How to fetch alternate records from a table? Records can be fetched for both odd and even row numbers. To display even-numbered rows: Select studentId from (Select rowno, studentId from student) where mod(rowno,2)=0 To display odd-numbered rows: Select studentId from (Select rowno, studentId from student) where mod(rowno,2)=1 How to select unique records from a table? Select unique records from a table by using the DISTINCT keyword: Select DISTINCT StudentID, StudentName from Student What is the command used to fetch first 5 characters of the string? There are many ways to fetch the first 5 characters of a string: Select SUBSTRING(StudentName,1,5) as studentname from student Select LEFT(Studentname,5) as studentname from student
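
These three queries can be exercised with Python's `sqlite3` module. The sketch relies on SQLite-specific stand-ins that differ from the syntax above: the implicit `rowid` column plays the role of `rowno`, the `%` operator replaces `mod()`, and `substr()` replaces `SUBSTRING`/`LEFT`. The table contents are hypothetical and include one duplicate row on purpose.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE student (student_id INTEGER, name TEXT);
    INSERT INTO student VALUES
        (1, 'Alexander'), (2, 'Benjamin'), (3, 'Charlotte'),
        (4, 'Dominic'), (2, 'Benjamin');
""")


def column(sql):
    """Run a query and return the first column of every row as a list."""
    return [row[0] for row in conn.execute(sql)]


# Alternate records: even and odd row positions (rowid is SQLite-specific).
even_rows = column("SELECT student_id FROM student WHERE rowid % 2 = 0 ORDER BY rowid")
odd_rows = column("SELECT student_id FROM student WHERE rowid % 2 = 1 ORDER BY rowid")

# Unique records: the duplicate (2, 'Benjamin') row collapses into one.
distinct_rows = conn.execute(
    "SELECT DISTINCT student_id, name FROM student ORDER BY student_id"
).fetchall()

# First 5 characters of each distinct name.
first_five = column("SELECT DISTINCT substr(name, 1, 5) FROM student ORDER BY 1")

print(even_rows, odd_rows, distinct_rows, first_five)
conn.close()
```

The fifth inserted row is the duplicate, which is why ID 2 shows up among both the even and the odd row positions.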

- 64. SQL Interview QnA: Data Scientist- Job Material Which operator is used in query for pattern matching? The LIKE operator is used for pattern matching, with two wildcards: 1. % matches zero or more characters. 2. _ (underscore) matches exactly one character. Example: Select * from Student where studentname like 'a%' Select * from Student where studentname like 'ami_' Summary • Creating a database involves translating the logical database design model into the physical database. • MySQL supports a number of data types for numeric, date and string values. • The CREATE DATABASE command is used to create a database. • The CREATE TABLE command is used to create tables in a database. • MySQL Workbench supports forward engineering, which involves automatically generating SQL scripts from the logical database model that can be executed to create the physical database.
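
The two LIKE wildcards can be compared side by side using Python's `sqlite3` module. The student names below are hypothetical, chosen so that the two patterns return different results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE student (studentname TEXT);
    INSERT INTO student VALUES ('amit'), ('amir'), ('anita'), ('bala');
""")

# 'a%': starts with "a", followed by any number of characters.
starts_with_a = [row[0] for row in conn.execute(
    "SELECT studentname FROM student WHERE studentname LIKE 'a%' ORDER BY studentname"
)]

# 'ami_': starts with "ami", followed by exactly one more character.
ami_plus_one = [row[0] for row in conn.execute(
    "SELECT studentname FROM student WHERE studentname LIKE 'ami_' ORDER BY studentname"
)]

print(starts_with_a)
print(ami_plus_one)
conn.close()
```

`anita` matches `'a%'` but not `'ami_'`, because the underscore pattern fixes both the prefix and the total length.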

- 65. MS Excel Interview QnA: Data Scientist- Job Material

- 66. MS Excel Interview QnA: Data Scientist- Job Material What are the common data formats in Microsoft Excel? “The most common data formats used in Microsoft Excel are numbers, percentages, dates and sometimes text (as in words and strings of text).” How are these data formats used in Microsoft Excel? “Numbers can be formatted in data cells as decimals or rounded values. Percentages show a part of a whole, the whole being 100%. Dates can automatically change format depending on the region and location Microsoft Excel is set to. And the text format is used when analyses, reports or other documents are entered into the Excel spreadsheet as data.” What are the cell references? “Cell references are used to refer to data located in a different cell of the same Excel spreadsheet. There are three different cell reference types: absolute, relative and mixed cell references.” What are the functions of different cell references?

- 67. MS Excel Interview QnA: Data Scientist- Job Material An absolute cell reference (for example $A$1) always points to the same cell, no matter where the formula containing it is copied. A relative cell reference (for example A1) shifts along with the formula when the formula is copied to another cell. And a mixed cell reference (for example $A1 or A$1) keeps either the column or the row fixed while the other part shifts. Which key or combination of keys allow you to toggle between the absolute, relative and mixed cell references? A sample answer on the key to press to shift between the cell references can be: “On Windows devices the F4 key lets you change the cell reference type. On Mac devices the key combination Command + T lets you do the same.” What is the function of the dollar sign ($) in Microsoft Excel? “The dollar sign tells Excel not to change the row or column it precedes when the formula is copied to other cells.” What is the LOOKUP function in Microsoft Excel? “The LOOKUP function allows the user to find exact or partial matches in the spreadsheet. The VLOOKUP option lets the user search for data arranged vertically. The HLOOKUP option functions the same way but for data arranged horizontally.”

- 68. MS Excel Interview QnA: Data Scientist- Job Material What is conditional formatting? “Conditional formatting allows you to change the visual aspect of cells based on their contents. For example, you want all the cells which include a value of 3 to be highlighted in yellow and made italic. Conditional formatting lets you achieve this in only seconds.” What are the most important functions of Excel to you as a business analyst? “I most often use the LOOKUP function, followed by the COUNT and COUNTA functions. The IF, MAX and MIN functions are also among the ones I usually use.” What does the COUNTA function execute in an Excel spreadsheet? “The COUNTA function counts the cells in a range that are not empty, whatever type of data they contain, and ignores the empty cells.” Can you import data from other software into an Excel spreadsheet? “Importing data from various external data sources into an Excel spreadsheet is available. Just go to the ‘Data’ tab in the toolbar above. By clicking the ‘Get External Data’ button, you will be able to import data from other software into Excel.”

- 69. MS Excel Interview QnA: Data Scientist- Job Material Why do you think the knowledge of Microsoft Excel is important for a business analyst? “Using Excel and dealing with the company’s data is crucial because it’s often the main data the organization has. And it is in the hands of the business analyst to analyze it and come up with results and solutions for problems. The business analyst is also a financial consultant as well as an analyst. You can become the person that the CEO listens to in order to ‘make’ or ‘break’ certain deals.” As a business analyst, do you choose to store your sensitive data in a Microsoft Excel spreadsheet? “Yes, I do store my client’s data in Microsoft Excel. However, if the data I am dealing with is confidential, then I would not be storing that sensitive data in an Excel file.” As a business analyst, how would you operate with sensitive data in Microsoft Excel? “Due to the fact that I would be responsible for the transfer, the possible disappearance or the leak of the data, I would store confidential data in software other than Microsoft Excel.” How can you protect your data in Microsoft Excel? “From the Review tab, you can choose to protect your sheet with a password. That way the spreadsheet will be password protected and cannot be changed without the password.”

- 70. MS Excel Interview QnA: Data Scientist- Job Material

- 71. Python Interview QnA: Data Scientist- Job Material Q1. What built-in data types are used in Python? Python uses several built-in data types, including: Number (int, float and complex), String (str), Tuple (tuple), Range (range), List (list), Set (set) and Dictionary (dict). In Python, data types are used to classify or categorize data, and every value has a data type. Q2. How are data analysis libraries used in Python? What are some of the most common libraries? A key reason Python is such a popular data science programming language is that there is an extensive collection of data analysis libraries available. These libraries include functions, tools and methods for managing and analyzing data. There are Python libraries for performing a wide range of data science functions, including processing image and textual data, data mining and data visualization. The most widely used Python data analysis libraries include:

- 72. Python Interview QnA: Data Scientist- Job Material o Pandas o NumPy o SciPy o TensorFlow o scikit-learn o Seaborn o Matplotlib Q3. How is a negative index used in Python? Negative indexes are used in Python to access items of lists, strings and arrays from the end, counting backwards. For example, index -1 refers to the last item in a list, while -2 refers to the second to last. Here’s an example of a negative index in Python: b = "Python Coding Fun" print(b[-1]) >> n

- 73. Python Interview QnA: Data Scientist- Job Material Q4. What’s the difference between lists and tuples in Python? Lists and tuples are built-in sequence types in Python that store one or more objects or values. Key differences include: Syntax – Lists are enclosed in square brackets and tuples are enclosed in parentheses. Mutable vs. Immutable – Lists are mutable, which means they can be modified after being created. Tuples are immutable, which means they cannot be modified. Operations – Lists have more functionality available than tuples, including insert and pop operations and in-place sorting. Size – Because tuples are immutable, they generally require less memory than lists and can be slightly faster to create and iterate over. Python Statistics Questions Python statistics questions are based on implementing statistical analyses and testing how well you know statistical concepts and can translate them into code. Many times, these questions take the form of random sampling from a distribution, generating histograms, and computing different statistical metrics such as standard deviation, mean, or median.

- 74. Python Interview QnA: Data Scientist- Job Material Q5. Write a function to generate N samples from a normal distribution and plot them on a histogram. This is a relatively simple problem. We have to set up our distribution and generate N samples from it, which are then plotted. In this question, we make use of SciPy, a library made for scientific computing. Q6. Write a function that takes in a list of dictionaries with a key and list of integers and returns a dictionary with the standard deviation of each list. Note that this should be done without using the NumPy built-in functions. Example:
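
One possible input and solution for Q6 can be sketched as follows. The input shape (dictionaries with `"key"` and `"values"` entries), the function name `compute_deviation`, and the sample numbers are all assumptions made for illustration. The sketch uses the population standard deviation (dividing by N) and rounds to two decimals.

```python
import math

def compute_deviation(records):
    """Return {key: population standard deviation} for each record.

    Assumed (hypothetical) input shape:
        [{"key": "name", "values": [int, ...]}, ...]
    """
    result = {}
    for record in records:
        values = record["values"]
        mean = sum(values) / len(values)
        # Average of squared distances from the mean (the variance).
        variance = sum((v - mean) ** 2 for v in values) / len(values)
        result[record["key"]] = round(math.sqrt(variance), 2)
    return result

# Hypothetical sample data, for illustration only.
sample = [
    {"key": "list1", "values": [4, 5, 2, 3, 4, 5, 2, 3]},
    {"key": "list2", "values": [1, 1, 34, 12, 40, 3, 9, 7]},
]
print(compute_deviation(sample))
```

An empty `values` list would raise `ZeroDivisionError` in this sketch; in an interview it is worth saying how you would handle that case.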

- 75. Python Interview QnA: Data Scientist- Job Material Hint: Remember the equation for standard deviation. To implement this function, we need the following equation, where we take the sum of the squares of each data value minus the mean, divide by the total number of data points, and take the square root of the result: $\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(x_i - \mu)^2}$. Does the function inside the square root look familiar? Q7. Given a list of stock prices in ascending order by datetime, write a function that outputs the max profit from buying once and selling once at a later time. Example: `stock_prices = [10,5,20,32,25,12]`, `get_max_profit(stock_prices) -> 27`. Making it harder, given a list of stock prices and date times in ascending order by datetime, write a function that outputs the profit and start and end dates to buy and sell for max profit.

- 76. Python Interview QnA: Data Scientist- Job Material stock_prices = [10,5,20,32,25,12] dts = [ '2019-01-01', '2019-01-02', '2019-01-03', '2019-01-04', '2019-01-05', '2019-01-06', ] get_profit_dates(stock_prices,dts) -> (27, '2019-01-02', '2019-01-04') Hint: There are several ways to solve this problem. But a good place to start is by thinking about your goal: If we want to maximize profit, ideally we would want to buy at the lowest price and sell at the highest possible price.
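
Following the hint, one common approach to Q7 is a single pass that remembers the lowest price seen so far and checks the profit from selling at each later price. This is a sketch of that idea, not the only valid solution. The function names come from the question, and the implementation details are assumptions.

```python
def get_profit_dates(stock_prices, dts):
    """Return (max_profit, buy_date, sell_date) for one buy followed by one sell."""
    if len(stock_prices) < 2:
        raise ValueError("need at least two prices")
    min_idx = 0  # index of the lowest price seen so far
    best = (stock_prices[1] - stock_prices[0], 0, 1)  # (profit, buy_idx, sell_idx)
    for i in range(1, len(stock_prices)):
        profit = stock_prices[i] - stock_prices[min_idx]
        if profit > best[0]:
            best = (profit, min_idx, i)
        if stock_prices[i] < stock_prices[min_idx]:
            min_idx = i
    profit, buy_idx, sell_idx = best
    return profit, dts[buy_idx], dts[sell_idx]

def get_max_profit(stock_prices):
    """Profit only; reuses the dated version with positions as stand-in dates."""
    positions = list(range(len(stock_prices)))
    return get_profit_dates(stock_prices, positions)[0]

stock_prices = [10, 5, 20, 32, 25, 12]
dts = ["2019-01-01", "2019-01-02", "2019-01-03",
       "2019-01-04", "2019-01-05", "2019-01-06"]
print(get_max_profit(stock_prices))
print(get_profit_dates(stock_prices, dts))
```

The single pass runs in O(n) time, whereas comparing every buy/sell pair would take O(n²). If prices only fall, this sketch returns the smallest loss rather than zero.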

- 77. Python Interview QnA: Data Scientist- Job Material Python Probability Questions Most Python questions that involve probability are testing your knowledge of probability concepts. These questions are very similar to the Python statistics questions, except that they focus on simulating concepts such as the binomial distribution or Bayes’ theorem. Since most general probability questions are about calculating chances under a certain condition, almost all of them can be checked by writing Python to simulate the problem. Q8. Amy and Brad take turns rolling a fair six-sided die. Whoever rolls a “6” first wins the game. Amy starts by rolling first. What’s the probability that Amy wins? Given this scenario, we can write a Python function that simulates the game thousands of times and counts how often Amy wins. Solving this problem then requires understanding how to represent the two players and simulate the turns, with Amy rolling first each time.
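
A minimal simulation sketch for Q8 follows. The function names, the trial count, and the fixed seed are assumptions chosen for illustration. For comparison, the exact answer is $p = \frac{1/6}{1 - (5/6)^2} = \frac{6}{11} \approx 0.545$, since Amy either wins on her first roll or both players miss and the game restarts.

```python
import random

def amy_wins(rng):
    """Play one game; return True if Amy (who rolls first) rolls the first 6."""
    amys_turn = True
    while True:
        if rng.randint(1, 6) == 6:
            return amys_turn
        amys_turn = not amys_turn  # pass the die to the other player

def estimate_amy_win_probability(trials=100_000, seed=0):
    """Estimate Amy's win probability by repeated simulation."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    wins = sum(amy_wins(rng) for _ in range(trials))
    return wins / trials

estimate = estimate_amy_win_probability()
print(f"simulated: {estimate:.3f}, exact: {6 / 11:.3f}")
```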

- 79. Python Interview QnA: Data Scientist- Job Material Name mutable and immutable objects. Mutability is the ability to change part of a data structure in place without having to recreate it. Mutable objects include lists, sets, and dictionaries (including the values stored in them). Immutability means that an object cannot be changed after its creation. Immutable objects include integers, floats, booleans, strings, and tuples; dictionary keys must be hashable, which in practice usually means immutable. What are compound data types and data structures? Compound data types are constructed from simple, primitive data types. Data structures in Python allow us to store multiple observations. These are lists, tuples, sets, and dictionaries. List: Lists are enclosed in square brackets []. Lists are mutable, that is, their elements and size can be changed. Lists are generally slightly slower than tuples. Example: ['A', 1, 'i'] Tuple: Tuples are enclosed in parentheses (). Tuples are immutable, i.e. they cannot be edited. Tuples are generally slightly faster than lists. Tuples are a good choice when the elements of a sequence should not change. Example: ('Twenty', 20, 'XX')
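
The mutable/immutable distinction can be checked directly in code. The sketch below uses made-up variable names and shows that a list changes in place, that a tuple rejects item assignment, and that a tuple (but not a list) can serve as a dictionary key.

```python
# Lists are mutable: both names refer to the same object, so both see the change.
nums = [1, 2, 3]
alias = nums
nums.append(4)

# Tuples are immutable: item assignment raises TypeError.
point = (1, 2)
try:
    point[0] = 10
    tuple_was_modified = True
except TypeError:
    tuple_was_modified = False

# Dictionary keys must be hashable: a tuple works, a list does not.
lookup = {point: "ok"}
try:
    lookup[[1, 2]] = "bad"
    list_key_allowed = True
except TypeError:
    list_key_allowed = False

print(alias, tuple_was_modified, list_key_allowed)
```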

- 80. Python Interview QnA: Data Scientist- Job Material What is the difference between a list and a tuple? List: Lists are enclosed in square brackets []. Lists are mutable, that is, their elements and size can be changed. Lists are generally slightly slower than tuples. Example: ['A', 1, 'i'] Tuple: Tuples are enclosed in parentheses () and are immutable, i.e. they cannot be edited. Example: ('Twenty', 20, 'XX') What is the difference between ‘is’ and ‘==’? ‘==’ checks whether two variables have equal values, and ‘is’ checks whether they refer to the same object (identity). What is the difference between indexing and slicing? Indexing looks up a single value in a data structure, whereas slicing retrieves a sequence of elements. What is the lambda function? A lambda function is an anonymous, or nameless, function. These functions are called anonymous because they are not declared in the standard manner using the def keyword. A lambda does not need the return keyword either; the return is implicit. The function can have any number of parameters but its body is a single expression, whose value is returned. It cannot contain statements (such as assignments or loops) or multiple expressions. In Python 3, print is a function, so it can be called inside a lambda, although this is rarely useful. A lambda has its own local namespace; it can read its parameters as well as variables from enclosing scopes and the global namespace. Example: `x = lambda i, j: i + j` followed by `print(x(7, 8))` gives the output 15.
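
The three comparisons on this slide can be sketched briefly as follows. All names are illustrative. The last part shows that a lambda defined inside a function can read a variable from the enclosing scope (`factor`), which is standard Python closure behaviour.

```python
# '==' compares values, 'is' compares identity.
a = [1, 2, 3]
b = [1, 2, 3]
c = a
equal_values = (a == b)    # same contents
same_object = (a is b)     # two separate list objects
alias_identity = (a is c)  # c is another name for the same object

# Indexing returns one element; slicing returns a new sequence.
letters = ["a", "b", "c", "d", "e"]
single = letters[1]
chunk = letters[1:4]

# A lambda is a single expression whose value is returned implicitly.
add = lambda i, j: i + j

def make_scaler(factor):
    # The lambda reads 'factor' from the enclosing function's scope.
    return lambda x: x * factor

triple = make_scaler(3)
print(equal_values, same_object, alias_identity, single, chunk, add(7, 8), triple(5))
```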

- 81. Machine Learning: Data Scientist- Job Material What is a default value? A default argument means the function uses the default parameter value when the caller does not pass a value for that parameter. What is the difference between lists and arrays? An array is a data structure that contains a group of elements of the same data type, e.g., integer or string, whereas a Python list can hold elements of different types. The array elements share the same variable name, but each element has its own unique index number or key. The purpose is to organize the data so that the related set of values can be easily sorted or searched. How do I prepare for a Python interview? There is no one way to prepare for a Python interview, but knowing the basics can never be discounted. It is necessary to know at least the following topics for Python interview questions for data science: You must have command over basic control flow, that is, for loops, while loops, and if-elif-else statements. You must know how to write all these by hand. You need a solid foundation in the various data types and data structures that Python offers: how, where, when and why to use strings, lists, tuples, dictionaries, and sets. You must know how to iterate over each of these. You must know how to use a list comprehension and a dictionary comprehension, and how to write a function. You should know how to use lambda functions, especially with map, reduce and filter. If needed, you must be able to discuss how and where you have used Python to solve common problems such as generating the Fibonacci series or Armstrong numbers. Be thorough with Pandas and its various functions. Also, be well versed in the various libraries for visualization, scientific and computational purposes, and Machine Learning.
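
Several of the basics listed above fit in a few lines. The following sketch uses a hypothetical `greet` function and a made-up `numbers` list to show a default argument, lambdas with `map`, `filter` and `reduce`, and the equivalent comprehensions.

```python
from functools import reduce

def greet(name, greeting="Hello"):
    """'greeting' falls back to its default when the caller omits it."""
    return f"{greeting}, {name}!"

numbers = [1, 2, 3, 4, 5, 6]  # hypothetical data, for illustration only

squares = list(map(lambda n: n * n, numbers))        # transform every item
evens = list(filter(lambda n: n % 2 == 0, numbers))  # keep matching items
total = reduce(lambda acc, n: acc + n, numbers, 0)   # fold into one value

# Comprehensions often express the same ideas more readably.
squares_of_evens = [n * n for n in numbers if n % 2 == 0]
square_by_number = {n: n * n for n in numbers}

print(greet("Ada"), greet("Ada", greeting="Hi"))
print(squares, evens, total, squares_of_evens)
```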

- 82. Python Interview QnA: Data Scientist- Job Material What is Machine Learning? Machine Learning is making the computer learn from studying data and statistics. Machine Learning is a step in the direction of artificial intelligence (AI). A Machine Learning program analyses data and learns to predict the outcome. Data Set In the mind of a computer, a data set is any collection of data. It can be anything from an array to a complete database. Example of an array: [99,86,87,88,111,86,103,87,94,78,77,85,86] Numerical data are numbers, and can be split into two numerical categories: Discrete Data - numbers that are limited to integers. Example: The number of cars passing by. Continuous Data - numbers that can take any value within a range. Example: The price of an item, or the size of an item.

- 83. Python Interview QnA: Data Scientist- Job Material What are Mean, Median, and Mode? speed = [99,86,87,88,111,86,103,87,94,78,77,85,86] Mean - The average value. To calculate the mean, find the sum of all values, and divide the sum by the number of values. Median - The median value is the value in the middle, after you have sorted all the values. Mode - The mode value is the value that appears the greatest number of times.
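
As a quick check of these three definitions, here is a minimal sketch using Python's standard `statistics` module on the `speed` list from the slide. Choosing this module is an assumption, as other libraries offer equivalent functions.

```python
import statistics

speed = [99, 86, 87, 88, 111, 86, 103, 87, 94, 78, 77, 85, 86]

mean_speed = statistics.mean(speed)      # sum of values / number of values
median_speed = statistics.median(speed)  # middle value after sorting
mode_speed = statistics.mode(speed)      # most frequent value

print(round(mean_speed, 2), median_speed, mode_speed)
```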

- 84. Python Interview QnA: Data Scientist- Job Material What is Standard Deviation? Standard deviation is a number that describes how spread out the values are. A low standard deviation means that most of the numbers are close to the mean (average) value. A high standard deviation means that the values are spread out over a wider range. This time we have registered the speed of 7 cars: speed = [86,87,88,86,87,85,86] The standard deviation is: 0.9 Meaning that most of the values are within the range of 0.9 from the mean value, which is 86.4. import numpy speed = [86,87,88,86,87,85,86] x = numpy.std(speed) print(x)

- 85. Python Interview QnA: Data Scientist- Job Material Variance Variance is another number that indicates how spread out the values are. In fact, if you take the square root of the variance, you get the standard deviation! Or the other way around, if you multiply the standard deviation by itself, you get the variance! To calculate the variance you have to do as follows: 1. Find the mean: (32+111+138+28+59+77+97) / 7 = 77.4
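
The full variance calculation for these seven values can be sketched in plain Python. The steps after the mean (squared differences, then their average) follow the standard definition of population variance; the variable names are illustrative. Small differences from figures computed with a rounded mean are expected.

```python
import math

speed = [32, 111, 138, 28, 59, 77, 97]

# Step 1: find the mean.
mean = sum(speed) / len(speed)

# Step 2: for each value, find the squared difference from the mean.
squared_diffs = [(x - mean) ** 2 for x in speed]

# Step 3: the variance is the average of those squared differences.
variance = sum(squared_diffs) / len(speed)

# The standard deviation is the square root of the variance.
std_dev = math.sqrt(variance)

print(round(mean, 1), round(variance, 1), round(std_dev, 2))
```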

- 86. Python Interview QnA: Data Scientist- Job Material Standard Deviation As we have learned, the standard deviation is the square root of the variance: √1432.25 = 37.85 import numpy speed = [32,111,138,28,59,77,97] x = numpy.std(speed) print(x) What are Percentiles? Percentiles are used in statistics to give you a number below which a given percentage of the values fall. Let's say we have an array of the ages of all the people who live on a street. What is the age that 90% of the people are younger than? import numpy ages = [5,31,43,48,50,41,7,11,15,39,80,82,32,2,8,6,25,36,27,61,31] x = numpy.percentile(ages, 90) print(x)
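
To show what a percentile calculation does under the hood, here is a small sketch that interpolates linearly between the two nearest sorted values. The helper name `percentile` is hypothetical. This is intended to mirror the default behaviour of `numpy.percentile`, but treat that equivalence as an assumption to verify rather than a guarantee.

```python
def percentile(values, q):
    """Linear-interpolation percentile for q between 0 and 100."""
    ordered = sorted(values)
    rank = (len(ordered) - 1) * q / 100  # fractional position in the sorted list
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    fraction = rank - lower
    return ordered[lower] + (ordered[upper] - ordered[lower]) * fraction

ages = [5, 31, 43, 48, 50, 41, 7, 11, 15, 39, 80,
        82, 32, 2, 8, 6, 25, 36, 27, 61, 31]

print(percentile(ages, 90))
print(percentile(ages, 50))
```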

- 87. Machine Learning QnA: Data Scientist- Job Material

- 88. Machine Learning QnA: Data Scientist- Job Material

- 89. Machine Learning QnA: Data Scientist- Job Material

- 90. Machine Learning QnA: Data Scientist- Job Material

- 91. Machine Learning QnA: Data Scientist- Job Material

- 92. Machine Learning QnA: Data Scientist- Job Material What is Machine Learning? Machine Learning (ML) is the field of computer science that enables computer systems to make sense of data in much the same way as human beings do. In simple words, ML is a type of artificial intelligence that extracts patterns from raw data by using an algorithm or method. The main focus of ML is to allow computer systems to learn from experience without being explicitly programmed and without human intervention. What are Different Types of Machine Learning algorithms? There are various types of machine learning algorithms. One broad way to categorize them is by whether they are trained with human supervision (supervised, unsupervised, and reinforcement learning).

- 93. Machine Learning QnA: Data Scientist- Job Material What is Supervised Learning? Supervised learning is the machine learning task of inferring a function from labeled training data. The training data consists of a set of training examples. Example 01: Identifying the gender of a person from their height and weight. Below are the popular supervised learning algorithms. • Support Vector Machines • Regression • Naive Bayes • Decision Trees • K-nearest Neighbour Algorithm • Neural Networks. Example 02: If you build a T-shirt classifier, the labels will be “this is an S, this is an M and this is an L”, based on showing the classifier examples of S, M, and L.
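
The T-shirt example can be sketched with a tiny k-nearest-neighbour classifier written in plain Python. The training data, the feature choice (height and weight), and the function name `predict_size` are all hypothetical. Real projects would normally use a library and scale the features first.

```python
import math
from collections import Counter

# Hypothetical labeled examples: (height_cm, weight_kg) -> T-shirt size.
training_data = [
    ((158, 52), "S"), ((160, 55), "S"), ((163, 58), "S"),
    ((168, 63), "M"), ((170, 66), "M"), ((173, 69), "M"),
    ((178, 77), "L"), ((181, 81), "L"), ((185, 86), "L"),
]

def predict_size(height_cm, weight_kg, k=3):
    """Predict a size by majority vote among the k closest labeled examples."""
    query = (height_cm, weight_kg)
    neighbours = sorted(training_data, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]

print(predict_size(159, 54), predict_size(171, 67), predict_size(183, 84))
```

The labels attached to each example are what make this supervised: the classifier only generalizes from examples whose correct answer is already known.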