AI Ethical Framework.pptx

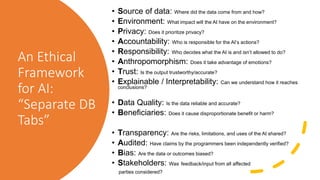

- 1. An Ethical Framework for AI: “Separate DB Tabs” • Source of data: Where did the data come from and how? • Environment: What impact will the AI have on the environment? • Privacy: Does it prioritize privacy? • Accountability: Who is responsible for the AI’s actions? • Responsibility: Who decides what the AI is and isn’t allowed to do? • Anthropomorphism: Does it take advantage of emotions? • Trust: Is the output trustworthy/accurate? • Explainable / Interpretable: Can we understand how it reaches conclusions? • Data Quality: Is the data reliable and accurate? • Beneficiaries: Does it cause disproportionate benefit or harm? • Transparency: Are the risks, limitations, and uses of the AI shared? • Audited: Have claims by the programmers been independently verified? • Bias: Are the data or outcomes biased? • Stakeholders: Was feedback/input from all affected parties considered?

- 2. Source of Data Understanding how the organization that created the AI collected the data it used to train the AI model is important. It can have both ethical and legal implications. 1. Where did the data come from and how did they get it? 2. Is the data representative of the population it's trying to model, or did the organization only gather data that was convenient to collect? 3. Who participated in the data creation? 4. Who is left out of the datasets? 5. Who chose which data to collect? 6. Did the sources provide informed consent to use their data for the purposes of the model?

- 3. Environment The benefits of using AI can't be overlooked, but its cost to the environment is unavoidable. The good of a modern technology is only a temporary benefit if it's significantly outweighed by the damage done to the planet. 1. What impact will the AI have on the environment? 2. How much electricity will the AI need? 3. Where will the electricity come from (oil, gas, solar, wind, etc.)? 4. How can energy usage be reduced? 5. How much pollution does the AI generate? 6. How much water waste does the AI create?
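Questions 2 and 3 above can be turned into a rough back-of-the-envelope estimate. The sketch below is only an illustration: the function names are hypothetical, and the power draw, run time, PUE (power usage effectiveness, the ratio of total facility energy to IT equipment energy), and grid carbon intensity are made-up inputs that would need to be replaced with measured or published figures.

```python
def estimate_energy_kwh(avg_power_kw: float, hours: float, pue: float = 1.0) -> float:
    """Estimate energy drawn from the grid, in kWh.

    avg_power_kw: average power draw of the hardware (assumed input).
    hours: how long the workload runs.
    pue: power usage effectiveness of the facility; 1.0 means no overhead.
    """
    if avg_power_kw < 0 or hours < 0 or pue < 1.0:
        raise ValueError("power and hours must be non-negative, and pue must be >= 1.0")
    return avg_power_kw * hours * pue


def estimate_emissions_kg(energy_kwh: float, grid_kg_co2e_per_kwh: float) -> float:
    """Estimate emissions in kg CO2e from energy use and grid carbon intensity."""
    if energy_kwh < 0 or grid_kg_co2e_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    return energy_kwh * grid_kg_co2e_per_kwh


if __name__ == "__main__":
    # Hypothetical workload: 3.2 kW of hardware running for 100 hours
    # in a facility with PUE 1.5, on a grid emitting 0.4 kg CO2e per kWh.
    energy = estimate_energy_kwh(avg_power_kw=3.2, hours=100, pue=1.5)
    emissions = estimate_emissions_kg(energy, grid_kg_co2e_per_kwh=0.4)
    print(f"{energy:.0f} kWh, {emissions:.0f} kg CO2e")
```

The answer to question 3 enters through the grid carbon intensity: the same workload on a mostly solar or wind grid would have a much lower intensity figure than one powered by oil or gas.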

- 4. Privacy If AI models are trained on datasets containing private information, further inspection is necessary to preserve people's privacy. 1. What security is in place to prevent Personally Identifiable Information (PII), health information, and/or financial information from leaking? 2. Who can access the PII? 3. Do people have a right to have their PII removed from the model? 4. Does the AI contribute to what may be privacy-related torts (intrusion on seclusion, false light, public disclosure of private facts, and appropriation of name or likeness)?

- 5. Accountability Regardless of whether the regulatory scheme is based on self-regulation or one imposed by a government, there must be clear accountability for the scheme to be effective. 1. Who should ultimately be responsible for the AI’s actions? The user? The developers of the AI? The company? The executives? The board of directors? 2. How will the user get justice if the AI harms them? 3. Is there someone who oversees and "owns" the AI development within the company?

- 6. Responsibility Society must determine who should get to make decisions regarding AI that impacts society. For example, should that power belong solely to profit-driven private individuals? Or should there be more democratic and public input? 1. Who should decide what AI should and should not be allowed to do? The engineers? The executives at the company? The Board of the company? The government? 2. Who should have access to advanced AI? Should everyone be trusted equally? 3. How do we monitor the AI to ensure it's not used for harmful activities?

- 7. Anthropomorphism As numerous studies and common interactions with smart speakers and chatbots reveal, humans are quick to anthropomorphize AI by giving it pronouns and interpreting its actions as being driven by intent. It's not difficult to imagine how this tendency could be used to convince people to take actions they may not have otherwise taken. 1. Does the AI take advantage of user emotions? For example, does it try to come across as human or as a sympathetic creature so the user will spend more money on something? 2. Is there a reason the AI company chooses to make the AI seem human? For example, why give a speaker a human voice rather than one that is more alien or robotic? Is that the best alternative?

- 8. Trust One of the primary purposes for using AI is for it to give us reliable results. However, some advanced AI systems, like LLMs, have shown that a response from a complex AI isn't necessarily accurate, as they are capable of fabricating facts and URLs. 1. Are the outputs of the AI accurate? That is, do they accurately reflect facts and reality? 2. How can we be sure an LLM isn't hallucinating?

- 9. Explainable / Interpretable It's vital the AI can explain in sufficient detail how it reached its conclusion. This is especially relevant when AIs make decisions that can impact others' livelihoods, such as with finances or healthcare. A black box that humans are told to place their faith in is not sufficient. 1. Can we interrogate the AI? 2. Can the AI accurately explain why it reached its output? 3. Can the AI explain how it reached its output? 4. Explainable AI should consider who it should be explainable for. For example, AI for medicine should be explainable to both doctors and patients. 5. Are doctors using risk scores to triage patients able to interpret a risk score correctly? Are bankers able to interpret AI ratings of loan applicants? 6. Is the system output only a single score or does it also output potential decision explanations and guidance for how to use the score?

- 10. Data Quality Apart from the method of collecting data (see Source of Data, above), it is imperative to take the data quality into consideration. Entering bad data into a model will result in an unreliable model that could have dangerous implications. Garbage in, garbage out. 1. Is the data reliable and accurate? 2. Is there any measurement error (for example, from incomplete or incorrect data)? 3. Did selection bias play a role in data collection? Selection bias can occur if the subset of individuals represented in the training data is not representative of the population of interest. 4. Does the AI model overfit because it was trained on too little data? 5. Are historical biases being kept alive via the AI? 6. Are some ethnicities, races, nationalities, or other populations inappropriately missing from the data? For example, text scraped from the web may overrepresent people who can afford internet access and have the time to post a comment. 7. Check to ensure the data between groups is substantially similar. If it’s not, you may get inaccurate and unfair results. This happens with credit ratings when poorer folks have less credit history, so they are given lower scores, which makes it harder to work up to higher scores.
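One simple way to start on questions 3 and 6 above is to compare each group's share of the training data with its share of the population the model is meant to serve. The sketch below assumes group labels are available for every record and that reference population shares are known; the function names, group labels, shares, and the 5-percentage-point tolerance are all hypothetical choices for illustration, not a standard.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return observed share minus expected share for each reference group.

    sample_groups: iterable of group labels, one per training record.
    reference_shares: dict mapping group label -> expected share (0..1).
    A negative gap means the group is underrepresented in the sample.
    """
    labels = list(sample_groups)
    if not labels:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """List groups whose share falls short of the reference by more than tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


if __name__ == "__main__":
    # Hypothetical data: 100 records and made-up reference shares.
    sample = ["A"] * 70 + ["B"] * 20 + ["C"] * 10
    reference = {"A": 0.5, "B": 0.3, "C": 0.2}
    gaps = representation_gaps(sample, reference)
    print(underrepresented(gaps))
```

A check like this only flags who is missing by headcount. It says nothing about measurement error or historical bias within the records that are present, so it complements the other questions rather than replacing them.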

- 11. Beneficiaries Because the most advanced AI models require hundreds of millions of dollars to create, only the already-wealthy can participate in their creation. This severely limits who can create the AI and naturally forms a dominant oligopoly as the success of the advanced AI makes the already-wealthy companies wealthier. The same effect likely happens on every scale regarding use of the AI as well. Entities that can afford to use the AI will benefit more than those who can't use the AI, which extends the advantage of the entities that are already relatively wealthy. 1. Do the rich get richer at the expense of the less fortunate? Do individuals benefit? Or certain communities? Or certain nations? 2. Is there inequitable access to the AI (e.g., people of low socioeconomic status (SES) may go to hospitals that don’t have the latest and greatest AI; low-SES students may not have the best AI in their schools)? 3. Is there any issue with Fortune 500 corporations growing their profits to benefit a relatively small number of people (investors) by replacing human workers with AI? Or is this a feature of capitalism, not a bug?

- 12. Transparency Transparency is being open and clear with the user about how the AI should be used, when the AI is being used, the limitations of the AI, potential benefits and harms, etc. In short, the AI should not be a secret to the user. 1. Are the kinds of harms an AI system might cause, the shortcomings the AI may suffer from, the varying degrees of severity of the harms, the limitations of the technology, how and when it’s being used, and so on communicated to the user? 2. If the AI creates visualizations, like charts, does it include context, known unknowns and uncertainties, the source of the data, and how the source data was collected? 3. Examples of limitations the AI may need to disclose: (a) Many AI systems are probabilistic, meaning the same data run through the same system could yield a different result each time. (b) The AI can only see what’s in the data (text, video, image, rows and columns, etc.). It doesn’t know the context around it (e.g., maybe someone had high blood pressure because a loved one recently died). 4. Do all interfaces of the AI that make decisions about users clearly and succinctly inform users what is going on, how it works, how long the outputs are useful, and why the AI was needed to reach the output as opposed to any other method? 5. Does the AI system allow users to obtain the information it has about them, and let the users dispute that information if the users believe it's inaccurate?
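Limitation (a) above, that the same input can yield a different result each time, can be illustrated with a toy sampler. This is a deliberately simplified sketch and not how any particular AI system works: the candidate outputs, their weights, and the function names are hypothetical. It draws an output at random according to fixed weights, which is enough to show why repeated runs may disagree and why a fixed seed makes a run reproducible.

```python
import random

# Hypothetical outputs and the (made-up) probability the toy system assigns to each.
CANDIDATES = ["approve", "review", "deny"]
WEIGHTS = [0.6, 0.3, 0.1]


def sample_output(rng: random.Random) -> str:
    """Draw one output at random according to WEIGHTS."""
    return rng.choices(CANDIDATES, weights=WEIGHTS, k=1)[0]


def run_many(n: int, seed=None) -> list:
    """Run the toy system n times on the same input.

    With seed=None each call may produce a different sequence;
    with a fixed seed the sequence is reproducible.
    """
    rng = random.Random(seed)
    return [sample_output(rng) for _ in range(n)]


if __name__ == "__main__":
    print(run_many(5))           # may differ from run to run
    print(run_many(5, seed=42))  # same sequence every time for this seed
```

Disclosing whether outputs are sampled like this, and whether a seed or similar setting is fixed, tells users how much weight a single result can bear.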

- 13. Audited It's one thing for a company to make claims about the safety, security, accuracy, and impact of their AI. It's another to have an independent third party audit and verify those claims. With advanced AI it may be better to verify, then trust. 1. Have independent auditors reviewed the data and source code to verify that the AI is what the company says it is and does what the company says it does? 2. Is the AI model independently audited on at least an annual basis? 3. Can we be certain the claims made by the developers are accurate and reliable? For example, that the data isn't biased, that the outputs are trustworthy, and that the environmental impact is no greater than claimed?

- 14. Bias As seen in hiring, promotions, image recognition, allocation of resources, and in criminal justice, among many, many other areas, AI is fallible. The developers may have the best intentions and the algorithm may appear entirely neutral, but if the outcomes are biased, or if the data used to train the model was gathered during more biased times in history (against people of certain races, genders, sexual orientations, etc.), then the AI should face greater scrutiny. 1. Is the data biased to favor one group over another based on an irrelevant or protected class? 2. Were various forms of bias explored (e.g., disparate impact, statistical parity, equal opportunity, and/or average odds), or did the company only publish the most flattering analyses? 3. Does the AI perpetuate societal biases?
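The four measures named in question 2 above can be computed from each group's confusion matrix. The sketch below uses commonly cited definitions (selection-rate difference and ratio, true-positive-rate difference, and the mean of the false-positive-rate and true-positive-rate differences). The class and function names are hypothetical, the counts are made-up, and an auditor may use different conventions, so treat it as an illustration rather than a reference implementation.

```python
from dataclasses import dataclass


@dataclass
class GroupCounts:
    """Confusion-matrix counts for one group (favorable outcome = positive)."""
    tp: int
    fp: int
    tn: int
    fn: int

    @property
    def selection_rate(self) -> float:
        # Share of the group that received the favorable prediction.
        return (self.tp + self.fp) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def tpr(self) -> float:
        # True positive rate: qualified members who were correctly selected.
        return self.tp / (self.tp + self.fn)

    @property
    def fpr(self) -> float:
        # False positive rate: unqualified members who were selected anyway.
        return self.fp / (self.fp + self.tn)


def fairness_report(unprivileged: GroupCounts, privileged: GroupCounts) -> dict:
    """Compare an unprivileged group against a privileged one."""
    u, p = unprivileged, privileged
    return {
        # 0 means equal selection rates.
        "statistical_parity_difference": u.selection_rate - p.selection_rate,
        # 1 means equal selection rates; a ratio below 0.8 is a common rule-of-thumb flag.
        "disparate_impact_ratio": u.selection_rate / p.selection_rate,
        # 0 means equal true positive rates.
        "equal_opportunity_difference": u.tpr - p.tpr,
        # 0 means equal error behavior, averaged over FPR and TPR.
        "average_odds_difference": 0.5 * ((u.fpr - p.fpr) + (u.tpr - p.tpr)),
    }


if __name__ == "__main__":
    # Hypothetical counts for two groups of 100 people each.
    privileged = GroupCounts(tp=40, fp=10, tn=40, fn=10)
    unprivileged = GroupCounts(tp=20, fp=5, tn=45, fn=30)
    for name, value in fairness_report(unprivileged, privileged).items():
        print(f"{name}: {value:.2f}")
```

Reporting all four numbers side by side speaks directly to the concern in question 2: a system can look acceptable on one measure and poor on another, so publishing only the most flattering one hides that.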

- 15. Stakeholders Representatives from all groups who may be affected by the AI should be consulted during the AI's development prior to its release. Stakeholders should include even those who won't know of the AI's existence and will never interact with it directly, because they could still be affected indirectly. 1. Did the AI company get feedback from a representative sample of everyone the AI will affect? 2. Do the values of the users, the developers, and the society in which the AI is used align?