Practical Petabyte Pushing

Tiny slide deck from a 5-minute lightning talk covering a recent project involving live replication of 2 petabytes of scientific data. Please leave feedback if you'd like to see this as a long-form technical blog article or conference talk. Thanks!

Report

Share

Report

Share

Recommended

Recommended

More Related Content

What's hot

What's hot (20)

Bio-IT & Cloud Sobriety: 2013 Beyond The Genome Meeting

Bio-IT & Cloud Sobriety: 2013 Beyond The Genome Meeting

Big Data and Fast Data – Big and Fast Combined, is it Possible?

Big Data and Fast Data – Big and Fast Combined, is it Possible?

Strata 2017 (San Jose): Building a healthy data ecosystem around Kafka and Ha...

Strata 2017 (San Jose): Building a healthy data ecosystem around Kafka and Ha...

Big Data and Fast Data - big and fast combined, is it possible?

Big Data and Fast Data - big and fast combined, is it possible?

5 Factors Impacting Your Big Data Project's Performance

5 Factors Impacting Your Big Data Project's Performance

Guest Lecture: Introduction to Big Data at Indian Institute of Technology

Guest Lecture: Introduction to Big Data at Indian Institute of Technology

Briefing room: An alternative for streaming data collection

Briefing room: An alternative for streaming data collection

Chattanooga Hadoop Meetup - Hadoop 101 - November 2014

Chattanooga Hadoop Meetup - Hadoop 101 - November 2014

Similar to Practical Petabyte Pushing

Similar to Practical Petabyte Pushing (20)

Data Engineer's Lunch #85: Designing a Modern Data Stack

Data Engineer's Lunch #85: Designing a Modern Data Stack

Data Engineer's Lunch #60: Series - Developing Enterprise Consciousness

Data Engineer's Lunch #60: Series - Developing Enterprise Consciousness

Audax Group: CIO Perspectives - Managing The Copy Data Explosion

Audax Group: CIO Perspectives - Managing The Copy Data Explosion

Google Cloud Computing on Google Developer 2008 Day

Google Cloud Computing on Google Developer 2008 Day

(ATS6-PLAT07) Managing AEP in an enterprise environment

(ATS6-PLAT07) Managing AEP in an enterprise environment

DM Radio Webinar: Adopting a Streaming-Enabled Architecture

DM Radio Webinar: Adopting a Streaming-Enabled Architecture

Accelerating workloads and bursting data with Google Dataproc & Alluxio

Accelerating workloads and bursting data with Google Dataproc & Alluxio

Deep learning beyond the learning - Jörg Schad - Codemotion Amsterdam 2018

Deep learning beyond the learning - Jörg Schad - Codemotion Amsterdam 2018

Powering Real-Time Big Data Analytics with a Next-Gen GPU Database

Powering Real-Time Big Data Analytics with a Next-Gen GPU Database

Apache Cassandra at Target - Cassandra Summit 2014

Apache Cassandra at Target - Cassandra Summit 2014

AWS re:Invent 2016: Automating Workflows for Analytics Pipelines (DEV401)

AWS re:Invent 2016: Automating Workflows for Analytics Pipelines (DEV401)

Denver devops : enabling DevOps with data virtualization

Denver devops : enabling DevOps with data virtualization

Off-Label Data Mesh: A Prescription for Healthier Data

Off-Label Data Mesh: A Prescription for Healthier Data

More from Chris Dagdigian

More from Chris Dagdigian (6)

2014 BioIT World - Trends from the trenches - Annual presentation

2014 BioIT World - Trends from the trenches - Annual presentation

Bio-IT Asia 2013: Informatics & Cloud - Best Practices & Lessons Learned

Bio-IT Asia 2013: Informatics & Cloud - Best Practices & Lessons Learned

Recently uploaded

💉💊+971581248768>> SAFE AND ORIGINAL ABORTION PILLS FOR SALE IN DUBAI AND ABUDHABI}}+971581248768

+971581248768 Mtp-Kit (500MG) Prices » Dubai [(+971581248768**)] Abortion Pills For Sale In Dubai, UAE, Mifepristone and Misoprostol Tablets Available In Dubai, UAE CONTACT DR.Maya Whatsapp +971581248768 We Have Abortion Pills / Cytotec Tablets /Mifegest Kit Available in Dubai, Sharjah, Abudhabi, Ajman, Alain, Fujairah, Ras Al Khaimah, Umm Al Quwain, UAE, Buy cytotec in Dubai +971581248768''''Abortion Pills near me DUBAI | ABU DHABI|UAE. Price of Misoprostol, Cytotec” +971581248768' Dr.DEEM ''BUY ABORTION PILLS MIFEGEST KIT, MISOPROTONE, CYTOTEC PILLS IN DUBAI, ABU DHABI,UAE'' Contact me now via What's App…… abortion Pills Cytotec also available Oman Qatar Doha Saudi Arabia Bahrain Above all, Cytotec Abortion Pills are Available In Dubai / UAE, you will be very happy to do abortion in Dubai we are providing cytotec 200mg abortion pill in Dubai, UAE. Medication abortion offers an alternative to Surgical Abortion for women in the early weeks of pregnancy. We only offer abortion pills from 1 week-6 Months. We then advise you to use surgery if its beyond 6 months. Our Abu Dhabi, Ajman, Al Ain, Dubai, Fujairah, Ras Al Khaimah (RAK), Sharjah, Umm Al Quwain (UAQ) United Arab Emirates Abortion Clinic provides the safest and most advanced techniques for providing non-surgical, medical and surgical abortion methods for early through late second trimester, including the Abortion By Pill Procedure (RU 486, Mifeprex, Mifepristone, early options French Abortion Pill), Tamoxifen, Methotrexate and Cytotec (Misoprostol). The Abu Dhabi, United Arab Emirates Abortion Clinic performs Same Day Abortion Procedure using medications that are taken on the first day of the office visit and will cause the abortion to occur generally within 4 to 6 hours (as early as 30 minutes) for patients who are 3 to 12 weeks pregnant. When Mifepristone and Misoprostol are used, 50% of patients complete in 4 to 6 hours; 75% to 80% in 12 hours; and 90% in 24 hours. 
We use a regimen that allows for completion without the need for surgery 99% of the time. All advanced second trimester and late term pregnancies at our Tampa clinic (17 to 24 weeks or greater) can be completed within 24 hours or less 99% of the time without the need surgery. The procedure is completed with minimal to no complications. Our Women's Health Center located in Abu Dhabi, United Arab Emirates, uses the latest medications for medical abortions (RU-486, Mifeprex, Mifegyne, Mifepristone, early options French abortion pill), Methotrexate and Cytotec (Misoprostol). The safety standards of our Abu Dhabi, United Arab Emirates Abortion Doctors remain unparalleled. They consistently maintain the lowest complication rates throughout the nation. Our Physicians and staff are always available to answer questions and care for women in one of the most difficult times in their lives. The decision to have an abortion at the Abortion Cl+971581248768>> SAFE AND ORIGINAL ABORTION PILLS FOR SALE IN DUBAI AND ABUDHA...

+971581248768>> SAFE AND ORIGINAL ABORTION PILLS FOR SALE IN DUBAI AND ABUDHA...?#DUbAI#??##{{(☎️+971_581248768%)**%*]'#abortion pills for sale in dubai@

Recently uploaded (20)

Why Teams call analytics are critical to your entire business

Why Teams call analytics are critical to your entire business

TrustArc Webinar - Unlock the Power of AI-Driven Data Discovery

TrustArc Webinar - Unlock the Power of AI-Driven Data Discovery

Repurposing LNG terminals for Hydrogen Ammonia: Feasibility and Cost Saving

Repurposing LNG terminals for Hydrogen Ammonia: Feasibility and Cost Saving

+971581248768>> SAFE AND ORIGINAL ABORTION PILLS FOR SALE IN DUBAI AND ABUDHA...

+971581248768>> SAFE AND ORIGINAL ABORTION PILLS FOR SALE IN DUBAI AND ABUDHA...

Boost Fertility New Invention Ups Success Rates.pdf

Boost Fertility New Invention Ups Success Rates.pdf

Understanding Discord NSFW Servers A Guide for Responsible Users.pdf

Understanding Discord NSFW Servers A Guide for Responsible Users.pdf

Scaling API-first – The story of a global engineering organization

Scaling API-first – The story of a global engineering organization

Bajaj Allianz Life Insurance Company - Insurer Innovation Award 2024

Bajaj Allianz Life Insurance Company - Insurer Innovation Award 2024

Workshop - Best of Both Worlds_ Combine KG and Vector search for enhanced R...

Workshop - Best of Both Worlds_ Combine KG and Vector search for enhanced R...

Polkadot JAM Slides - Token2049 - By Dr. Gavin Wood

Polkadot JAM Slides - Token2049 - By Dr. Gavin Wood

Apidays New York 2024 - The Good, the Bad and the Governed by David O'Neill, ...

Apidays New York 2024 - The Good, the Bad and the Governed by David O'Neill, ...

Apidays Singapore 2024 - Building Digital Trust in a Digital Economy by Veron...

Apidays Singapore 2024 - Building Digital Trust in a Digital Economy by Veron...

Axa Assurance Maroc - Insurer Innovation Award 2024

Axa Assurance Maroc - Insurer Innovation Award 2024

Cloud Frontiers: A Deep Dive into Serverless Spatial Data and FME

Cloud Frontiers: A Deep Dive into Serverless Spatial Data and FME

Practical Petabyte Pushing

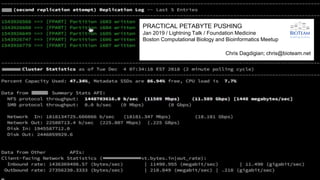

- 1. PRACTICAL PETABYTE PUSHING
  Jan 2019 / Lightning Talk / Foundation Medicine Boston Computational Biology and Bioinformatics Meetup
  Chris Dagdigian; chris@bioteam.net / @chris_dag

- 2. 30 Second Background
  ● 24x7 Production HPC Environment
  ● 100s of user accounts; 10+ power users; 50+ frequent users
  ● Many integrated “cluster aware” commercial apps leverage this system
  ● ~2 petabytes scientific & user data (Linux & Windows clients)
  ● Multiple catastrophic NAS outages in 2018
    ○ Demoralized scientists; shell-shocked IT staff; angry management
    ○ Replacement storage platform procured; 100% NAS-to-NAS migration ordered
  ● Mandate / Mission: 2 petabyte live data migration
    ○ IT must re-earn trust and confidence of scientific end-users & leadership
    ○ User morale/confidence is low; Stability/Uptime is key; Zero Unplanned Outages
    ○ “Jobs must flow”: HPC remains in production during data migration

- 3. Lightning Talk ProTip: CONCLUSIONS FIRST
  Things we already knew + things we wished we knew beforehand
  1. NEVER commingle “data management” & “data movement” at the same time
     Clean up/manage your data BEFORE or AFTER; never DURING
  2. Understand vendor-specific data protection overhead upfront (small files especially)
     The new NAS needed 20% more raw disk to store the same data, a non-trivial CapEx cost at petascale
  3. Interrogate/understand your data before you move it (or buy new storage!)
     Massive replication bandwidth is meaningless if you have 200+ million tiny files; this was our real-world data movement bottleneck
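Tips 2 and 3 both depend on knowing your file-size distribution before you buy storage or plan a move. Below is a minimal Python sketch of that kind of survey. It assumes a POSIX-mounted source share. The 64 KiB "tiny file" threshold and the histogram bucket bounds are arbitrary, hypothetical choices and are not figures from the talk. A single-threaded walk like this will itself be slow on a share with hundreds of millions of files, so you may want to sample subtrees.

```python
import bisect
import os
from collections import Counter

# Hypothetical "tiny file" threshold; choose one that matches your storage platform.
SMALL_FILE_BYTES = 64 * 1024
# Hypothetical histogram bucket upper bounds in bytes: 4 KiB, 64 KiB, 1 MiB, 64 MiB, 1 GiB.
BUCKET_BOUNDS = [4 * 1024, 64 * 1024, 1024 ** 2, 64 * 1024 ** 2, 1024 ** 3]


def profile_tree(root: str) -> dict:
    """Walk `root` (os.walk does not follow symlinked dirs) and summarize file counts/sizes."""
    histogram = Counter()
    total_files = total_bytes = small_files = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                # lstat: a symlink is counted as the link itself, never its target
                size = os.lstat(os.path.join(dirpath, name)).st_size
            except OSError:
                continue  # file deleted or unreadable mid-scan on a live filesystem
            total_files += 1
            total_bytes += size
            if size <= SMALL_FILE_BYTES:
                small_files += 1
            # bucket i holds sizes in (BUCKET_BOUNDS[i-1], BUCKET_BOUNDS[i]];
            # bucket len(BUCKET_BOUNDS) holds anything larger than the last bound
            histogram[bisect.bisect_left(BUCKET_BOUNDS, size)] += 1
    return {
        "files": total_files,
        "bytes": total_bytes,
        "small_files": small_files,
        "small_fraction": small_files / total_files if total_files else 0.0,
        "histogram": dict(histogram),
    }
```

For example, `profile_tree("/mnt/old_nas/projects")` (the mount point is hypothetical) returns the file count, total bytes, the fraction of files at or under the threshold, and a histogram keyed by bucket index. The small-file fraction is the number to put in front of storage vendors when they quote protection overhead.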

- 4. Lightning Talk ProTip: CONCLUSIONS FIRST (continued)
  4. Be proactive in setting (and re-setting) management expectations
     Data transfer time estimates based on aggregate network bandwidth were insanely wrong. Real-world throughput ranged from 2mb/sec to 13GB/sec
  5. Tasks that take days/weeks require visibility & transparency
     Users & management will want a dashboard or progress view
  6. Work against full filesystems or network shares ONLY (see tip #1 …)
     Attempts to get clever with curated “exclude-these-files-and-folders” lists add complexity and introduce vectors for human/operator error
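Adding a per-file cost to the estimate shows why bandwidth-only numbers can go so wrong. The sketch below uses a deliberately simple model with two assumptions. First, `streams` parallel workers are perfectly balanced and each gets an equal share of the link. Second, every file pays a fixed overhead `per_file_s` for stat/open/create/metadata work, regardless of its size. Both `per_file_s` and `streams` are hypothetical inputs that you would need to measure on your own source and destination systems; they do not come from the talk.

```python
SECONDS_PER_DAY = 86_400
PB = 10 ** 15  # decimal units, as storage vendors usually quote them
GB = 10 ** 9


def bandwidth_only_seconds(total_bytes: float, link_bytes_per_s: float) -> float:
    """Naive estimate: total data divided by aggregate link bandwidth."""
    return total_bytes / link_bytes_per_s


def file_aware_seconds(total_bytes: float, n_files: int, link_bytes_per_s: float,
                       per_file_s: float, streams: int) -> float:
    """Add a fixed per-file cost spread over `streams` perfectly balanced workers.

    Each worker gets link_bytes_per_s / streams of bandwidth, so the byte-transfer
    term equals the naive estimate; the extra n_files * per_file_s / streams term
    is the per-file overhead that bandwidth-only estimates leave out.
    """
    return bandwidth_only_seconds(total_bytes, link_bytes_per_s) + n_files * per_file_s / streams
```

Take the 2 PB and 200 million files from the slides and 13 GB/s, the top of the observed range. The bandwidth-only estimate comes out at about 1.8 days. Now add an assumed 20 ms per file spread across 16 streams. The file-aware estimate rises to about 4.7 days, and the gap grows as per-file cost rises or parallelism drops.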

- 5. Materials & Methods - Tooling
  ● We are not special/unique in life science informatics; plagiarizing methods from Amazon, supercomputing sites & high-energy physics is a legit strategy
  ● Our tooling choice: fpart/fpsync from https://github.com/martymac/fpart
    ○ ‘fpart’ does the hard work of filesystem crawling to build ‘partition’ lists that can be used as input data for whatever tool you want to use to replicate/copy data
    ○ ‘fpsync’ is a wrapper script to parallelize, distribute and manage a swarm of replication jobs
    ○ ‘rsync’: https://rsync.samba.org/
  ● Actual data replication via ‘rsync’ (managed by fpsync)
    ○ The fpsync wrapper script is pluggable and supports different data mover/copy binaries
    ○ We explicitly chose ‘rsync’ because it is well known, well tested and has the fewest potential edge and corner cases to deal with
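Here is a toy Python sketch that makes the fpart/fpsync division of labour concrete. It greedily packs a crawled list of `(path, size)` entries into partitions capped by file count and total bytes. It then builds one `rsync --files-from` command per partition so that the jobs can run in parallel. This illustrates the concept only; it is neither fpart's actual packing algorithm nor fpsync's command line. The `max_files` and `max_bytes` limits and the list-file names are hypothetical.

```python
from typing import Iterable, List, Tuple


def partition(entries: Iterable[Tuple[str, int]], max_files: int, max_bytes: int) -> List[List[str]]:
    """Greedily pack (relative_path, size) pairs into partitions capped by count and bytes.

    A single file larger than max_bytes ends up alone in its own partition.
    """
    parts: List[List[str]] = []
    current: List[str] = []
    current_bytes = 0
    for path, size in entries:
        # close the current partition if adding this file would exceed either cap
        if current and (len(current) >= max_files or current_bytes + size > max_bytes):
            parts.append(current)
            current, current_bytes = [], 0
        current.append(path)
        current_bytes += size
    if current:
        parts.append(current)
    return parts


def rsync_command(list_file: str, src: str, dst: str) -> List[str]:
    """Build one rsync invocation that copies only the paths listed in list_file.

    --files-from reads one path per line, relative to src; -a keeps permissions,
    times, ownership and symlinks for the listed files.
    """
    return ["rsync", "-a", f"--files-from={list_file}", src, dst]
```

Each partition would be written to its own list file (one path per line, relative to the source root), and its command would be handed to a job runner. Scheduling and supervising that swarm is the work fpsync takes off your hands.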

- 6. Materials & Methods - Process
  The Process (one filesystem or share at a time):
  ● [A] Perform an initial full replication in the background on the live, “in-use” filesystem
  ● [B] Perform additional ‘re-sync’ replications to stay current
  ● [C] Perform a ‘delete pass’ sync to catch data that was deleted from the source filesystem while replication(s) were occurring
  ● Repeat tasks [B] and [C] until the time needed for a full sync + delete pass is small enough to fit within an acceptable maintenance/outage window
  ● Schedule the outage window; make the source filesystem read-only at a global level; perform the final replication sync; migrate client mounts; have a backout plan handy
  ● Test, test, test, test, test, test (admins & end-users should both be involved in testing)
  ● Have a plan to document & support the previously unknown storage users who will come out of the woodwork once you mark the source filesystem read-only (!)
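The [B]/[C] loop on this slide can be sketched as follows. The sketch assumes a single share copied with plain `rsync`, not fpsync, run locally against two mounted filesystems. It runs the re-sync pass and the `--delete` pass as separate commands to mirror steps [B] and [C]. The `window_s` and `max_rounds` parameters are hypothetical. The sketch treats rsync exit code 24 as success. That code means some source files vanished during the transfer, which is routine on a live filesystem.

```python
import subprocess
import time
from typing import Callable, List

RSYNC_OK = (0, 24)  # 24 = partial transfer due to vanished source files


def rsync_cmd(src: str, dst: str, delete: bool) -> List[str]:
    """Build an rsync command; the trailing slash on src copies its contents, not the dir."""
    cmd = ["rsync", "-a"]
    if delete:
        cmd.append("--delete")  # remove files from dst that no longer exist in src
    return cmd + [src.rstrip("/") + "/", dst]


def timed_round(src: str, dst: str) -> float:
    """Run one [B] re-sync pass, then one [C] delete pass; return elapsed seconds."""
    start = time.monotonic()
    for delete in (False, True):
        result = subprocess.run(rsync_cmd(src, dst, delete))
        if result.returncode not in RSYNC_OK:
            raise RuntimeError(f"rsync exited with {result.returncode}")
    return time.monotonic() - start


def converge(run_round: Callable[[], float], window_s: float, max_rounds: int = 20) -> int:
    """Repeat rounds until one finishes within the maintenance window; return rounds run."""
    for round_no in range(1, max_rounds + 1):
        if run_round() <= window_s:
            return round_no
    raise RuntimeError("sync + delete pass never fit inside the maintenance window")
```

For example, `converge(lambda: timed_round("/mnt/old_nas/share", "/mnt/new_nas/share"), window_s=4 * 3600)` keeps cycling until a round fits inside the window. The paths and the 4-hour window are hypothetical. Once the source is read-only during the scheduled outage, one more `timed_round` would serve as the final sync.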

- 7. Wrap Up
  Commercial Alternative
  ● If management requires fancy live dashboards & other UI candy --OR-- you have limited IT/ops staff available to support scripted OSS tooling …
  ● You can purchase petascale data migration capability commercially
    ○ Recommendation: Talk to DataDobi (https://datadobi.com)
    ○ (Yes, this is a different niche than IBM Aspera or GridFTP-type tooling …)
  Acknowledgements
  ● Aaron Gardner (aaron@bioteam.net)
    ○ One of several Bioteam infrastructure gurus with extreme storage & filesystem expertise
    ○ He did the hard work on this
    ○ I just scripted things & monitored progress #lazy
  More Info/Details: If you want to see this topic expanded into a long-form blog post / technical write-up or BioITWorld conference talk, please let me know via email!