Neural Networks

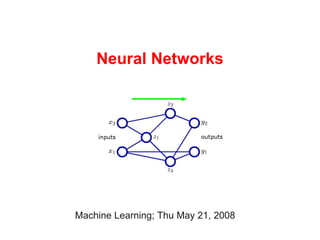

- 1. Neural Networks Machine Learning; Thu May 21, 2008

- 2. Motivation Problem: With our linear methods, we can train the weights but not the basis functions. (Equation labels: activator, fixed basis function, trainable weight.)

- 3. Motivation Can we learn the basis functions?

- 4. Motivation Maybe reuse ideas from our previous technology? Adjustable weight vectors?
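The equation annotated by the labels "activator", "fixed basis function" and "trainable weight" did not survive in the transcript. A plausible reconstruction, assuming the usual notation for linear models with fixed basis functions (the symbols $f$, $\phi_j$, $w_j$ and $M$ are assumptions, not taken from the slides), is:

$$
y(\mathbf{x}, \mathbf{w}) = f\left( \sum_{j=1}^{M} w_j \, \phi_j(\mathbf{x}) \right)
$$

Here $f$ is the activator, each $\phi_j$ is a fixed basis function, and each $w_j$ is a trainable weight. Learning the basis functions would mean giving each $\phi_j$ adjustable parameters of its own.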

- 5. Historical notes This motivation is not how the technology came about! Neural networks were developed in an attempt to achieve AI by modeling the brain (but artificial neural networks have very little to do with biological neural networks).

- 6. Feed-forward neural network Two levels of “linear regression” (or classification): Output layer Hidden layer
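The two levels can be written out as follows. This is a sketch in standard textbook notation, not the slide's own equation; the hidden activation $h$, output activator $f$, the superscripts $(1)$ and $(2)$, and the bias terms $w_{j0}$, $w_{k0}$ are assumptions made for illustration:

$$
z_j = h\left( \sum_{i} w^{(1)}_{ji} x_i + w^{(1)}_{j0} \right) \quad \text{(hidden layer)}, \qquad
y_k = f\left( \sum_{j} w^{(2)}_{kj} z_j + w^{(2)}_{k0} \right) \quad \text{(output layer)}
$$

The hidden values $z_j$ play the role of basis functions, but their weights $w^{(1)}_{ji}$ are now trainable.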

- 8. Example tanh activation function for the 3 hidden units; linear activation function for the output layer.
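A minimal sketch of feed-forward evaluation for this kind of example, assuming a single hidden layer with three tanh units and a linear output. The function name `feed_forward` and all weight values are hypothetical and chosen only for illustration:

```python
import math

def feed_forward(x, W1, b1, W2, b2):
    """Evaluate a network with one tanh hidden layer and a linear output layer."""
    # Hidden layer: z_j = tanh(sum_i W1[j][i] * x[i] + b1[j])
    z = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    # Output layer (linear activation): y_k = sum_j W2[k][j] * z[j] + b2[k]
    y = [sum(w * zj for w, zj in zip(row, z)) + b
         for row, b in zip(W2, b2)]
    return z, y

# Hypothetical weights: 1 input, 3 hidden units, 1 output
W1 = [[1.0], [-2.0], [0.5]]
b1 = [0.0, 1.0, -1.0]
W2 = [[0.3, -0.7, 1.2]]
b2 = [0.1]

z, y = feed_forward([0.0], W1, b1, W2, b2)
```

The function returns the hidden values as well as the outputs because both are needed later when computing the gradient.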

- 13. Network training If we can give the network a probabilistic interpretation, we can use our “standard” estimation methods for training.

- 14. Network training Regression problems:

- 15. Network training Classification problems:

- 16. Network training Training by minimizing an error function.

- 17. Network training Typically, we cannot minimize analytically but need numerical optimization algorithms.

- 18. Network training Notice: There will typically be more than one minimum of the error function (due to symmetries). Notice: At best, we can find local minima.
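The formulas for the regression and classification cases are missing from the transcript. The standard maximum-likelihood choices are sketched below as an assumption about what the slides showed; the targets $t_n$, the noise variance $\sigma^2$ and the restriction to two classes are not from the source.

For regression, with Gaussian noise around the network output:

$$
p(t \mid \mathbf{x}, \mathbf{w}) = \mathcal{N}\left(t \mid y(\mathbf{x}, \mathbf{w}), \sigma^2\right)
\quad\Longrightarrow\quad
E(\mathbf{w}) = \frac{1}{2} \sum_{n=1}^{N} \left( y(\mathbf{x}_n, \mathbf{w}) - t_n \right)^2
$$

For two-class classification, with $y_n = y(\mathbf{x}_n, \mathbf{w})$ interpreted as a class probability:

$$
p(t \mid \mathbf{x}, \mathbf{w}) = y^{t} (1 - y)^{1 - t}
\quad\Longrightarrow\quad
E(\mathbf{w}) = - \sum_{n=1}^{N} \left[ t_n \ln y_n + (1 - t_n) \ln (1 - y_n) \right]
$$

In both cases the error function is the negative log-likelihood, up to terms that do not depend on $\mathbf{w}$, so minimizing the error is the same as maximizing the likelihood.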

- 19. Parameter optimization Numerical optimization is beyond the scope of this class; you can (should) get away with just using appropriate libraries such as GSL (the GNU Scientific Library).

- 20. Parameter optimization These can typically find (local) minima of general functions, but the most efficient algorithms also need the gradient of the function being minimized.
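To illustrate why the gradient matters and why only local minima are found, here is a small sketch of plain gradient descent on a toy one-dimensional error function with two minima. It is a stand-in for illustration, not the algorithm GSL uses; the functions `error`, `gradient` and `gradient_descent`, the step size and the iteration count are all assumptions:

```python
def error(w):
    # Toy error function with two minima, at w = -1 and w = +1
    return (w * w - 1.0) ** 2

def gradient(w):
    # Analytic derivative of error(w)
    return 4.0 * w * (w * w - 1.0)

def gradient_descent(w, step=0.05, iterations=500):
    # Repeatedly move a small step against the gradient
    for _ in range(iterations):
        w -= step * gradient(w)
    return w

# Which minimum is found depends on the starting point
w_right = gradient_descent(0.5)
w_left = gradient_descent(-0.5)
```

Starting at 0.5 the iteration settles near +1, and starting at -0.5 it settles near -1, so the result depends on the initial weights.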

- 21. Back propagation Back propagation is an efficient algorithm for computing both the error function and the gradient.

- 22. Back propagation For iid data:

- 23. Back propagation We just focus on this guy
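The equation following "For iid data" is missing from the transcript. Presumably (this is an assumption) it is the decomposition of the error into one term per observation, where $E_n$ denotes the error contributed by observation $n$:

$$
E(\mathbf{w}) = \sum_{n=1}^{N} E_n(\mathbf{w}),
\qquad
\nabla E(\mathbf{w}) = \sum_{n=1}^{N} \nabla E_n(\mathbf{w})
$$

Under that reading, "this guy" is a single term $E_n$: once its gradient can be computed, the full gradient is obtained by summing over the data.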

- 24. Back propagation After running the feed-forward algorithm, we know the values z_i and y_k. We need to compute the δs (called errors).

- 25. Back propagation The choice of error function and output activator typically makes it simple to deal with the output layer.

- 26. Back propagation For hidden layers, we apply the chain rule.
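The slides' formulas for the errors were not preserved. In standard textbook notation (assumed here: $a_j$ is the weighted input to unit $j$, $h$ is the hidden activation function, $t_k$ is the target, and $w_{ji}$ is the weight from unit $i$ to unit $j$), the pieces fit together as follows.

For the usual pairings of output activator and error function, such as linear outputs with sum-of-squares error, the output errors are simply

$$
\delta_k = y_k - t_k
$$

For hidden units, the chain rule gives

$$
\delta_j = h'(a_j) \sum_{k} w_{kj} \, \delta_k
$$

and the gradient with respect to any weight is the error at the receiving end times the value at the sending end:

$$
\frac{\partial E_n}{\partial w_{ji}} = \delta_j \, z_i
$$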

- 27. Calculating error and gradient Algorithm:
  1. Apply feed forward to calculate hidden and output variables and then the error.
  2. Calculate output errors and propagate errors back to get the gradient.
  3. Iterate ad lib.
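The first two steps of the algorithm can be sketched as below for a single observation. This is an illustrative sketch under stated assumptions, not the slides' own code: one tanh hidden layer, linear outputs, sum-of-squares error, and no bias terms. The names `feed_forward` and `error_and_gradient` and all numbers are hypothetical. The last lines compare one gradient entry against a finite-difference estimate as a sanity check.

```python
import math

def feed_forward(x, W1, W2):
    # Hidden values z_j = tanh(a_j) and linear outputs y_k (biases omitted)
    z = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y = [sum(w * zj for w, zj in zip(row, z)) for row in W2]
    return z, y

def error_and_gradient(x, t, W1, W2):
    # Step 1: feed forward, then the sum-of-squares error for one observation
    z, y = feed_forward(x, W1, W2)
    error = 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, t))
    # Step 2a: output errors, delta_k = y_k - t_k
    delta_out = [yk - tk for yk, tk in zip(y, t)]
    # Step 2b: hidden errors via the chain rule; tanh'(a) = 1 - tanh(a)^2
    delta_hid = [(1.0 - zj ** 2)
                 * sum(W2[k][j] * delta_out[k] for k in range(len(W2)))
                 for j, zj in enumerate(z)]
    # Each gradient entry is delta (receiving end) times value (sending end)
    grad_W2 = [[dk * zj for zj in z] for dk in delta_out]
    grad_W1 = [[dj * xi for xi in x] for dj in delta_hid]
    return error, grad_W1, grad_W2

# Hypothetical data and weights: 2 inputs, 3 hidden units, 1 output
x, t = [0.5, -1.0], [0.25]
W1 = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]]
W2 = [[0.7, -0.3, 0.5]]
error, grad_W1, grad_W2 = error_and_gradient(x, t, W1, W2)

# Finite-difference check of one hidden-layer weight
eps = 1e-6
W1_plus = [row[:] for row in W1]
W1_plus[1][0] += eps
W1_minus = [row[:] for row in W1]
W1_minus[1][0] -= eps
numeric = (error_and_gradient(x, t, W1_plus, W2)[0]
           - error_and_gradient(x, t, W1_minus, W2)[0]) / (2 * eps)
```

Step 3 corresponds to calling `error_and_gradient` repeatedly from a numerical optimizer, summing the per-observation results over the data set.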

- 35. Overfitting The complexity of the network is determined by the hidden layer – and overfitting is still a problem. Use e.g. test datasets to alleviate this problem.

- 36. Early stopping ...or just stop the training when overfitting starts to occur. (Plot legend: training data, test data.)
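A minimal sketch of early stopping, assuming the training loop is exposed as two callbacks. The names `early_stopping`, `train_step`, `test_error` and the `patience` parameter are hypothetical, and the toy error curve stands in for a real network:

```python
def early_stopping(train_step, test_error, max_iterations=1000, patience=5):
    """Run train_step() until test_error() has not improved for `patience` iterations."""
    best_error = float("inf")
    best_iteration = 0
    for iteration in range(1, max_iterations + 1):
        train_step()
        current = test_error()
        if current < best_error:
            best_error, best_iteration = current, iteration
        elif iteration - best_iteration >= patience:
            break  # test error has stopped improving: likely overfitting
    return best_iteration, best_error

# Toy stand-in for a real training loop: the test error falls, then rises
state = {"iteration": 0}

def train_step():
    state["iteration"] += 1

def test_error():
    return (state["iteration"] - 10) ** 2 + 1.0

best_iteration, best_error = early_stopping(train_step, test_error)
```

In a real setting one would also keep a copy of the weights from the best iteration, since training continues for a few steps past it before stopping.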

- 37. Summary
  - Neural networks as general regression/classification models
  - Feed-forward evaluation
  - Maximum-likelihood framework
  - Error back-propagation to obtain the gradient