Poster: FOX

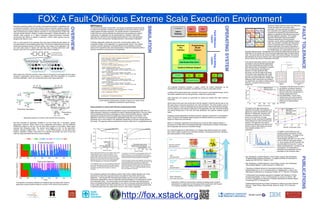

FOX: A Fault-Oblivious Extreme Scale Execution Environment

OVERVIEW

Exascale computing systems will provide a thousand-fold increase in parallelism and a proportional increase in failure rate relative to today's machines. Systems software for exascale machines must provide the infrastructure to support existing applications while simultaneously enabling efficient execution of new programming models that naturally express dynamic, adaptive, irregular computation; coupled simulations; and massive data analysis in a highly unreliable, energy-constrained hardware environment with billions of threads of execution. Further, these systems must be designed with failure in mind.

FOX is a new system for the exascale which will support distributed data objects as first-class objects in the operating system itself. This memory-based data store will be named and accessed as part of the file system name space of the application. We can build many types of objects with this data store, including data-driven work queues, which will in turn support applications with inherent resilience.

Work queues are a familiar concept in many areas of computing; we will apply the work queue concept to applications. Shown below is a graphical data flow description of a quantum chemistry application, which can be executed using a work queue approach.

[Figure: Dependency graph for a portion of the Hartree-Fock procedure. Labels: Elementary Operations, Dependency Graph, Assembly. Node labels include D(k,l), G(i,j,k,l), J(d,i,j), F(i,j), S(i,j), Fi(d,s,i,j), and R.]

SST/macro SIMULATION

The Structural Simulation Toolkit (SST) macroscale components provide the ability to explore the interaction of software and hardware for full scale machines using a coarse-grained simulation approach. The parallel machine is represented by models which are used to estimate the performance of processing and network components. An application can be represented by a "skeleton" code which replicates the control flow and message passing behavior of the real application without the cost of doing actual message passing or heavyweight computation.

A skeleton application models the control flow, communication pattern, and computation pattern of the application in a coarse-grained manner. This method provides a powerful approach to evaluate efficiency and scalability at extreme scales and to experiment with code reorganization or high-level refactoring without having to rewrite the numerical part of an application.

    // Set up the instructions object to tell the processor model
    // how many fused multiply-add instructions each compute call executes
    boost::shared_ptr<sstmac::eventdata> instructions =
        sstmac::eventdata::construct();
    instructions->set_event("FMA", blockrowsize*blockcolsize*blocklnksize);
    // Iterate over number of remote row and column blocks
    for (int i=0; i<nblock-1; i++) {
      std::vector<sstmac::mpiapi::mpirequest_t> reqs;
      // One request handle, reused for every non-blocking call below
      sstmac::mpiapi::mpirequest_t req;
      // Begin non-blocking left shift of A blocks
      mpi()->isend(blocksize, sstmac::mpitype::mpi_double,
                   sstmac::mpiid(myleft), sstmac::mpitag(0), world, req);
      reqs.push_back(req);
      mpi()->irecv(blocksize, sstmac::mpitype::mpi_double,
                   sstmac::mpiid(myright), sstmac::mpitag(0), world, req);
      reqs.push_back(req);
      // Begin non-blocking down shift of B blocks
      mpi()->isend(blocksize, sstmac::mpitype::mpi_double,
                   sstmac::mpiid(myup), sstmac::mpitag(0), world, req);
      reqs.push_back(req);
      mpi()->irecv(blocksize, sstmac::mpitype::mpi_double,
                   sstmac::mpiid(mydown), sstmac::mpitag(0), world, req);
      reqs.push_back(req);
      // Simulate computation with current blocks
      compute_api()->compute(instructions);
      std::vector<sstmac::mpiapi::const_mpistatus_t> statuses;
      // Wait for data needed for next iteration
      mpi()->waitall(reqs, statuses);
    }
    // Simulate computation with blocks received during last loop iteration
    compute_api()->compute(instructions);

A code fragment implementing a skeleton program for a dense matrix multiplication (restricted to square blocks).

Using simulation to explore fault oblivious programming models

Major effort is required to rewrite an application using a new programming model, so it is highly desirable to understand the ease of expressing algorithms in the new model and the expected performance advantages on future machines before doing so. Skeleton applications provide the basis for programming model exploration in SST/macro. The application control flow is represented by lightweight threads, and each of these threads represents one or more threads in the application (depending on the level of detail desired in the model). This approach allows programmers to specify control flow in a straightforward way.

We have simulated an application modified to use this model and, in simulation, parallel performance improves. Shown below is the comparative performance, with the traditional SPMD-style program on the top and the data-driven program on the bottom. White space indicates idle computing nodes. The second cycle begins at t=16.7 on the data-driven program, whereas it begins later, at t=16.9, on the SPMD program. On the data-driven program, utilization is higher, cycle times for an iteration are shorter, and work on the next cycle can begin well before the current cycle completes.

[Figure: Parallel Efficiency (0.00 to 1.00) versus Number of Cores (10^2 to 10^6). Series: Crossbar (DS), Fat-tree (DS), Torus, Torus (DS), Degraded Fat-tree (DS), Degraded Torus.]

Weak scaling parallel efficiency of the systolic matrix-matrix multiply algorithm under a variety of conditions: 1) full crossbar network (DS), 2) fat-tree formed from radix 36 switches (DS), 3) 2D torus network, 4) 2D torus network (DS), 5) fat-tree formed from radix 36 switches with a single degraded node (DS), 6) 2D torus with a single degraded node. DS designates use of the direct send algorithm. In the "degraded node" runs, the computational performance of one node was reduced to half that of the other nodes in the system. This type of performance reduction is found in real systems due to thermal ramp-down or resource contention.

[Figure: Parallel Efficiency (0.00 to 1.00) versus Number of Nodes (1 to 4096). Series: Theoretically optimal scaling, Expected without load balancing, Simple dynamic load balancing, Systolic algorithm.]

A comparison of the effect of a single degraded node on the parallel efficiency of an actor-based algorithm with simple dynamic load-balancing and the traditional systolic algorithm. A fat-tree formed from radix 36 switches was simulated. In both the systolic and direct send algorithms, the full matrix is evenly decomposed onto all nodes in the parallel system. The systolic algorithm diffuses data between nodes as the calculation progresses, while the direct send (DS) algorithm fetches data directly from the neighbor node that owns the given submatrix. The actor model implementation uses the

OPERATING SYSTEM

[Figure: FOX software stack and file view. Labels: User's Desktop (% ls /data/dht_A); App 1 ... App i; Fault Oblivious Application Workflow Layers (Abstract File Interface, Programming Model Support); Fault Hiding Layers (Task Management with coarse-grained operation queue, fine-grained queues, preload queues, ready queues; Distributed Data Store); Systems Software Support; Compute Resource. Lower panel: dht_A, dht_A.part1 ... partn, task 1, task 3, task n, task management, matrix_C.part3, data.part1, node 0 ... node n.]

The proposed framework provides a simple, uniform file system abstraction as the mechanism for parallel computation and distribution of data to computation blocks.

Locating and accessing tasks from a process's work queue is accomplished through normal file system operations such as listing directory contents and opening files.

Data distribution and access are performed by writing and reading files within directory hierarchies.

Shown above is the user view (at the top) of the file /data/dht_A and the way the view of one file maps down to resources provided by one node (at the bottom). The node contains a part of the file /data/dht_A, which is distributed across several nodes, with redundant block storage. The OS presents a fault oblivious abstraction to the application and user, with fault handling and hiding infrastructure in the work and data distribution and systems software support layers.

Emerging exascale applications will feature dynamic mapping of resources to computational nodes. The goal of the Scalable Elastic Systems Architecture (SESA) is to provide system support for these new environments.

SESA is a multi-layer organization that exploits prior results for SMP scalable software. It introduces an infrastructure for developing application-tuned libraries of elastic components that encapsulate and account for hardware change.

Our LibraryOS approach to SESA allows us to collapse deep software stacks into a single layer which is tightly coupled to the underlying hardware, increasing performance and efficiency and lowering the latency of key operations.

PUBLICATIONS

• Dan Schatzberg, Jonathan Appavoo, Orran Krieger, and Eric Van Hensbergen, "Scalable Elastic Systems Architecture", To appear at RESOLVE Workshop co-located with ASPLOS 2011, March 5, 2011.
• Dan Schatzberg, Jonathan Appavoo, Orran Krieger, and Eric Van Hensbergen, "Why Elasticity Matters", Under submission to HotOS 2011.

FAULT TOLERANCE

Checksum-based schemes ensure fault tolerance of read-only data with much lower space overheads. We have extended the approaches to the computation and mapping of parities to processors as employed by fault tolerant linear algebra, an algorithmic fault tolerance technique for linear algebraic operations. The scalable algorithms developed support generalized Cartesian distributions involving arbitrary processor counts, arbitrary specification of failure units, and colocation of parities with data blocks. In the 2-D processor grid in the figure, note the irregular distribution of processors (denoted by P_(i,j)) among the failure units (denoted by N_k).

For modified data, we create shadow copies of important data structures. All operations that modify the original data structures are duplicated on the shadow data. By mirroring the application data on distinct failure units, we ensure that we can recover its state in the event of failures. We ensured fault tolerance for the key matrices in the most expensive Hartree-Fock procedure (shown above) with almost unnoticeable overheads.

Fault tolerant data stores (shown to the right) are based on the premise that applications have a few critical data structures and that each data structure is accessed in a specific form in a given phase. By limiting our attention to certain significant data structures, we limit the state to be saved. The access mode associated with a data structure in a given phase can be used to tailor the form of the data store. Rather than treating an SMP node as the unit of failure, our approaches can adapt to arbitrary failure units (e.g., processors sharing a power supply unit).

[Figure: Time per step (s), 10^0 to 10^4, versus Number of processors (32 to 2048). Series: NWChem twoel, NWChem twoel + FT.]

The graph to the left shows the cost of the two-electron contribution (twoel) in the Hartree-Fock calculation with and without the maintenance of shadow copies (Fock matrix size = 3600x3600). The two lines overlap due to the small overhead, and the included error bars are not visible due to the small standard deviation.

[Figure: Compute time (µs), 10^2 to 10^6, versus Number of processor cores (2^10 to 2^18). Series: ALL-ROW, PARTIAL-ROW, ALL-SYM, PARTIAL-SYM.]

The graph to the right shows the time to determine the location and distribution of parities for a given data distribution (and different FT distribution schemes) with increase in process count. This time is shown to be small even for large processor counts, showing the feasibility of this approach on future extreme scale systems. This approach was used to compute the checksums and recover lost blocks on failure. Both actions are shown to be scalable (details in CF'11 publication).

In addition to performance, we are studying the energy consumption of fault tolerance techniques. The graph to the left is the power signature of the HF implementation with 4Be on a rack of 224 cores on NW-ICE (with liquid cooling; i.e., local cooling and PSU included). We use time dilation to show the 11 power spikes representing the 11 iterations until convergence. The initial spike is attributed to the initialization and the generation of the Schwartz matrix.
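The checksum-based protection of read-only data described in the poster can be illustrated with a small sketch. It assumes the simplest possible parity, an element-wise sum over equally sized blocks; the poster does not state which checksum FOX uses, and the names `Block`, `compute_parity`, and `recover_block` are hypothetical. The mapping of parities to failure units, which is the poster's actual contribution, is not modeled here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Block = std::vector<double>;

// Parity block: element-wise sum of all data blocks.
// Assumption: a sum checksum; the poster does not specify the scheme.
Block compute_parity(const std::vector<Block>& blocks) {
  assert(!blocks.empty());
  Block parity(blocks.front().size(), 0.0);
  for (const Block& b : blocks) {
    assert(b.size() == parity.size());
    for (std::size_t i = 0; i < b.size(); ++i) parity[i] += b[i];
  }
  return parity;
}

// Rebuild the block at index `lost` by subtracting every surviving block
// from the parity. The contents of the lost block are never read.
Block recover_block(const std::vector<Block>& blocks, std::size_t lost,
                    const Block& parity) {
  assert(lost < blocks.size());
  Block rebuilt = parity;
  for (std::size_t k = 0; k < blocks.size(); ++k) {
    if (k == lost) continue;
    assert(blocks[k].size() == rebuilt.size());
    for (std::size_t i = 0; i < rebuilt.size(); ++i) rebuilt[i] -= blocks[k][i];
  }
  return rebuilt;
}
```

One parity block protects any number of data blocks against a single loss, which is where the space saving over full replication comes from. With general floating-point data the rebuilt values can differ from the originals by rounding error, so a real implementation would need to account for that.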
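The shadow-copy scheme for modified data, in which every modifying operation is duplicated on a mirror, can be sketched in a single process as follows. This is only an illustration under stated assumptions: `ShadowedVector` and its methods are hypothetical names, both copies live in one address space, and `lose_primary` merely stands in for the failure of the unit holding the primary copy. In the approach the poster describes, the shadow would reside on a distinct failure unit.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical wrapper; not part of FOX or NWChem.
class ShadowedVector {
 public:
  explicit ShadowedVector(std::size_t n) : primary_(n, 0.0), shadow_(n, 0.0) {}

  // Every modifying operation is applied to both the primary and the shadow.
  void accumulate(std::size_t i, double value) {
    assert(i < primary_.size());
    primary_[i] += value;
    shadow_[i] += value;
  }

  double get(std::size_t i) const {
    assert(i < primary_.size());
    return primary_[i];
  }

  // Stand-in for losing the failure unit that holds the primary copy.
  void lose_primary() { primary_.assign(primary_.size(), 0.0); }

  // Restore the primary copy from the shadow.
  void recover() { primary_ = shadow_; }

 private:
  std::vector<double> primary_;
  std::vector<double> shadow_;
};
```

Mirroring doubles the memory and update cost for the protected structure, so it pays off when, as the poster argues, only a few critical data structures need to be covered.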