Jeff Viaud Poster Final Edition

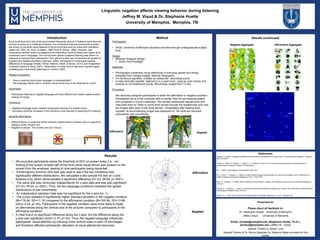

Linguistic negation affects viewing behavior during listening

Jeffrey M. Viaud & Dr. Stephanie Huette, University of Memphis, Memphis, TN

Introduction

Eyes move frequently to sample the environment (around 3 fixations every second), and these movements are driven by a multitude of factors. Eye movements are at times driven by low-level visual features of the environment such as colors and orientation biases (Itti, 2007; Itti, Koch, & Niebur, 1998; Koch & Ullman, 1985). However, eye movements are also driven by category-level information (such as faces) and by higher-level contextual cues in language. The current work uses a passive listening task in which no response is required from participants. Even without a task, eye movements are guided by linguistic and spatial information (Altmann, 2004), simulations of implicit grammatical differences in language (Huette, Winter, Matlock, Ardell, & Spivey, 2014), and imagination of scenes (Spivey & Geng, 2001). Observation of this kind of high-level cognitive goal mediating eye movements dates back to Yarbus (1967).

Research Questions

• How is meaning found when language is underspecified?
• Will language interact with a complex visual scene even in the absence of a task?

Hypotheses

• Participants listening to negated language will have different eye fixation patterns when viewing an image than participants listening to affirmative language.

Predictions

• Negated language would mediate subsequent viewing of a related scene.
• Saccades would be increased in the directions most relevant to searching for meaning.

Semantic Alternatives

• Terms or words that share a semantic relationship with another word with regard to category, traits, location, etc.
• Negation example: The cookies are not in the jar.

Method

Participants

• N=25, University of Memphis students recruited through the undergraduate subject pool.

Design

• Between-subjects design
• Audio-first paradigm

Materials

• Photographs containing visual references to everyday places and things, taken from Google Images and National Geographic.
• Vignettes of 3-4 sentences, written by the researcher, each describing a scene.
• Vignettes were recorded by a male speaker using an even tempo and prosody, so as not to emphasize particular words, and were listened to in a quiet room. Recordings ranged from 13 to 22 s.

Procedure

We randomly assigned participants to either the affirmative or the negated condition. Participants sat at a Dell computer with a remote Tobii X2 eye tracking system and completed a 9-point calibration. We handed participants headphones and instructed them to “listen to some short stories through the headphones and view the images after each of the short stories”. Immediately after each vignette, its accompanying image was displayed for 15 s while we recorded participants’ eye movements.

References

Altmann, G. (2004). Language-mediated eye movements in the absence of a visual world: The ‘blank screen paradigm’. Cognition, 93(2), B79-B87.
Huette, S., Winter, B., Matlock, T., Ardell, D. H., & Spivey, M. (2014). Eye movements during listening reveal spontaneous grammatical processing. Frontiers in Psychology, 5.
Itti, L. (2007). Visual salience. Scholarpedia, 2(9), 3327.
Itti, L., Koch, C., & Niebur, E. (1998). A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(11), 1254-1259.
Koch, C., & Ullman, S. (1985). Shifts in selective visual attention: Towards the underlying neural circuitry. Human Neurobiology, 4, 219-227.
Spivey, M. J., & Geng, J. J. (2001). Oculomotor mechanisms activated by imagery and memory: Eye movements to absent objects. Psychological Research, 65(4), 235-241.
Yarbus, A. L. (1967). Eye movements during perception of complex objects. In Eye movements and vision (pp. 171-211). Springer US.

Presented at:

Please direct all feedback to:
Dr. Stephanie Huette, University of Memphis (shuette@memphis.edu)
Jeffery M. Viaud, University of Memphis (jmviaud@memphis.edu)

Special thanks to Jenee’ Love.
Special thanks to Dr. Bonny Banerjee for the saliency maps provided for this poster.

Results

• We excluded from the analyses participants who fell below a threshold of 50% on-screen looks (i.e., who were not looking at the screen at least half of the time) while visual stimuli were present on the screen, which led to nine participants being discarded.
• A Kolmogorov-Smirnov (KS) test was used to determine whether the two conditions had significantly different distributions. We calculated a two-sample KS test on x-axis fixations only, which demonstrated a significant difference (D=.03, df=24, p<.0001).
• The same test was conducted independently for the y-axis data and was also significant (D=.03, df=24, p<.0001). Thus, the two language conditions mediated the spatial distributions of eye movements.
• An independent-samples t-test was not significant for the x-axis (p>.1).
• The y-axis revealed a significantly higher standard deviation in the negated condition (M=178.94, SD=11.16) than in the affirmative condition (M=165.99, SD=13.86; t(20)=-2.43, p<.05). Participants in the negated condition were more likely than participants in the affirmative condition to fixate on alternatives along the vertical axis of the pictures.
• A t-test found no significant difference along the x-axis, but the difference along the y-axis was significant (t(23)=-2.75, p<.05). Thus, the negated language influenced participants’ visual attention by inducing more vertical looks in each of the images, and therefore affected participants’ allocation of visual attentional resources.

[Figure panels: Affirmative, Negated, Affirmative Aggregate, Negated Aggregate]
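The exclusion rule and the two kinds of test reported in the results can be illustrated with a minimal Python sketch. This is not the analysis code used for the poster: the function names, the toy numbers, and the choice of a pooled-variance (Student's) t statistic are all assumptions made for illustration. Only the test statistics are computed here, using the standard library; p-values would normally come from a statistics package (for example SciPy's `ks_2samp` and `ttest_ind`).

```python
from bisect import bisect_right
from math import sqrt
from statistics import mean, stdev


def keep_participant(on_screen_samples, total_samples, threshold=0.5):
    """Keep a participant whose proportion of on-screen looks meets the threshold."""
    return total_samples > 0 and on_screen_samples / total_samples >= threshold


def ks_statistic(sample_a, sample_b):
    """Two-sample KS statistic D: the largest gap between the two empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    # Both empirical CDFs only change at observed values, so checking the
    # gap at every observed value is enough to find the maximum.
    for value in a + b:
        cdf_a = bisect_right(a, value) / len(a)
        cdf_b = bisect_right(b, value) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic and its degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    var_a, var_b = stdev(group_a) ** 2, stdev(group_b) ** 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    t = (mean(group_a) - mean(group_b)) / sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return t, n_a + n_b - 2


# Hypothetical toy data, NOT values from the study.
affirmative_x = [1.0, 2.0, 3.0, 4.0]   # x-axis fixation positions, condition 1
negated_x = [3.0, 4.0, 5.0, 6.0]       # x-axis fixation positions, condition 2
d_x = ks_statistic(affirmative_x, negated_x)

# Per-participant vertical spread (SD of y-axis fixations), one value each.
affirmative_sd_y = [150.0, 160.0, 170.0, 180.0]
negated_sd_y = [170.0, 180.0, 190.0, 200.0]
t_y, df_y = pooled_t(affirmative_sd_y, negated_sd_y)
```

With the affirmative group passed first, a negative t indicates a larger mean vertical spread in the negated group, which matches the sign convention of the t values reported in the results.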